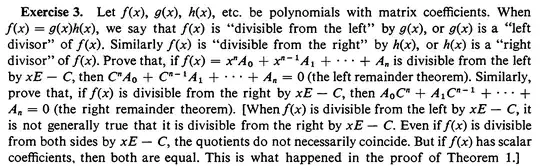

I am reading "Linear Algebra" by Ichiro Satake.

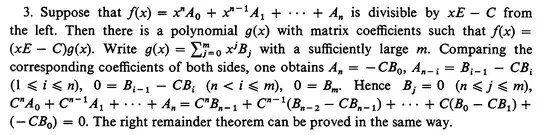

Exercise 3 and the author's answer to it are shown above.

I don't understand why the author's answer is so long.

My answer is very short:

Let $x^nA_0+x^{n-1}A_1+\cdots+A_n=(xE-C)(x^{n-1}B_0+x^{n-2}B_1+\cdots+B_{n-1})$.

Since $CC^i=C^iC$ for any $i\in\{0,1,2,\dots\}$, $$O=(CE-C)(C^{n-1}B_0+C^{n-2}B_1+\cdots+B_{n-1})\\=C^nB_0+(C^{n-1}B_1-CC^{n-1}B_0)+\cdots+(CB_{n-1}-CCB_{n-2})-CB_{n-1}\\=C^nB_0+(C^{n-1}B_1-C^{n-1}CB_0)+\cdots+(CB_{n-1}-CCB_{n-2})-CB_{n-1}\\=C^nB_0+C^{n-1}(B_1-CB_0)+\cdots+C(B_{n-1}-CB_{n-2})-CB_{n-1}\\=C^nA_0+C^{n-1}A_1+\cdots+A_n.$$
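For reference, here is the same computation in compact form. The conventions $B_{-1}=B_n=O$ are introduced only for convenience. Comparing coefficients of powers of $x$ in $(xE-C)(x^{n-1}B_0+\cdots+B_{n-1})$ gives $A_0=B_0$, $A_i=B_i-CB_{i-1}$ for $1\le i\le n-1$, and $A_n=-CB_{n-1}$, which is the single formula $A_i=B_i-CB_{i-1}$ for $0\le i\le n$. With the powers of $C$ kept on the left, the sum telescopes (substituting $j=i-1$ in the second sum):

$$\sum_{i=0}^{n} C^{n-i}A_i=\sum_{i=0}^{n} C^{n-i}B_i-\sum_{i=0}^{n} C^{n-i+1}B_{i-1}=\sum_{i=0}^{n} C^{n-i}B_i-\sum_{j=-1}^{n-1} C^{n-j}B_{j}=B_n-C^{n+1}B_{-1}=O.$$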

Please compare the computation above with the following one:

Since $Cx^i=x^iC$ for any $i\in\{0,1,2,\dots\}$, $$(xE-C)(x^{n-1}B_0+x^{n-2}B_1+\cdots+B_{n-1})\\=x^nB_0+(x^{n-1}B_1-Cx^{n-1}B_0)+\cdots+(xB_{n-1}-CxB_{n-2})-CB_{n-1}\\=x^nB_0+(x^{n-1}B_1-x^{n-1}CB_0)+\cdots+(xB_{n-1}-xCB_{n-2})-CB_{n-1}\\=x^nB_0+x^{n-1}(B_1-CB_0)+\cdots+x(B_{n-1}-CB_{n-2})-CB_{n-1}\\=x^nA_0+x^{n-1}A_1+\cdots+A_n.$$
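As a quick numerical sanity check, here is a minimal Python sketch using exact integer matrices. It builds $A_0,\dots,A_n$ from arbitrary $B_0,\dots,B_{n-1}$ using the coefficient relations read off from the expansion ($A_0=B_0$, $A_i=B_i-CB_{i-1}$, $A_n=-CB_{n-1}$) and checks that $C^nA_0+C^{n-1}A_1+\cdots+A_n=O$. The helper names (`coefficients_A`, `left_value`, `right_value`, etc.) and the test matrices are hypothetical choices made for illustration. The last lines also evaluate the sum with the powers of $C$ on the right. For $n=1$ and a $B_0$ that does not commute with $C$, that version is not $O$, which suggests that the order of the factors in the identity does matter.

```python
import random

# Square matrices are lists of lists of ints, so all arithmetic is exact.

def zeros(d):
    """d x d zero matrix O."""
    return [[0] * d for _ in range(d)]

def identity(d):
    """d x d identity matrix E."""
    return [[int(i == j) for j in range(d)] for i in range(d)]

def mat_mul(X, Y):
    """Matrix product XY."""
    d = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(d)) for j in range(d)]
            for i in range(d)]

def mat_add(X, Y):
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def mat_sub(X, Y):
    return [[a - b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def mat_pow(X, k):
    """X^k, with X^0 = E."""
    R = identity(len(X))
    for _ in range(k):
        R = mat_mul(R, X)
    return R

def coefficients_A(C, B):
    """A_0, ..., A_n of (xE - C)(x^{n-1}B_0 + ... + B_{n-1}), using
    A_i = B_i - C B_{i-1} with B_{-1} = B_n = O."""
    d = len(C)
    prev = zeros(d)  # B_{-1} = O
    A = []
    for Bi in B + [zeros(d)]:  # append B_n = O
        A.append(mat_sub(Bi, mat_mul(C, prev)))
        prev = Bi
    return A

def left_value(C, A):
    """C^n A_0 + C^{n-1} A_1 + ... + A_n (powers of C on the left)."""
    n = len(A) - 1
    total = zeros(len(C))
    for i, Ai in enumerate(A):
        total = mat_add(total, mat_mul(mat_pow(C, n - i), Ai))
    return total

def right_value(C, A):
    """A_0 C^n + A_1 C^{n-1} + ... + A_n (powers of C on the right)."""
    n = len(A) - 1
    total = zeros(len(C))
    for i, Ai in enumerate(A):
        total = mat_add(total, mat_mul(Ai, mat_pow(C, n - i)))
    return total

# Arbitrary 3x3 integer test data with n = 4.
random.seed(0)
d, n = 3, 4
C = [[random.randint(-5, 5) for _ in range(d)] for _ in range(d)]
B = [[[random.randint(-5, 5) for _ in range(d)] for _ in range(d)]
     for _ in range(n)]
A = coefficients_A(C, B)
print(left_value(C, A) == zeros(d))  # True

# n = 1 with B_0 not commuting with C: A_0 = B_0, A_1 = -C B_0.
C1 = [[0, 1], [0, 0]]
B1 = [[[1, 0], [0, 0]]]
A1 = coefficients_A(C1, B1)
print(left_value(C1, A1))   # [[0, 0], [0, 0]]
print(right_value(C1, A1))  # [[0, 1], [0, 0]]
```

In the $n=1$ example, $C^1A_0+A_1=CB_0-CB_0=O$, while $A_0C+A_1=B_0C-CB_0$, which is nonzero here.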

Honestly, I think that $C^nA_0+C^{n-1}A_1+\cdots+A_n=O$ is obvious.

But I am afraid my answer might be wrong.

Is my answer wrong or bad?