There is a package named segmented in R. Is there a similar package in python?

3 Answers

No, currently there isn't a package in Python that does segmented linear regression as thoroughly as those in R (e.g. R packages listed in this blog post). Alternatively, you can use a Bayesian Markov Chain Monte Carlo algorithm in Python to create your segmented model.

Segmented linear regression, as implemented by all the R packages in the above link, doesn't permit extra parameter constraints (i.e. priors), and because these packages take a frequentist approach, the resulting model doesn't give you probability distributions for the model parameters (i.e. breakpoints, slopes, etc). Defining a segmented model in statsmodels, which is frequentist, is even more restrictive because the model requires a fixed x-coordinate breakpoint.

You can design a segmented model in Python using the Bayesian Markov Chain Monte Carlo algorithm emcee. Jake Vanderplas wrote a useful blog post and paper for how to implement emcee with comparisons to PyMC and PyStan.

Example:

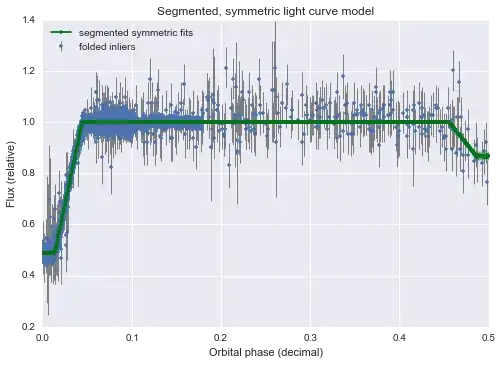

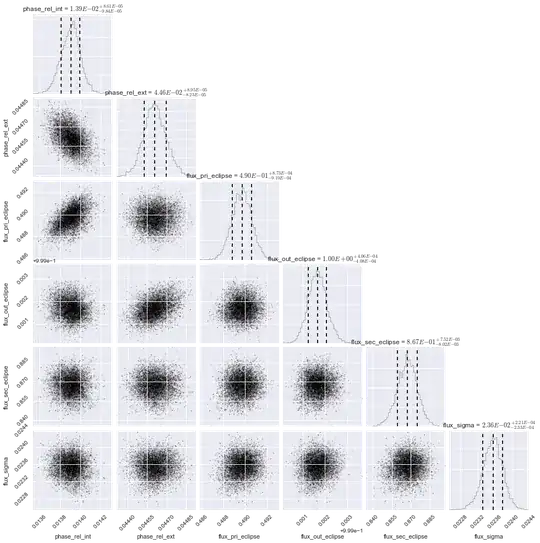

- Segmented model with data:

- Probability distributions of fit parameters:

- 311

- 1

- 5

Yes, there is now a Python package called piecewise-regression that implements similar tools to the segmented R package, using the same algorithm.

You can get it from pip

pip install piecewise-regression

There are full docs, a github repo, and an accompanying paper.

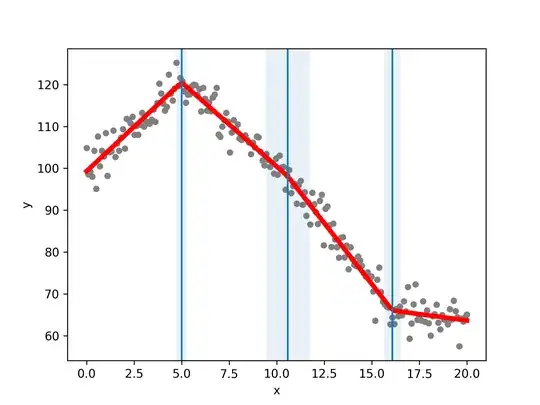

Here's an example fit:

It's very easy-to-use, code examples here.

Full disclosure: I wrote the package.

- 161

- 3

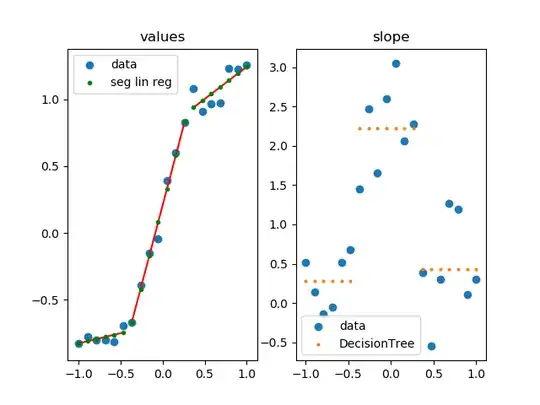

This is an implementation of my own.

import numpy as np

import matplotlib.pylab as plt

from sklearn.tree import DecisionTreeRegressor

from sklearn.linear_model import LinearRegression

# parameters for setup

n_data = 20

# segmented linear regression parameters

n_seg = 3

np.random.seed(0)

fig, (ax0, ax1) = plt.subplots(1, 2)

# example 1

#xs = np.sort(np.random.rand(n_data))

#ys = np.random.rand(n_data) * .3 + np.tanh(5* (xs -.5))

# example 2

xs = np.linspace(-1, 1, 20)

ys = np.random.rand(n_data) * .3 + np.tanh(3*xs)

dys = np.gradient(ys, xs)

rgr = DecisionTreeRegressor(max_leaf_nodes=n_seg)

rgr.fit(xs.reshape(-1, 1), dys.reshape(-1, 1))

dys_dt = rgr.predict(xs.reshape(-1, 1)).flatten()

ys_sl = np.ones(len(xs)) * np.nan

for y in np.unique(dys_dt):

msk = dys_dt == y

lin_reg = LinearRegression()

lin_reg.fit(xs[msk].reshape(-1, 1), ys[msk].reshape(-1, 1))

ys_sl[msk] = lin_reg.predict(xs[msk].reshape(-1, 1)).flatten()

ax0.plot([xs[msk][0], xs[msk][-1]],

[ys_sl[msk][0], ys_sl[msk][-1]],

color='r', zorder=1)

ax0.set_title('values')

ax0.scatter(xs, ys, label='data')

ax0.scatter(xs, ys_sl, s=3**2, label='seg lin reg', color='g', zorder=5)

ax0.legend()

ax1.set_title('slope')

ax1.scatter(xs, dys, label='data')

ax1.scatter(xs, dys_dt, label='DecisionTree', s=2**2)

ax1.legend()

plt.show()

- 131

- 4