Consider two vector variables $x, y \in \mathbb{R}^n$, neither of which is given. We want to determine the set of all $x$ for which there exists some $y$ satisfying the constraints:

$$ \begin{cases} \|x - y\|_{\infty} \leq a, \\ \|y\|_{1} \leq b, \end{cases} $$ where $a, b \in \mathbb{R}^+$, and $\|\cdot\|_1$ and $\|\cdot\|_{\infty}$ denote the $L_1$ and $L_{\infty}$ norms, respectively.

My goal is to express this region of $x$ explicitly, without relying on the auxiliary vector $y$.
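To make the definition concrete, here is a minimal Python sketch (the function name `satisfies_constraints` is hypothetical, not from the original post) that checks whether a given pair $(x, y)$ satisfies both constraints. A point $x$ belongs to the region exactly when at least one such $y$ exists, which is why eliminating $y$ is the hard part.

```python
def satisfies_constraints(x, y, a, b):
    """Return True if ||x - y||_inf <= a and ||y||_1 <= b (assumes len(x) == len(y) >= 1)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length n")
    # L_inf norm of x - y: largest coordinate-wise deviation
    inf_norm_diff = max(abs(xi - yi) for xi, yi in zip(x, y))
    # L_1 norm of y: sum of absolute coordinates
    l1_norm_y = sum(abs(yi) for yi in y)
    return inf_norm_diff <= a and l1_norm_y <= b


# y = (0, 0.8) certifies that x = (0.5, 1.5) is in the region for a = b = 1
print(satisfies_constraints([0.5, 1.5], [0.0, 0.8], a=1, b=1))  # True
```

Note that a `False` result only rules out that particular $y$; it does not show that $x$ lies outside the region.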

My thoughts:

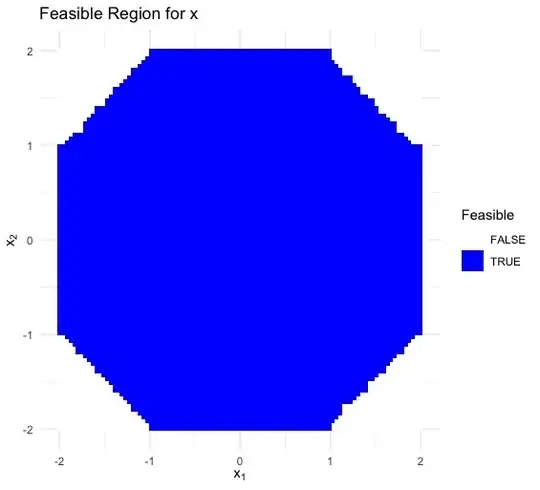

I did use R to solve this problem with $n=2, a=1, b=1$, and made the following graph in 2D space:

The first constraint is equivalent to $$ -a \leq x_i - y_i \leq a \quad \forall i. $$ Rearranging, we have $$ x_i - a \leq y_i \leq x_i + a \quad \forall i, $$ which implies that $$ |y_i| \leq \min(|x_i - a|, |x_i + a|). $$ To satisfy $$ \sum_{i=1}^n |y_i| \leq b, $$ I then imposed the condition $$ \sum_{i=1}^n \min(|x_i - a|, |x_i + a|) \leq b. $$

As @RobPratt pointed out, this solution is wrong because it excludes $(x_1, x_2) = (0, 0)$: for $a = b = 1$ the left-hand side equals $2 > b$ at the origin, even though $y = 0$ satisfies both constraints.
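The counterexample can be checked directly. This minimal Python sketch (the function names `proposed_condition` and `is_witness` are hypothetical) evaluates the proposed condition at the origin for $n = 2$, $a = b = 1$ and compares it with the trivial choice $y = 0$.

```python
def proposed_condition(x, a, b):
    """Candidate condition from the derivation: sum_i min(|x_i - a|, |x_i + a|) <= b."""
    return sum(min(abs(xi - a), abs(xi + a)) for xi in x) <= b


def is_witness(x, y, a, b):
    """True if y certifies that x is in the region (both original constraints hold)."""
    return (max(abs(xi - yi) for xi, yi in zip(x, y)) <= a
            and sum(abs(yi) for yi in y) <= b)


a, b = 1, 1
x = [0.0, 0.0]
y = [0.0, 0.0]  # trivial witness: ||x - y||_inf = 0 and ||y||_1 = 0

print(is_witness(x, y, a, b))       # True: the origin is in the region
print(proposed_condition(x, a, b))  # False: 1 + 1 = 2 > b
```

Since $y = 0$ works for every $a, b > 0$, the origin always lies in the region, while the proposed condition evaluates to $n a \leq b$ there and so rejects the origin whenever $n a > b$.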