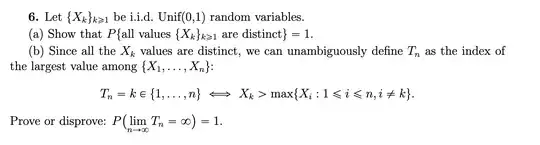

Hello, I am trying to solve the following problem. For part (a), my idea is as follows: $P\{\text{all values are distinct}\}=1-P\{\text{there exist } i\ne j \text{ such that } X_i=X_j\}=1-P\left(\bigcup_{i\ne j}\{X_i=X_j\}\right)$, and by the union bound $P\left(\bigcup_{i\ne j}\{X_i=X_j\}\right)\leq \sum_{i\ne j}P(X_i=X_j).$ So it suffices to show that $P(X_i=X_j)=0$ for any $i\ne j$. To do this, I was going to take the convolution to find the distribution of $X_i-X_j$; then, if we can show it is uniformly distributed, we know any single point will have probability $0$. Is this reasoning accurate?
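
To make the step $P(X_i=X_j)=0$ concrete, here is a sketch of the computation I have in mind. It assumes (my reading of the problem, so this may not match the exact hypotheses) that the $X_i$ are independent with a common density; the symbol $f$ for that density is my own notation:

$$
P(X_i=X_j)=\iint \mathbf{1}\{x=y\}\,f(x)\,f(y)\,dx\,dy=\int f(y)\left(\int_{\{y\}} f(x)\,dx\right)dy=\int f(y)\cdot 0\,dy=0 .
$$

The inner integral is taken over the single point $\{y\}$, which has Lebesgue measure zero, so it vanishes for every $y$. If this is right, the argument would seem to need only independence and the existence of a density, not that $X_i-X_j$ is uniform.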

For part (b), I don't know how to approach it. I want to say that it should be true, since density ensures that we can always find some bigger number less than $1$, and with enough trials we will eventually reach such a value. However, I don't know how to formalize this in the structure given. Any guidance would be very much appreciated.