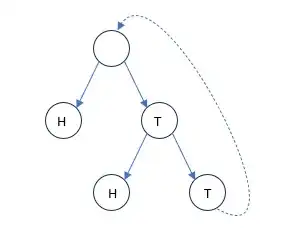

Problem Statement: Two players take turns flipping a fair coin. The first player to get heads wins the game. What is the probability that the player taking the first turn will win the game?

Analysis: Let $x$ be the probability that the next player to flip the coin will win the game. Then $x$ satisfies the equation:

$$x=\frac12\times1+\frac12\times(1-x)$$

since with probability $\frac12$ the player gets heads and wins immediately, and with probability $\frac12$ the player gets tails, after which the opponent becomes the next player to flip and so loses the game with probability $1 - x$. The only solution of this equation is $x = \frac23$, so the probability that the player taking the first turn wins the game is $\frac23$. I have also verified this result empirically.
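As a cross-check that does not depend on choosing the right solution of an equation, the same probability can be computed directly. The first player wins exactly when the first head appears on flip $1, 3, 5, \dots$, that is, after $2k$ tails for some $k \ge 0$. This happens with probability $\left(\frac12\right)^{2k}\cdot\frac12$, and summing the geometric series gives

$$P(\text{first player wins})=\sum_{k=0}^{\infty}\left(\frac12\right)^{2k}\cdot\frac12=\frac12\cdot\frac{1}{1-\frac14}=\frac23.$$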

Question: Which tools can I use to solve the above and similar problems methodically and rigorously? My main issues with the approach above are that:

- I don't know how to produce such equations methodically, so for more complicated scenarios I am not confident that the equations I write down are correct, and

- the method as stated above seems unsound: the fact that the answer satisfies an equation does not immediately imply that a solution of the equation is the answer. In particular, for more complicated scenarios I sometimes get equations that have several solutions, and I have to resort to simulation to find out which one is right.
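For reference, one standard way to write such equations systematically is first-step analysis of an absorbing Markov chain. The Python sketch below encodes the coin game with two transient states, "first player to flip" (state 0) and "second player to flip" (state 1), and lets $h_s$ be the probability that the first player eventually wins starting from state $s$. The state encoding and the helper names `solve_linear` and `simulate` are illustrative choices, not something taken from the question. Writing $Q$ for the one-step transition probabilities between transient states and $r$ for the one-step probabilities of reaching "first player has won", the first-step equations read $(I - Q)h = r$. When an absorbing state can be reached from every transient state, $I - Q$ is invertible, so this linear system has exactly one solution. The code solves it exactly with `fractions.Fraction` and compares the result with a seeded simulation.

```python
from fractions import Fraction
import random


def solve_linear(a, b):
    """Solve the square system a @ x = b exactly by Gauss-Jordan elimination.

    All entries are converted to Fraction, so there is no rounding error.
    Raises ValueError if the matrix is singular (no unique solution).
    """
    n = len(a)
    # Augmented matrix [a | b] as Fractions; the inputs are not modified.
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Pick the first row at or below `col` with a nonzero entry in this column.
        pivot = next((k for k in range(col, n) if m[k][col] != 0), None)
        if pivot is None:
            raise ValueError("singular system: no unique solution")
        m[col], m[pivot] = m[pivot], m[col]
        # Scale the pivot row so the pivot is 1, then clear the column elsewhere.
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for k in range(n):
            if k != col and m[k][col] != 0:
                f = m[k][col]
                m[k] = [vk - f * vc for vk, vc in zip(m[k], m[col])]
    return [m[k][n] for k in range(n)]


# Transient states (illustrative encoding):
#   0 = first player is about to flip, 1 = second player is about to flip.
# h[s] = probability that the first player eventually wins, starting from s.
half = Fraction(1, 2)
Q = [[0, half],      # Q[i][j]: one-step probability of moving from state i to j
     [half, 0]]
r = [half, 0]        # r[i]: one-step probability of "first player has won"

n = len(Q)
i_minus_q = [[(1 if i == j else 0) - Q[i][j] for j in range(n)] for i in range(n)]
h = solve_linear(i_minus_q, r)
print(h)  # [Fraction(2, 3), Fraction(1, 3)]


def simulate(trials, seed=0):
    """Estimate the first player's winning probability by Monte Carlo."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        player = 0                   # the first player flips first
        while rng.random() >= 0.5:   # tails: the other player flips next
            player ^= 1
        if player == 0:              # heads: the player who just flipped wins
            wins += 1
    return wins / trials


print(simulate(100_000))  # should be close to 2/3
```

Here $h_0$ plays the role of $x$ in the analysis above and $h_1 = 1 - x$. For a more complicated game, the same pattern would add one state per distinct situation and fill in $Q$ and $r$; `solve_linear` raises `ValueError` when the system does not have a unique solution.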

Thank you!