[This is a very heuristic explanation of KKT conditions.]

Ref: “Foundations of Applied Mathematics, Vol 2” by Humpherys, Jarvis.

Consider the optimization problem

$${ {\begin{aligned} &\, \text{minimize } \quad f(x) \\ &\, \text{subject to } \quad G(x) \preceq 0, \, \, H(x) = 0 \end{aligned}} }$$

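where ${ f : \mathbb{R} ^n \longrightarrow \mathbb{R} , }$ ${ G = (g _1, \ldots, g _m) : \mathbb{R} ^n \longrightarrow \mathbb{R} ^m }$ and ${ H = (h _1, \ldots, h _{\ell}) : \mathbb{R} ^n \longrightarrow \mathbb{R} ^{\ell} }$ are smooth functions, and ${ \preceq }$ denotes componentwise inequality.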

Let ${ \mathscr{F} := \lbrace x : G(x) \preceq 0, \, H(x) = 0 \rbrace }$ denote the feasible set, and let ${ x ^{\ast} }$ be a local minimizer of ${ f }$ over ${ \mathscr{F} . }$

What necessary conditions must ${ x ^{\ast} }$ satisfy?

For any feasible point ${ x \in \mathscr{F} }$ we can consider the index set of binding constraints

$${ J(x) := \lbrace j : g _j (x) = 0 \rbrace }$$

and the locus of binding constraints

$${ \widetilde{\mathscr{F}}(x) := \lbrace y : H(y) = 0, \, \, g _j (y) = 0 \text{ for all } j \in J(x) \rbrace . }$$
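To make the definitions concrete, here is a minimal Python sketch that tests feasibility and computes ${ J(x) }$ for a small hypothetical problem (an illustration, not from the reference): minimize ${ x _1 ^2 + x _2 ^2 }$ subject to ${ g _1 (x) = 1 - x _1 - x _2 \leq 0 }$ and ${ g _2 (x) = - x _1 \leq 0 , }$ with no equality constraints. The tolerance `tol` is an assumption, needed because exact zeros rarely survive floating-point arithmetic.

```python
# Hypothetical example problem (illustration only, not from the reference):
#   minimize   f(x) = x1^2 + x2^2
#   subject to g1(x) = 1 - x1 - x2 <= 0,   g2(x) = -x1 <= 0   (no equality constraints)

def G(x):
    """Inequality constraint values (g_1(x), ..., g_m(x))."""
    x1, x2 = x
    return [1.0 - x1 - x2, -x1]

def is_feasible(x, tol=1e-9):
    """x lies in the feasible set if every g_j(x) <= 0 (up to tol)."""
    return all(gj <= tol for gj in G(x))

def binding_set(x, tol=1e-9):
    """J(x): indices j (1-based, as in the text) with g_j(x) = 0 up to tol."""
    return {j for j, gj in enumerate(G(x), start=1) if abs(gj) <= tol}

x_star = (0.5, 0.5)
print(is_feasible(x_star), binding_set(x_star))  # True {1}
```

Here ${ \widetilde{\mathscr{F}}(x ^{\ast}) }$ is the line ${ x _1 + x _2 = 1 , }$ since only ${ g _1 }$ binds.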

Note that, by continuity of ${ G , }$ for all ${ y }$ sufficiently close to ${ x ^{\ast} }$ the nonbinding constraints

$${ g _j (y) < 0, \quad \text{ for all } \, \, j \in [m] \setminus J(x ^{\ast}) }$$

are automatically satisfied.

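Hence, near ${ x ^{\ast} , }$ every point of ${ \widetilde{\mathscr{F}}(x ^{\ast}) }$ is feasible. So ${ x ^{\ast} }$ is also a local minimizer of ${ f }$ over ${ \widetilde{\mathscr{F}}(x ^{\ast}) , }$ and we can focus on this set, which is cut out by equality constraints only.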
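For instance, in the hypothetical problem of minimizing ${ x _1 ^2 + x _2 ^2 }$ subject to ${ g _1 (x) = 1 - x _1 - x _2 \leq 0 }$ and ${ g _2 (x) = - x _1 \leq 0 }$ (an illustration, not from the reference), the minimizer is ${ x ^{\ast} = (1/2, 1/2) }$ and only ${ g _1 }$ binds. Since ${ \lvert y _1 - \tfrac{1}{2} \rvert \leq \lVert y - x ^{\ast} \rVert , }$ the nonbinding constraint holds automatically within distance ${ 1/2 }$ of ${ x ^{\ast} }$:

$${ J(x ^{\ast}) = \lbrace 1 \rbrace , \quad \widetilde{\mathscr{F}}(x ^{\ast}) = \lbrace y : y _1 + y _2 = 1 \rbrace , \quad \lVert y - x ^{\ast} \rVert < \tfrac{1}{2} \implies g _2 (y) = - y _1 < 0 . }$$

So near ${ x ^{\ast} }$ the problem reduces to minimizing ${ f }$ on the line ${ y _1 + y _2 = 1 . }$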

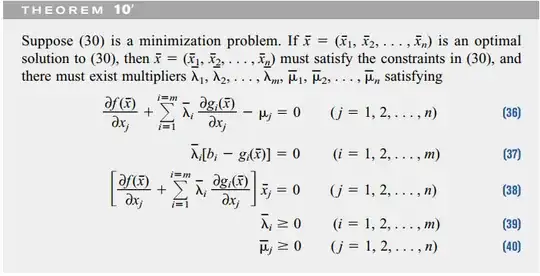

Thm [KKT conditions]

Suppose the local minimizer ${ x ^{\ast} }$ is also a regular point of ${ \widetilde{\mathscr{F}}(x ^{\ast}) . }$ That is, the collection of gradients

$${ (\nabla h _i (x ^{\ast})) _{i=1} ^{\ell}, \quad (\nabla g _j (x ^{\ast})) _{j \in J(x ^{\ast})} }$$

is linearly independent. (This condition is often called the linear independence constraint qualification, or LICQ; note that it holds generically.)
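Regularity can be checked numerically by computing the rank of the matrix whose rows are the listed gradients. The sketch below is a minimal illustration, assuming the gradients at ${ x ^{\ast} }$ are already available as lists of numbers; the helper names `rank` and `is_regular` are hypothetical, and exact `Fraction` arithmetic is used to sidestep tolerance issues in small examples.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a small matrix (list of rows), by Gaussian elimination in exact arithmetic."""
    m = [[Fraction(v) for v in row] for row in rows]
    ncols = len(m[0]) if m else 0
    r = 0  # number of pivots found so far
    for c in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        m[r], m[pivot] = m[pivot], m[r]
        # Eliminate column c from every other row.
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c] / m[r][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

def is_regular(active_gradients):
    """True if the gradients (nabla h_i, and nabla g_j for j in J(x*)) are linearly independent."""
    return rank(active_gradients) == len(active_gradients)

# One active gradient, e.g. nabla g_1 = (-1, -1): regular.
print(is_regular([[-1, -1]]))          # True
# Hypothetical g_1 = x1^2 - x2, g_2 = x2 at the origin: gradients (0, -1), (0, 1) are dependent.
print(is_regular([[0, -1], [0, 1]]))   # False
```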

Then there exist ${ \lambda ^{\ast} \in \mathbb{R} ^{\ell} , }$ ${ \mu ^{\ast} \in \mathbb{R} ^m }$ such that

(Stationarity) ${ Df(x ^{\ast}) + (\lambda ^{\ast}) ^{T} DH(x ^{\ast}) + (\mu ^{\ast}) ^T DG(x ^{\ast}) = 0 . }$

(Dual feasibility) ${ \mu ^{\ast} \succeq 0 . }$

(Complementary slackness) ${ \mu _j ^{\ast} g _j (x ^{\ast}) = 0 }$ for all ${ j \in [m] . }$
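The three conditions are easy to verify numerically at a candidate point. The function below is a minimal sketch (its name and argument layout are hypothetical); it takes the gradients at ${ x ^{\ast} }$ as plain lists and, like the theorem, assumes ${ x ^{\ast} }$ is already feasible. The usage lines check the hypothetical problem of minimizing ${ x _1 ^2 + x _2 ^2 }$ subject to ${ 1 - x _1 - x _2 \leq 0 , }$ ${ - x _1 \leq 0 }$ at ${ x ^{\ast} = (1/2, 1/2) }$ with ${ \mu ^{\ast} = (1, 0) . }$

```python
def satisfies_kkt(grad_f, grads_h, grads_g, g_vals, lam, mu, tol=1e-9):
    """Check stationarity, dual feasibility and complementary slackness at a feasible point.

    grad_f: gradient of f; grads_h / grads_g: gradients of the h_i / g_j;
    g_vals: values g_j(x*); lam, mu: candidate multipliers.
    """
    # Stationarity: grad f + sum_i lam_i grad h_i + sum_j mu_j grad g_j = 0.
    residual = list(grad_f)
    for coeff, grad in list(zip(lam, grads_h)) + list(zip(mu, grads_g)):
        residual = [r + coeff * d for r, d in zip(residual, grad)]
    stationary = all(abs(r) <= tol for r in residual)
    # Dual feasibility: mu >= 0 componentwise.
    dual_feasible = all(m >= -tol for m in mu)
    # Complementary slackness: mu_j * g_j(x*) = 0 for every j.
    complementary = all(abs(m * g) <= tol for m, g in zip(mu, g_vals))
    return stationary and dual_feasible and complementary

# Hypothetical example at x* = (1/2, 1/2): grad f = (1, 1), grad g_1 = (-1, -1), grad g_2 = (-1, 0).
args = dict(grad_f=[1.0, 1.0], grads_h=[], grads_g=[[-1.0, -1.0], [-1.0, 0.0]],
            g_vals=[0.0, -0.5], lam=[])
print(satisfies_kkt(**args, mu=[1.0, 0.0]))   # True
print(satisfies_kkt(**args, mu=[-1.0, 0.0]))  # False (stationarity and sign both fail)
```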

Pf: Since ${ x ^{\ast} }$ is a local minimizer of ${ f }$ over ${ \widetilde{\mathscr{F}}(x ^{\ast}) }$ and a regular point of ${ \widetilde{\mathscr{F}}(x ^{\ast}) , }$ the classical Lagrange multiplier theorem gives ${ \lambda ^{\ast} \in \mathbb{R} ^{\ell} }$ and ${ (\mu _j ^{\ast}) _{j \in J(x ^{\ast})} }$ such that

$${ D f(x ^{\ast}) + \sum _{i = 1} ^{\ell} \lambda _i ^{\ast} D h _i (x ^{\ast}) + \sum _{j \in J(x ^{\ast})} \mu _j ^{\ast} D g _j (x ^{\ast}) = 0 . }$$

Define ${ \mu _j ^{\ast} := 0 }$ for ${ j \in [m] \setminus J(x ^{\ast}) . }$ Together with the multipliers above, this gives a vector ${ \mu ^{\ast} \in \mathbb{R} ^m . }$

By construction ${ \mu _j ^{\ast} = 0 }$ for ${ j \in [m] \setminus J(x ^{\ast}) , }$ and by definition of ${ J(x ^{\ast}) }$ we have ${ g _j (x ^{\ast}) = 0 }$ for ${ j \in J (x ^{\ast}) . }$ Hence

$${ \mu _j ^{\ast} g _j (x ^{\ast}) = 0 \quad \text{ for all } j \in [m] . }$$

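Since the added multipliers vanish, the sum over ${ j \in J(x ^{\ast}) }$ above may be extended to all ${ j \in [m] , }$ which gives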

$${ Df(x ^{\ast}) + (\lambda ^{\ast}) ^{T} DH(x ^{\ast}) + (\mu ^{\ast}) ^T DG(x ^{\ast}) = 0 . }$$

Hence it suffices to show

$${ \text{To show: } \quad \mu ^{\ast} \succeq 0 . }$$

Since ${ \mu _j ^{\ast} = 0 }$ for ${ j \notin J(x ^{\ast}) , }$ it suffices to fix ${ k \in J(x ^{\ast}) }$ and show

$${ \text{To show: } \quad \mu ^{\ast} _k \geq 0 . }$$

Suppose to the contrary that ${ \mu ^{\ast} _k < 0 . }$ Consider ${ \widetilde{\mathscr{F}}(x ^{\ast}) _k , }$ the enlargement of ${ \widetilde{\mathscr{F}}(x ^{\ast}) }$ obtained by removing the constraint ${ g _k = 0 . }$

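Since the remaining gradients are still linearly independent, ${ \widetilde{\mathscr{F}}(x ^{\ast}) _k }$ is a smooth manifold near ${ x ^{\ast} }$ with normal space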

$${ T _{x ^{\ast}} \widetilde{\mathscr{F}}(x ^{\ast}) _k ^{\perp} = \text{span}\left( (\nabla h _i (x ^{\ast})) _{i=1} ^{\ell}, (\nabla g _j (x ^{\ast})) _{j \in J(x ^{\ast}) \setminus \lbrace k \rbrace } \right) . }$$

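Since the full collection of gradients in the regularity assumption is linearly independent, ${ \nabla g _k (x ^{\ast}) }$ is not a linear combination of the other gradients, that is,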

$${ \nabla g _k (x ^{\ast}) \not\in T _{x ^{\ast}} \widetilde{\mathscr{F}}(x ^{\ast}) _k ^{\perp} . }$$

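Hence ${ \nabla g _k (x ^{\ast}) }$ has a nonzero component in the tangent space ${ T _{x ^{\ast}} \widetilde{\mathscr{F}}(x ^{\ast}) _k , }$ and taking ${ v }$ to be the negative of this component gives a direction with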

$${ v \in T _{x ^{\ast}} \widetilde{\mathscr{F}}(x ^{\ast}) _k , \quad \langle \nabla g _k (x ^{\ast}), v \rangle < 0 . }$$
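One explicit choice of ${ v }$: let ${ N }$ (notation introduced here) be the matrix whose columns are ${ \nabla h _i (x ^{\ast}) }$ for ${ i \in [\ell] }$ and ${ \nabla g _j (x ^{\ast}) }$ for ${ j \in J(x ^{\ast}) \setminus \lbrace k \rbrace . }$ By regularity ${ N }$ has full column rank, so ${ N ^T N }$ is invertible and ${ P := I - N (N ^T N) ^{-1} N ^T }$ is the orthogonal projection onto ${ T _{x ^{\ast}} \widetilde{\mathscr{F}}(x ^{\ast}) _k = \ker N ^T . }$ Setting

$${ v := - P \nabla g _k (x ^{\ast}) \quad \text{gives} \quad \langle \nabla g _k (x ^{\ast}), v \rangle = - \langle \nabla g _k (x ^{\ast}), P \nabla g _k (x ^{\ast}) \rangle = - \lVert P \nabla g _k (x ^{\ast}) \rVert ^2 < 0 , }$$

using ${ P = P ^T = P ^2 }$ and ${ P \nabla g _k (x ^{\ast}) \neq 0 , }$ which holds because ${ \nabla g _k (x ^{\ast}) }$ is not in the column space of ${ N . }$ (If ${ N }$ has no columns, take ${ P = I . }$)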

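Since ${ v }$ is tangent to ${ \widetilde{\mathscr{F}}(x ^{\ast}) _k , }$ we have ${ \langle \nabla h _i (x ^{\ast}), v \rangle = 0 }$ for all ${ i }$ and ${ \langle \nabla g _j (x ^{\ast}), v \rangle = 0 }$ for ${ j \in J(x ^{\ast}) \setminus \lbrace k \rbrace ; }$ moreover ${ \mu _j ^{\ast} = 0 }$ for ${ j \notin J(x ^{\ast}) . }$ Hence, by stationarity,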

$${ {\begin{aligned} &\, \langle \nabla f (x ^{\ast}), v \rangle \\ = &\, - \sum _{i=1} ^{\ell} \lambda _i ^{\ast} \langle \nabla h _i (x ^{\ast}) , v \rangle - \sum _{j = 1} ^{m} \mu _j ^{\ast} \langle \nabla g _j (x ^{\ast}), v \rangle \\ = &\, - \mu _k ^{\ast} \langle \nabla g _k (x ^{\ast}), v \rangle . \end{aligned}} }$$

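Since ${ \mu _k ^{\ast} < 0 }$ and ${ \langle \nabla g _k (x ^{\ast}), v \rangle < 0 , }$ the last expression is negative. Hence the direction ${ v }$ satisfies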

$${ v \in T _{x ^{\ast}} \widetilde{\mathscr{F}}(x ^{\ast}) _k , \quad \langle \nabla g _k (x ^{\ast}), v \rangle < 0, \quad \langle \nabla f (x ^{\ast}), v \rangle < 0 . }$$

Hence, if we perturb ${ x ^{\ast} }$ slightly in the direction ${ v }$ (more precisely, along a curve in ${ \widetilde{\mathscr{F}}(x ^{\ast}) _k }$ with initial velocity ${ v }$, for small times ${ t > 0 }$), we stay in ${ \widetilde{\mathscr{F}}(x ^{\ast}) _k \cap \lbrace g _k < 0 \rbrace , }$ which near ${ x ^{\ast} }$ lies in ${ \mathscr{F} , }$ while ${ f }$ strictly decreases. This contradicts the local minimality of ${ x ^{\ast} . }$

Hence ${ \mu _k ^{\ast} \geq 0, }$ as needed. ${ \blacksquare }$
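The contradiction argument can be seen numerically on a small hypothetical problem (not from the reference): minimize ${ f(x) = x _1 + 2 x _2 }$ subject to ${ h(x) = x _2 = 0 }$ and ${ g(x) = x _1 + x _2 \leq 0 . }$ At ${ x _0 = (0, 0) }$ the constraint ${ g }$ binds and stationarity holds with ${ \lambda = -1 , }$ ${ \mu = -1 < 0 , }$ so ${ x _0 }$ should not be a local minimizer. The sketch below (helper names `dot` and `project_out` are hypothetical) builds ${ v }$ by projecting ${ \nabla g }$ onto the tangent space of ${ \lbrace h = 0 \rbrace }$ via Gram-Schmidt and negating it, then checks that moving along ${ v }$ keeps feasibility while decreasing ${ f . }$ Because the constraints here are linear, the straight line ${ x _0 + t v }$ stays on ${ \lbrace h = 0 \rbrace }$ exactly; for curved constraints one would follow a curve instead.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project_out(vec, normals):
    """Remove from vec its component in span(normals): orthonormalize the normals
    by Gram-Schmidt, then subtract the projection onto each basis vector."""
    basis = []
    for w in normals:
        for b in basis:
            c = dot(w, b)
            w = [wi - c * bi for wi, bi in zip(w, b)]
        norm = math.sqrt(dot(w, w))
        if norm > 1e-12:  # skip (numerically) dependent normals
            basis.append([wi / norm for wi in w])
    out = list(vec)
    for b in basis:
        c = dot(out, b)
        out = [oi - c * bi for oi, bi in zip(out, b)]
    return out

# Hypothetical problem: f = x1 + 2*x2, h = x2 = 0, g = x1 + x2 <= 0, candidate x0 = (0, 0).
def f(x):
    return x[0] + 2 * x[1]

def h(x):
    return x[1]

def g(x):
    return x[0] + x[1]

x0 = [0.0, 0.0]
grad_f, grad_h, grad_g = [1.0, 2.0], [0.0, 1.0], [1.0, 1.0]
lam, mu = -1.0, -1.0  # these satisfy grad_f + lam*grad_h + mu*grad_g = 0; note mu < 0

# v = -(component of grad g tangent to {h = 0}).
v = [-c for c in project_out(grad_g, [grad_h])]
print(dot(grad_g, v), dot(grad_f, v), -mu * dot(grad_g, v))  # -1.0 -1.0 -1.0

for t in (0.1, 0.01, 0.001):
    xt = [a + t * b for a, b in zip(x0, v)]
    print(t, h(xt) == 0, g(xt) < 0, f(xt) < f(x0))  # each line ends: True True True
```

The middle printed value ${ \langle \nabla f, v \rangle }$ agrees with ${ - \mu \langle \nabla g, v \rangle , }$ matching the identity used in the proof.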