SUMMARY: Given an (ordered) basis, we can form the Gram matrix $G$ of inner products of basis vectors. An orthonormal basis corresponds to a square matrix $W$ such that $W^T G W = I$: the columns of $W$ are the coefficients, in the original basis, of the orthonormal basis vectors.
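Why the condition $W^TGW=I$ is exactly orthonormality: write the original basis as $b_1,\dots,b_n$, so that $G_{il}=\langle b_i,b_l\rangle$, and let the $j$-th new vector be $w_j=\sum_i W_{ij}\,b_i$. For a real inner product, bilinearity gives
$$\langle w_j,w_k\rangle=\sum_{i,l}W_{ij}\,\langle b_i,b_l\rangle\,W_{lk}=\sum_{i,l}(W^T)_{ji}\,G_{il}\,W_{lk}=(W^TGW)_{jk},$$
so the $w_j$ are orthonormal exactly when $W^TGW=I$. By the same computation, $P^TGP=D$ with $D$ diagonal means the columns of $P$ describe an orthogonal basis whose squared lengths are the diagonal entries of $D$.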

ORIGINAL: Given a symmetric matrix $H,$ there are methods for finding an invertible matrix $P$ such that $P^T HP = D$ is diagonal. In your case, the matrix is the Gram matrix of inner products of basis vectors.

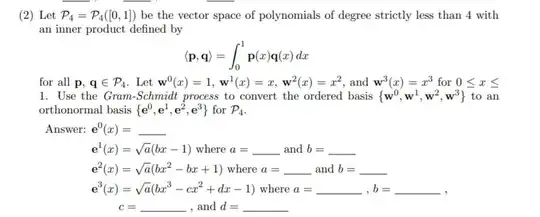

$$
\left(
\begin{array}{rrrr}
1 & \frac{1}{2} & \frac{1}{3} & \frac{1}{4} \\
\frac{1}{2} & \frac{1}{3} & \frac{1}{4} & \frac{1}{5} \\
\frac{1}{3} & \frac{1}{4} & \frac{1}{5} & \frac{1}{6} \\
\frac{1}{4} & \frac{1}{5} & \frac{1}{6} & \frac{1}{7} \\
\end{array}
\right)
$$

This is the Hilbert matrix, or rather the upper-left $4 \times 4$ corner of the infinite Hilbert matrix, constructed in precisely Hilbert's manner: https://en.wikipedia.org/wiki/Hilbert_matrix
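To see where these entries come from: assuming the inner product is $\langle f,g\rangle=\int_0^1 f(x)g(x)\,dx$ (the one that produces this matrix), entry $(i,j)$ for the basis $1,x,x^2,x^3$, indexing from $0$, is $\int_0^1 x^{i+j}\,dx=\frac{1}{i+j+1}$. A minimal sketch that builds it with exact fractions; `gram_monomials` is an illustrative helper name, not from the original.

```python
from fractions import Fraction

def gram_monomials(n):
    """Gram matrix of the basis 1, x, ..., x^(n-1) for <f, g> = integral_0^1 f(x) g(x) dx.

    Assumption: the inner product is integration over [0, 1].
    Entry (i, j), indexing from 0, is integral_0^1 x^(i+j) dx = 1 / (i + j + 1).
    """
    return [[Fraction(1, i + j + 1) for j in range(n)] for i in range(n)]

G = gram_monomials(4)
for row in G:
    print(" ".join(str(entry) for entry in row))
```

Running it prints the rows `1 1/2 1/3 1/4` through `1/4 1/5 1/6 1/7`, matching the matrix above.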

I multiplied by $420$, the least common multiple of the denominators $1, \dots, 7$, to get a matrix of integers, then went through the method I asked about at *reference for linear algebra books that teach reverse Hermite method for symmetric matrices*:

$$ P^T (420H) P = 420D $$

$$\left(
\begin{array}{rrrr}
1 & 0 & 0 & 0 \\
- \frac{ 1 }{ 2 } & 1 & 0 & 0 \\
\frac{ 1 }{ 6 } & - 1 & 1 & 0 \\
- \frac{ 1 }{ 20 } & \frac{ 3 }{ 5 } & - \frac{ 3 }{ 2 } & 1 \\
\end{array}
\right)
\left(
\begin{array}{rrrr}
420 & 210 & 140 & 105 \\
210 & 140 & 105 & 84 \\
140 & 105 & 84 & 70 \\
105 & 84 & 70 & 60 \\
\end{array}
\right)
\left(
\begin{array}{rrrr}
1 & - \frac{ 1 }{ 2 } & \frac{ 1 }{ 6 } & - \frac{ 1 }{ 20 } \\
0 & 1 & - 1 & \frac{ 3 }{ 5 } \\
0 & 0 & 1 & - \frac{ 3 }{ 2 } \\
0 & 0 & 0 & 1 \\
\end{array}
\right)
= \left(
\begin{array}{rrrr}
420 & 0 & 0 & 0 \\
0 & 35 & 0 & 0 \\
0 & 0 & \frac{ 7 }{ 3 } & 0 \\
0 & 0 & 0 & \frac{ 3 }{ 20 } \\
\end{array}
\right)
$$
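This elimination can be reproduced with exact fractions. Below is a minimal sketch of symmetric (congruence) Gaussian elimination: every row operation is immediately followed by the matching column operation, and the column operations are also recorded in $P$. It is not necessarily step for step the "reverse Hermite" procedure referenced above, but a unit upper triangular $P$ with $P^THP$ diagonal is unique (it amounts to the $LDL^T$ factorization), so any correct method should give the same $P$. The function name `congruence_diagonalize` is hypothetical.

```python
from fractions import Fraction
from math import lcm

def congruence_diagonalize(H):
    """Return (P, D) with P unit upper triangular and P^T H P = diag(D).

    Symmetric Gaussian elimination: each row operation R_i -= c * R_k is
    followed by the matching column operation C_i -= c * C_k, and that
    column operation is also applied to P (which starts as the identity).
    Assumes every pivot A[k][k] is nonzero, which holds for a positive
    definite matrix such as a Gram matrix.
    """
    n = len(H)
    A = [row[:] for row in H]
    P = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n):
        for i in range(k + 1, n):
            c = A[i][k] / A[k][k]
            for j in range(n):  # row operation R_i -= c * R_k
                A[i][j] -= c * A[k][j]
            for j in range(n):  # column operation C_i -= c * C_k
                A[j][i] -= c * A[j][k]
            for j in range(n):  # record the same column operation in P
                P[j][i] -= c * P[j][k]
    return P, [A[i][i] for i in range(n)]

n = 4
scale = lcm(*range(1, 2 * n))  # lcm(1, ..., 7) = 420 clears every denominator
H420 = [[Fraction(scale, i + j + 1) for j in range(n)] for i in range(n)]
P, D = congruence_diagonalize(H420)
print("D =", [str(d) for d in D])
for row in P:
    print(" ".join(str(x) for x in row))
```

It prints `D = ['420', '35', '7/3', '3/20']` followed by the rows of the upper triangular factor, matching the display above. Calling it on the unscaled Hilbert matrix gives the same $P$, with $D$ divided by $420$, because each multiplier `c` is a ratio and does not change under scaling. (`math.lcm` with several arguments needs Python 3.9 or later.)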

Dividing back by the same $420$, we find

$$\left(
\begin{array}{rrrr}
1 & 0 & 0 & 0 \\
- \frac{ 1 }{ 2 } & 1 & 0 & 0 \\
\frac{ 1 }{ 6 } & - 1 & 1 & 0 \\
- \frac{ 1 }{ 20 } & \frac{ 3 }{ 5 } & - \frac{ 3 }{ 2 } & 1 \\
\end{array}
\right)
\left(
\begin{array}{rrrr}
1 & \frac{1}{2} & \frac{1}{3} & \frac{1}{4} \\
\frac{1}{2} & \frac{1}{3} & \frac{1}{4} & \frac{1}{5} \\
\frac{1}{3} & \frac{1}{4} & \frac{1}{5} & \frac{1}{6} \\
\frac{1}{4} & \frac{1}{5} & \frac{1}{6} & \frac{1}{7} \\
\end{array}
\right)
\left(
\begin{array}{rrrr}
1 & - \frac{ 1 }{ 2 } & \frac{ 1 }{ 6 } & - \frac{ 1 }{ 20 } \\
0 & 1 & - 1 & \frac{ 3 }{ 5 } \\
0 & 0 & 1 & - \frac{ 3 }{ 2 } \\
0 & 0 & 0 & 1 \\
\end{array}
\right)
= \left(
\begin{array}{rrrr}
1 & 0 & 0 & 0 \\
0 & \frac{ 1 }{ 12 } & 0 & 0 \\
0 & 0 & \frac{ 1 }{ 180 } & 0 \\
0 & 0 & 0 & \frac{ 1 }{ 2800 } \\
\end{array}
\right)
$$
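As a cross-check, assuming (as the entries of the Gram matrix indicate) the inner product $\langle f,g\rangle=\int_0^1 f(x)g(x)\,dx$, each diagonal entry of $D$ should be the squared norm of the matching column of $P$ read as a polynomial in the basis $(1,x,x^2,x^3)$. The second column is $x-\tfrac12$, and
$$\int_0^1\left(x-\tfrac12\right)^2dx=\left[\tfrac13\left(x-\tfrac12\right)^3\right]_0^1=\tfrac1{24}+\tfrac1{24}=\tfrac1{12}.$$
The entries also agree with the scaled computation: $\tfrac{35}{420}=\tfrac1{12}$, $\tfrac{7/3}{420}=\tfrac1{180}$ and $\tfrac{3/20}{420}=\tfrac1{2800}$.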

To get the identity matrix, we now multiply on the far left and far right by the diagonal matrix

$$
\left(
\begin{array}{rrrr}
1 & 0 & 0 & 0 \\
0 & 2 \sqrt 3 & 0 & 0 \\
0 & 0 & 6 \sqrt 5 & 0 \\
0 & 0 & 0 & 20 \sqrt 7 \\
\end{array}
\right)
$$
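Its entries are $1/\sqrt{d_i}$ for the diagonal entries $d_i$ of $D$, since $1=\sqrt1$, $2\sqrt3=\sqrt{12}$, $6\sqrt5=\sqrt{180}$ and $20\sqrt7=\sqrt{2800}$. Writing $S$ for this diagonal matrix, $S^T=S$ and
$$S\,(P^THP)\,S=(PS)^T\,H\,(PS)=SDS=\operatorname{diag}\left(1,\ \tfrac{12}{12},\ \tfrac{180}{180},\ \tfrac{2800}{2800}\right)=I,$$
so $W=PS$ satisfies $W^THW=I$.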

Finally, the desired orthonormal basis is given by the COLUMNS of

$$
\left(
\begin{array}{rrrr}
1 & - \frac{ 1 }{ 2 } & \frac{ 1 }{ 6 } & - \frac{ 1 }{ 20 } \\
0 & 1 & - 1 & \frac{ 3 }{ 5 } \\
0 & 0 & 1 & - \frac{ 3 }{ 2 } \\
0 & 0 & 0 & 1 \\
\end{array}
\right)
\left(
\begin{array}{rrrr}
1 & 0 & 0 & 0 \\
0 & 2 \sqrt 3 & 0 & 0 \\
0 & 0 & 6 \sqrt 5 & 0 \\
0 & 0 & 0 & 20 \sqrt 7 \\
\end{array}
\right)
$$

as coefficients for the original ordered basis $(1,x,x^2, x^3).$
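Multiplying out (each column of the triangular factor is scaled by the matching diagonal entry) gives the matrix $W$ explicitly:
$$W=\left(
\begin{array}{rrrr}
1 & -\sqrt 3 & \sqrt 5 & -\sqrt 7 \\
0 & 2\sqrt 3 & -6\sqrt 5 & 12\sqrt 7 \\
0 & 0 & 6\sqrt 5 & -30\sqrt 7 \\
0 & 0 & 0 & 20\sqrt 7 \\
\end{array}
\right)$$
Reading a column against $(1,x,x^2,x^3)$, for example the third, gives $\sqrt5-6\sqrt5\,x+6\sqrt5\,x^2=\sqrt5\,(6x^2-6x+1)$.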

These give

$$ \color{red}{ 1,} \; \;
\color{blue}{\sqrt 3 \cdot (2x-1) ,} \; \;
\color{green}{\sqrt 5 \cdot (6 x^2 -6x+1),} \; \;
\color{magenta}{\sqrt 7 \cdot (20 x^3 - 30 x^2 + 12 x -1)} $$
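The orthonormality of these four polynomials can be checked exactly with rational arithmetic. A minimal sketch, assuming the inner product $\int_0^1 f(x)g(x)\,dx$ that produces the Hilbert Gram matrix; the helper names `poly_mul` and `integrate_01` are illustrative. To avoid irrational numbers, it integrates the integer-coefficient polynomials and checks that the off-diagonal integrals vanish and that each diagonal integral times the square of its prefactor ($1, 3, 5, 7$) equals $1$.

```python
from fractions import Fraction

# Integer-coefficient polynomials from the answer (coefficients listed from
# the constant term upward), each paired with the square of its sqrt prefactor.
polys = [
    ([1], 1),                # 1
    ([-1, 2], 3),            # sqrt(3) * (2x - 1)
    ([1, -6, 6], 5),         # sqrt(5) * (6x^2 - 6x + 1)
    ([-1, 12, -30, 20], 7),  # sqrt(7) * (20x^3 - 30x^2 + 12x - 1)
]

def poly_mul(a, b):
    """Coefficients of the product of two polynomials (constant term first)."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def integrate_01(p):
    """Exact value of the integral of p(x) over [0, 1] (assumed inner product)."""
    return sum(Fraction(c, k + 1) for k, c in enumerate(p))

n = len(polys)
raw = [[integrate_01(poly_mul(polys[i][0], polys[j][0])) for j in range(n)]
       for i in range(n)]

# <sqrt(s_i) q_i, sqrt(s_j) q_j> = sqrt(s_i * s_j) * integral(q_i * q_j), so it
# suffices to check: off-diagonal integrals are 0 and s_i * integral(q_i^2) == 1.
orthonormal = all(
    (polys[i][1] * raw[i][i] == 1) if i == j else (raw[i][j] == 0)
    for i in range(n) for j in range(n)
)
print(orthonormal)  # True
```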