I recently came across this problem through a friend I occasionally help with stats, and it has stumped me completely. I have searched online extensively, but what I found I either did not understand or did not understand well enough to explain to my friend. As a last resort I have made an account here hoping for some help.

From what I have read, the Bayes decision boundary is the line (or, with a single predictor, the point or points) that separates the regions where an observation is assigned to one class or the other. However, I don't understand how to actually find it. Bayesian statistics is also very new to me, so I am struggling, and therefore my friend is too. Thank you for reading; I hope someone who understands this well can explain it in a simple, concise way.

Consider a binary classification problem $Y \in \{0, 1\}$ with one predictor $X$.

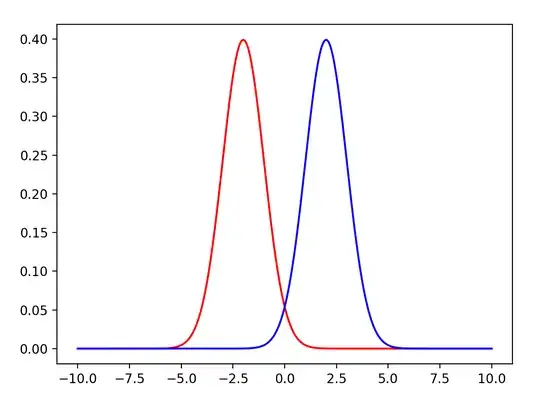

The prior probability of being in class 0 is $\Pr(Y = 0) = \pi_0 = 0.69$, and the density function for $X$ in class 0 is a standard normal,

$$f_0(x) = Normal(0, 1) = (1/\sqrt{2\pi})\exp(-0.5x^2).$$

The density function for $X$ in class 1 is also normal, but with $\mu = 1$ and $\sigma^2 = 0.5$, i.e.

$$f_1(x) = Normal(1, 0.5) = (1/\sqrt{\pi})\exp(-(x-1)^2).$$

(a) Plot $\pi_0f_0(x)$ and $\pi_1f_1(x)$ in the same figure.

(b) Find the Bayes decision boundary.

(c) Using the Bayes classifier, classify the observation $X = 3$. Justify your prediction.

(d) What is the probability that an observation with $X = 2$ is in class 1?
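
For anyone who wants to check an answer numerically, here is a minimal Python sketch of parts (a) to (d). It rests on two assumptions that are not spelled out in the problem: that $\pi_1 = 1 - \pi_0 = 0.31$ because there are only two classes, and that the Bayes classifier assigns the class with the larger $\pi_k f_k(x)$, so the boundary sits where $\pi_0 f_0(x) = \pi_1 f_1(x)$. Taking logs of that equality gives a quadratic in $x$, which the sketch solves directly. The function names are made up for illustration.

```python
import math

PI0 = 0.69        # prior for class 0, given in the problem
PI1 = 1.0 - PI0   # assumption: two classes only, so the priors sum to 1


def f0(x):
    """Class 0 density: Normal(mean 0, variance 1)."""
    return math.exp(-0.5 * x**2) / math.sqrt(2 * math.pi)


def f1(x):
    """Class 1 density: Normal(mean 1, variance 0.5)."""
    return math.exp(-(x - 1) ** 2) / math.sqrt(math.pi)


def bayes_boundary():
    """Part (b): points where PI0*f0(x) == PI1*f1(x).

    log(PI0*f0) - log(PI1*f1) = 0.5*x^2 - 2*x + (1 + c),
    with c = log(PI0/PI1) - 0.5*log(2), so we solve that quadratic.
    """
    c = math.log(PI0 / PI1) - 0.5 * math.log(2)
    a, b, k = 0.5, -2.0, 1.0 + c
    root = math.sqrt(b * b - 4 * a * k)
    return ((-b - root) / (2 * a), (-b + root) / (2 * a))


def classify(x):
    """Part (c): pick the class with the larger prior-weighted density."""
    return 1 if PI1 * f1(x) > PI0 * f0(x) else 0


def posterior_class1(x):
    """Part (d): Pr(Y = 1 | X = x) by Bayes' theorem."""
    w0, w1 = PI0 * f0(x), PI1 * f1(x)
    return w1 / (w0 + w1)


def plot_weighted_densities():
    """Part (a): plot both weighted densities (needs matplotlib)."""
    import matplotlib.pyplot as plt

    xs = [i / 100 for i in range(-400, 501)]
    plt.plot(xs, [PI0 * f0(x) for x in xs], label="pi_0 f_0(x)")
    plt.plot(xs, [PI1 * f1(x) for x in xs], label="pi_1 f_1(x)")
    for b in bayes_boundary():
        plt.axvline(b, linestyle="--", color="gray")  # boundary points
    plt.xlabel("x")
    plt.legend()
    plt.show()
```

Calling `bayes_boundary()` returns two crossing points rather than one, because the two classes have different variances. `classify(3)` and `posterior_class1(2)` then give numerical checks for parts (c) and (d), and `plot_weighted_densities()` draws the figure for part (a) with the boundary points marked.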

Any help at all would be greatly appreciated!