Show that the random variables $X$ and $Y$ are uncorrelated but not independent

The given joint density is

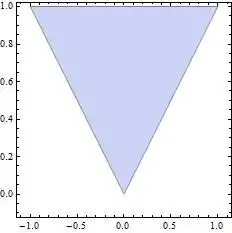

$$f(x,y)=\begin{cases}1, & -y<x<y \text{ and } 0<y<1,\\ 0, & \text{otherwise.}\end{cases}$$
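As a quick sanity check on the density, $f$ can be coded directly and integrated over the bounding box $[-1,1]\times[0,1]$ with a midpoint rule. This is a minimal sketch: the function names and the grid size `n` are illustrative choices, not part of the problem. The support is the triangle with vertices $(0,0)$, $(-1,1)$ and $(1,1)$, whose area is $1$, so the result should be close to $1$.

```python
def f(x: float, y: float) -> float:
    """Joint density: 1 on the triangle -y < x < y, 0 < y < 1; 0 elsewhere."""
    return 1.0 if 0.0 < y < 1.0 and -y < x < y else 0.0


def total_mass(n: int = 400) -> float:
    """Midpoint-rule approximation of the integral of f over [-1, 1] x [0, 1]."""
    hx, hy = 2.0 / n, 1.0 / n
    s = 0.0
    for i in range(n):
        x = -1.0 + (i + 0.5) * hx  # midpoint of the i-th cell in x
        for j in range(n):
            y = (j + 0.5) * hy  # midpoint of the j-th cell in y
            s += f(x, y)
    return s * hx * hy


print(total_mass())  # close to 1
```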

My main concern is how we should calculate the marginal density $f_1(x)$. Is it this:

$f_1(x)=\int f(x,y)\,dy = \int_{-x}^{1}dy + \int_{x}^{1}dy = (1+x)+(1-x)=2$ for all $-1<x<1$

Or should we do this?

$$f_1(x)=\begin{cases}\displaystyle\int_{-x}^{1}dy = 1+x, & -1<x<0,\\[4pt] \displaystyle\int_{x}^{1}dy = 1-x, & 0\le x<1.\end{cases}$$
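One way to decide between the two candidates is a numerical check: integrate $f(x,y)$ over $y$ at a few fixed values of $x$ with a midpoint rule and compare. This is a minimal sketch; the helper names `f`, `marginal_x`, `piecewise_candidate` and the grid size are illustrative, not from the problem. Note also that any marginal density must integrate to $1$ over $(-1,1)$: the constant $2$ would integrate to $4$, while the piecewise form integrates to $1$.

```python
def f(x: float, y: float) -> float:
    """Joint density: 1 on the triangle -y < x < y, 0 < y < 1; 0 elsewhere."""
    return 1.0 if 0.0 < y < 1.0 and -y < x < y else 0.0


def marginal_x(x: float, n: int = 20_000) -> float:
    """Midpoint-rule approximation of f_1(x): integral of f(x, y) over y in (0, 1)."""
    h = 1.0 / n
    return sum(f(x, (j + 0.5) * h) for j in range(n)) * h


def piecewise_candidate(x: float) -> float:
    """Second candidate: 1 + x on (-1, 0), 1 - x on [0, 1), zero elsewhere."""
    if -1.0 < x < 0.0:
        return 1.0 + x
    if 0.0 <= x < 1.0:
        return 1.0 - x
    return 0.0


for x in (-0.75, -0.25, 0.0, 0.5, 0.9):
    # numerical marginal vs. piecewise candidate (the constant candidate would be 2)
    print(x, round(marginal_x(x), 4), round(piecewise_candidate(x), 4))
```

The numerical values track the piecewise form $1-|x|$, which reflects that for a fixed $x$ the variable $y$ runs over $|x|<y<1$.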

If the second form is correct, how do I show that $X$ and $Y$ are not independent?

I could simply argue that the support of the joint density is not a product set (a rectangle), but I want to show explicitly that $f(x,y)\neq f_1(x)f_2(y)$.
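Assuming the piecewise marginal $f_1(x)=1-|x|$ on $(-1,1)$ and $f_2(y)=2y$ on $(0,1)$, a point outside the triangle where both marginals are positive is enough: there $f=0$ but $f_1f_2>0$, and since the product is continuous and $f$ vanishes on the open set $|x|>y$, the inequality holds on a region of positive area. The sketch below (function names are illustrative) evaluates both sides at $(x,y)=(0.5,\,0.25)$.

```python
def f(x: float, y: float) -> float:
    """Joint density: 1 on the triangle -y < x < y, 0 < y < 1; 0 elsewhere."""
    return 1.0 if 0.0 < y < 1.0 and -y < x < y else 0.0


def f1(x: float) -> float:
    """Marginal of X (assumed piecewise form): 1 - |x| on (-1, 1)."""
    return 1.0 - abs(x) if -1.0 < x < 1.0 else 0.0


def f2(y: float) -> float:
    """Marginal of Y: 2y on (0, 1)."""
    return 2.0 * y if 0.0 < y < 1.0 else 0.0


# (0.5, 0.25) lies outside the triangle (|x| > y), but both marginals are positive there.
x, y = 0.5, 0.25
print(f(x, y), f1(x) * f2(y))  # 0.0 0.25
```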

Also, for anyone who needs the further calculations:

$f_2(y) = \int f(x,y)\,dx = \int_{-y}^{y}dx = 2y$ for $0<y<1$

$\mu_2= \int y f_2(y)\,dy = \int_{0}^{1}2y^2\,dy = \frac23$

$\sigma_2^2 = \int_0^1 y^2 f_2(y)\,dy - \left(\frac23\right)^2 = \int_0^1 2y^3\,dy - \frac49 = \frac12 - \frac49 = \frac1{18}$
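The values of $\mu_2$ and $\sigma_2^2$ can be cross-checked without going through $f_2$: a midpoint rule over the bounding box gives $E(Y)$ and $E(Y^2)$ directly from the joint density $f(x,y)$. This is a sketch; the function name `moments_of_y` and the grid size are illustrative.

```python
def f(x: float, y: float) -> float:
    """Joint density: 1 on the triangle -y < x < y, 0 < y < 1; 0 elsewhere."""
    return 1.0 if 0.0 < y < 1.0 and -y < x < y else 0.0


def moments_of_y(n: int = 400) -> tuple[float, float]:
    """Midpoint-rule estimates of E(Y) and E(Y^2) over [-1, 1] x [0, 1]."""
    hx, hy = 2.0 / n, 1.0 / n
    m1 = m2 = 0.0
    for i in range(n):
        x = -1.0 + (i + 0.5) * hx
        for j in range(n):
            y = (j + 0.5) * hy
            w = f(x, y) * hx * hy  # approximate probability mass of this grid cell
            m1 += y * w
            m2 += y * y * w
    return m1, m2


mu2, ey2 = moments_of_y()
print(round(mu2, 4), round(ey2 - mu2**2, 4))  # approx 2/3 and 1/18
```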

$E(XY)= \int_{y=0}^{y=1}\int_{x=-y}^{x=y} xy\, f(x,y)\,dx\,dy =\int_{y=0}^{y=1}\int_{x=-y}^{x=y} xy\,dx\,dy$, which seems to be $0$, but I am not sure about this either.
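For reference, the inner integral has an odd integrand in $x$ over an interval symmetric about $x=0$, so it vanishes for every $y$. Written out as a short derivation, with $E(X)$ handled the same way and $E(Y)=\frac23$ taken from the calculation of $\mu_2$:

$$
\begin{aligned}
E(XY) &= \int_0^1 y\left(\int_{-y}^{y} x\,dx\right)dy = \int_0^1 y\left[\frac{x^2}{2}\right]_{-y}^{y}dy = \int_0^1 y\cdot 0\,dy = 0,\\
E(X) &= \int_0^1 \left(\int_{-y}^{y} x\,dx\right)dy = 0,\\
\operatorname{Cov}(X,Y) &= E(XY) - E(X)\,E(Y) = 0 - 0\cdot\tfrac23 = 0.
\end{aligned}
$$

So $\operatorname{Cov}(X,Y)=0$, i.e. $X$ and $Y$ are uncorrelated.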