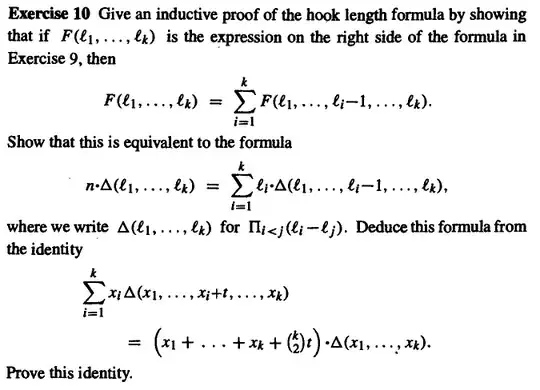

The difference of the RHS and the LHS is a polynomial of degree at most $\binom{k}{2}+1$ in $x_1, x_2, \ldots, x_k, t$.

First, note that if $x_i=x_j$ for some $i\neq j$, then the equation is true. Indeed, the RHS is 0, and every term on the LHS is 0 except $x_i\Delta(x_1, x_2, \ldots, x_i+t, \ldots, x_k)$ and $x_j\Delta(x_1, x_2, \ldots, x_j+t, \ldots, x_k)$, which cancel. To see this, compare the two products factor by factor. The factors involving neither $x_i$ nor $x_j$ are identical. The factors involving exactly one of them pair up, for each other index $m$, as $(x_i+t-x_m)(x_m-x_j)$ in the first product and $(x_i-x_m)(x_m-(x_j+t))$ in the second, and these are equal because $x_i=x_j$. The only remaining factors are $x_i+t-x_j=t$ and $x_i-(x_j+t)=-t$, which are opposites. Since the coefficients $x_i$ and $x_j$ are also equal, the two terms sum to 0.
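As a small illustration, take $k=3$ with $x_1=x_2=a$ and $x_3=b$, assuming $\Delta(y_1,\ldots,y_k)=\prod_{i<j}(y_i-y_j)$ (the definition is in the problem statement image, so this form is an assumption here). The third term vanishes because $\Delta(a,a,b+t)=0$, and the first two are

$$a\,\Delta(a+t,a,b)=a\cdot t\,(a+t-b)(a-b),\qquad a\,\Delta(a,a+t,b)=a\cdot(-t)\,(a-b)(a+t-b),$$

which sum to 0, matching the RHS $(2a+b+3t)\,\Delta(a,a,b)=0$.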

Therefore, $x_i-x_j$ is a factor of the difference for every pair $i<j$, giving $\binom{k}{2}$ pairwise coprime factors.

When $t=0$, the equation is true by the distributive law. So $t$ is also a factor of the difference of the LHS and the RHS.

Since the difference LHS-RHS has degree at most $\binom{k}{2}+1$ and is divisible by $t\prod_{i<j}{(x_i-x_j)}$, which already has degree $\binom{k}{2}+1$, it must be of the form $Ct\prod_{i<j}{(x_i-x_j)}$ for some constant $C$. To prove that $C=0$, it suffices to show that the equation is true for some values of $x_1,x_2,\ldots,x_k,t$ where no two $x_i$ are equal and $t\neq0$.

I will choose $x_i=i$ and $t=-1$.

$$\textrm{LHS}=\sum_{i=1}^{k}i\Delta(1,\ldots, i-1,i-1,i+1,\ldots,k),$$

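and every term with $i\geq 2$ is 0, because the value $i-1$ then appears twice among the arguments. The only surviving term is the first, which is $\Delta(0,2,\ldots,k).$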

$$\textrm{RHS}=\left(1+2+\ldots+k+\binom{k}{2}(-1)\right)\cdot\Delta(1,2,\ldots,k)=k\Delta(1,2,\ldots,k).$$
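The coefficient simplifies by a routine computation, spelled out here for completeness:

$$1+2+\ldots+k-\binom{k}{2}=\frac{k(k+1)}{2}-\frac{k(k-1)}{2}=k.$$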

So, the goal is to show

$$\Delta(0,2,\ldots,k)=k\Delta(1,2,\ldots,k).$$

To do this, note that all the factors of $\Delta$ not involving the first argument are the same on both sides. On the LHS, the factors involving the first argument are $-2,-3,\ldots,-k$, with product $(-1)^{k-1}k!$. On the RHS, they are $-1,-2,\ldots,-(k-1)$, with product $(-1)^{k-1}(k-1)!$. The ratio of these products is $k$, so we are done.
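As an optional sanity check (not part of the proof), the identity can be tested numerically. The sketch below assumes that the statement in the image reads $\sum_{i=1}^{k} x_i\Delta(x_1,\ldots,x_i+t,\ldots,x_k)=\left(x_1+\ldots+x_k+\binom{k}{2}t\right)\Delta(x_1,\ldots,x_k)$ with $\Delta(y_1,\ldots,y_k)=\prod_{i<j}(y_i-y_j)$, which is what the computations above suggest. The function names `delta`, `lhs`, `rhs`, and `check_identity` are chosen here for illustration.

```python
from itertools import combinations
from math import comb
import random


def delta(xs):
    """Product of (xs[i] - xs[j]) over all i < j (assumed definition of Delta)."""
    result = 1
    for a, b in combinations(xs, 2):
        result *= a - b
    return result


def lhs(xs, t):
    """Sum over i of x_i * Delta(x_1, ..., x_i + t, ..., x_k)."""
    total = 0
    for i, x in enumerate(xs):
        shifted = list(xs)
        shifted[i] = x + t  # shift only the i-th argument
        total += x * delta(shifted)
    return total


def rhs(xs, t):
    """(x_1 + ... + x_k + C(k, 2) * t) * Delta(x_1, ..., x_k)."""
    k = len(xs)
    return (sum(xs) + comb(k, 2) * t) * delta(xs)


def check_identity(trials=200, max_k=6, seed=0):
    """Compare both sides exactly on random integer inputs."""
    rng = random.Random(seed)
    for _ in range(trials):
        k = rng.randint(1, max_k)
        xs = [rng.randint(-10, 10) for _ in range(k)]
        t = rng.randint(-10, 10)
        if lhs(xs, t) != rhs(xs, t):
            return False
    return True
```

Calling `check_identity()` compares the two sides in exact integer arithmetic, so there is no rounding to worry about. The special case used in the proof corresponds to `lhs(list(range(1, k + 1)), -1)` and `rhs(list(range(1, k + 1)), -1)`.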