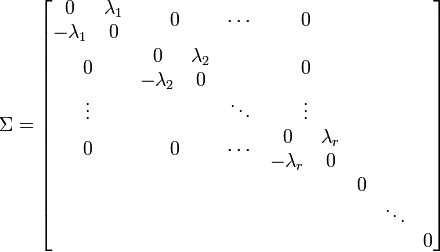

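I tried to prove that a real antisymmetric matrix can be brought by an orthogonal transformation to the block-diagonal form

$$\Sigma = \operatorname{diag}\left(\begin{pmatrix}0 & \lambda_1\\ -\lambda_1 & 0\end{pmatrix}, \dots, \begin{pmatrix}0 & \lambda_r\\ -\lambda_r & 0\end{pmatrix}, 0, \dots, 0\right),$$

where $\pm i\lambda_1, \pm i\lambda_2, \dots, \pm i\lambda_r$ are the nonzero eigenvalues of the matrix,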

which is a statement I saw on Wikipedia at http://en.wikipedia.org/wiki/Antisymmetric_matrix.
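To make the target form concrete, here is a minimal pure-Python sketch that builds $\operatorname{diag}(B_1, \dots, B_r, 0, \dots, 0)$ with $B_k = \begin{pmatrix}0 & \lambda_k\\ -\lambda_k & 0\end{pmatrix}$ and checks that it is antisymmetric and that each block has eigenvalues $\pm i\lambda_k$. The helper names `canonical_form`, `is_antisymmetric` and `block_char_poly` are hypothetical, introduced only for this illustration.

```python
def canonical_form(lams, n):
    """Return the n x n block-diagonal matrix diag(B_1, ..., B_r, 0, ..., 0),
    where B_k = [[0, lam_k], [-lam_k, 0]] and r = len(lams)."""
    if 2 * len(lams) > n:
        raise ValueError("need n >= 2 * len(lams)")
    S = [[0.0] * n for _ in range(n)]
    for k, lam in enumerate(lams):
        i = 2 * k  # top-left corner of the k-th 2x2 block
        S[i][i + 1] = lam
        S[i + 1][i] = -lam
    return S


def is_antisymmetric(M):
    """Check M^T == -M entrywise."""
    n = len(M)
    return all(M[i][j] == -M[j][i] for i in range(n) for j in range(n))


def block_char_poly(lam, z):
    """det(B - z I) for B = [[0, lam], [-lam, 0]], which equals z**2 + lam**2."""
    return (0 - z) * (0 - z) - lam * (-lam)


lams = [2.0, 5.0]
S = canonical_form(lams, 5)  # two 2x2 blocks plus one zero row/column
print(is_antisymmetric(S))  # True
# Each block has eigenvalues +i*lam and -i*lam: both are roots of z**2 + lam**2.
print(all(block_char_poly(l, z) == 0 for l in lams for z in (1j * l, -1j * l)))  # True
```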

I also know that a real antisymmetric matrix is normal ($AA^T = A^TA = -A^2$), so it can be diagonalized by a unitary transformation, and I found a unitary transformation taking that diagonal matrix to the required form.
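For a single $2\times 2$ block, one possible such unitary (a choice made here for illustration, not necessarily the one I found) is $V_0$ below; the identity $B_k V_0 = V_0 D_k$ together with $V_0$ being unitary gives the claim:

$$
D_k = \begin{pmatrix} i\lambda_k & 0\\ 0 & -i\lambda_k\end{pmatrix},\qquad
B_k = \begin{pmatrix} 0 & \lambda_k\\ -\lambda_k & 0\end{pmatrix},\qquad
V_0 = \frac{1}{\sqrt2}\begin{pmatrix} 1 & 1\\ i & -i\end{pmatrix},
$$

$$
B_k V_0 = \frac{1}{\sqrt2}\begin{pmatrix} i\lambda_k & -i\lambda_k\\ -\lambda_k & -\lambda_k\end{pmatrix} = V_0 D_k
\quad\Longrightarrow\quad V_0 D_k V_0^{*} = B_k .
$$

Ordering the diagonal entries as $i\lambda_1, -i\lambda_1, \dots, i\lambda_r, -i\lambda_r, 0, \dots, 0$ and taking $V = \operatorname{diag}(V_0, \dots, V_0, I)$ then handles the whole matrix.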

So by composing the two transformations (first diagonalizing, then taking the diagonal matrix to the required form), I get a unitary transformation taking the real antisymmetric matrix to another real matrix.
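In symbols (the names $W$, $V$, $D$ are introduced here only for illustration): if $W$ is the unitary that diagonalizes $A$ and $V$ is the unitary taking the diagonal matrix $D$ to $\Sigma$, then

$$
A = W D W^{*},\qquad \Sigma = V D V^{*}
\quad\Longrightarrow\quad
\Sigma = V W^{*} A \, W V^{*} = U A U^{*},\qquad U = V W^{*},
$$

and $U$ is unitary because it is a product of unitary matrices.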

My question is whether this transformation must be a real matrix. If so, I can deduce that the unitary transformation is in fact an orthogonal transformation.
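That last deduction is immediate, since for a real matrix the conjugate transpose is just the transpose:

$$
U \in \mathbb{R}^{n\times n},\quad U^{*}U = I \quad\Longrightarrow\quad U^{T}U = U^{*}U = I,
$$

i.e. $U$ is orthogonal.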

So is this true?

Is a unitary transformation taking a real matrix to another real matrix necessarily an orthogonal transformation?
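A simple family showing the answer is no (assumed here for illustration; not necessarily the counterexample from the comment referred to in the EDIT) is $U = e^{i\theta} I$: the phase cancels in $U A U^{*}$, so a real $A$ is taken to a real matrix, while $U$ itself is not real when $\theta$ is not a multiple of $\pi$. The following pure-Python check uses hypothetical helpers `matmul`, `conj_transpose`, `is_real` and `close` on list-of-lists matrices.

```python
import cmath


def matmul(A, B):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def conj_transpose(M):
    """Conjugate transpose M^*."""
    return [[M[j][i].conjugate() for j in range(len(M))] for i in range(len(M[0]))]


def is_real(M, tol=1e-12):
    """True if every entry has (numerically) zero imaginary part."""
    return all(abs(complex(x).imag) < tol for row in M for x in row)


def close(A, B, tol=1e-12):
    """Entrywise comparison up to a tolerance."""
    return all(abs(a - b) < tol for ra, rb in zip(A, B) for a, b in zip(ra, rb))


theta = 0.7  # any angle that is not a multiple of pi
phase = cmath.exp(1j * theta)
U = [[phase, 0j], [0j, phase]]  # U = e^{i theta} I: unitary but not real
A = [[0.0, 3.0], [-3.0, 0.0]]   # a real antisymmetric matrix

B = matmul(matmul(U, A), conj_transpose(U))  # B = U A U^*
I2 = [[1, 0], [0, 1]]

print(close(matmul(conj_transpose(U), U), I2))  # True: U is unitary
print(is_real(U))                               # False: U is not orthogonal
print(is_real(B), close(B, A))                  # True True: U A U^* = A is real
```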

EDIT: After receiving a counterexample in the comments, I'm adding:

Alternatively, if it is not necessarily orthogonal, must there exist an orthogonal transformation taking one matrix to the other?
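For unitaries of the special form $U = e^{i\theta} Q$ with $Q$ real orthogonal (an assumption covering only phase-type counterexamples, not the general case), the answer is clearly yes: $U A U^{*} = Q A Q^{T}$, so $Q$ does the same job. A minimal pure-Python check, with hypothetical helper names and an arbitrarily chosen rotation $Q$ and real matrix $A$:

```python
import cmath
import math


def matmul(A, B):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def conj_transpose(M):
    """Conjugate transpose M^* (equals the plain transpose for real M)."""
    return [[M[j][i].conjugate() for j in range(len(M))] for i in range(len(M[0]))]


def close(A, B, tol=1e-12):
    """Entrywise comparison up to a tolerance."""
    return all(abs(a - b) < tol for ra, rb in zip(A, B) for a, b in zip(ra, rb))


theta, phi = 0.7, 0.3
Q = [[math.cos(phi), -math.sin(phi)],
     [math.sin(phi), math.cos(phi)]]                        # real orthogonal (a rotation)
U = [[cmath.exp(1j * theta) * q for q in row] for row in Q]  # e^{i theta} Q: unitary, not real
A = [[1.0, 2.0], [-4.0, 3.0]]                                # an arbitrary real matrix

via_U = matmul(matmul(U, A), conj_transpose(U))  # U A U^*
via_Q = matmul(matmul(Q, A), conj_transpose(Q))  # Q A Q^T
print(close(via_U, via_Q))  # True: the phase e^{i theta} cancels
```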