Sorry for the long question, and thanks for taking the time to read it and for your answers!

Context: Let $B_n\sim\operatorname{Binomial}(n,p)$ be the number of successes in $n$ Bernoulli trials with success probability $p\in(0,1)$. Let $$\tilde B_n=\frac{B_n-np}{\sqrt{np(1-p)}}$$ be the standardized random variable and let $N\sim\text{N}(0,1)$ have the standard normal distribution. Let $\epsilon_n(x)$ be the absolute difference between the cumulative distribution functions of $\tilde B_n$ and $N$ at $x$, i.e. $$\epsilon_n(x) = \left|\mathcal P(\tilde B_n \le x)-\mathcal P(N \le x)\right|.$$
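To make the definition concrete, here is a minimal Python sketch (standard library only) that evaluates $\epsilon_n(x)$ at a single point. The helper names `normal_cdf`, `binom_pmf`, `standardized_binom_cdf` and `epsilon` are hypothetical. $\mathcal P(N\le x)$ is computed from `math.erf`, and the binomial probabilities are computed in log space with `math.lgamma` so that large $n$ does not overflow. When $x$ sits exactly on a jump point $(k-np)/\sqrt{np(1-p)}$, floating-point rounding may place it one step off.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal CDF P(N <= x), via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def binom_pmf(k: int, n: int, p: float) -> float:
    """P(B_n = k), computed in log space to avoid overflow for large n."""
    log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
               + k * math.log(p) + (n - k) * math.log1p(-p))
    return math.exp(log_pmf)


def standardized_binom_cdf(x: float, n: int, p: float) -> float:
    """P(B~_n <= x) = P(B_n <= np + x * sqrt(np(1-p)))."""
    sigma = math.sqrt(n * p * (1 - p))
    k_max = math.floor(n * p + x * sigma)  # largest k with (k - np)/sigma <= x
    if k_max < 0:
        return 0.0
    k_max = min(k_max, n)
    return min(1.0, sum(binom_pmf(k, n, p) for k in range(k_max + 1)))


def epsilon(x: float, n: int, p: float) -> float:
    """epsilon_n(x) = |P(B~_n <= x) - P(N <= x)|."""
    return abs(standardized_binom_cdf(x, n, p) - normal_cdf(x))


# Example: the error at a few points for the parameters used in the plots.
for x in (-1.5, -0.5, 0.0, 0.5, 1.5):
    print(x, epsilon(x, 762, 0.336))
```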

The central limit theorem shows that $\lim_{n\to\infty}\sup_{x\in\mathbb R}\epsilon_n(x) = 0$, i.e. $\epsilon_n\to 0$ uniformly in $x$ (uniformly because the limiting distribution function is continuous). In all my numerical calculations, the supremum of $\epsilon_n$ is attained for $x\in[-1,1]$ (see below).
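Since $\mathcal P(\tilde B_n\le x)$ only changes at the points $x_k=(k-np)/\sqrt{np(1-p)}$, $k=0,\dots,n$, and $\mathcal P(N\le x)$ is increasing, the supremum can be computed exactly by checking, at each $x_k$, both the value of the CDF and its left limit; no grid in $x$ is needed. A minimal sketch under that reduction (Python, standard library only; `sup_error` is a hypothetical name, and this is not necessarily how the numbers in this post were computed):

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal CDF P(N <= x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def sup_error(n: int, p: float) -> tuple[float, float, bool]:
    """Return (sup_x epsilon_n(x), jump point x_k, attained?).

    Between consecutive jump points the binomial CDF is constant while the
    normal CDF increases, so the supremum of |F - Phi| is reached either at
    a jump point x_k (value F(x_k), attained) or as x -> x_k from the left
    (value F(x_{k-1}), a limit that is not attained).
    """
    sigma = math.sqrt(n * p * (1 - p))
    log_p, log_q = math.log(p), math.log1p(-p)
    best, best_x, attained = -1.0, 0.0, True
    cdf_left = 0.0  # F(x_k^-) = P(B_n <= k - 1); zero to the left of x_0
    for k in range(n + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                   + k * log_p + (n - k) * log_q)
        cdf_right = min(1.0, cdf_left + math.exp(log_pmf))  # F(x_k) = P(B_n <= k)
        x_k = (k - n * p) / sigma
        phi = normal_cdf(x_k)
        for value, is_attained in ((abs(cdf_right - phi), True),
                                   (abs(cdf_left - phi), False)):
            if value > best:
                best, best_x, attained = value, x_k, is_attained
        cdf_left = cdf_right
    return best, best_x, attained


value, x_star, attained = sup_error(762, 0.336)
kind = "attained" if attained else "left limit only"
print(f"sup eps_n = {value:.5f} at x = {x_star:.4f} ({kind})")
```

Checking both one-sided values at every $x_k$ costs $O(n)$ per pair $(n,p)$ and also reports whether the supremum is actually attained.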

My question: Is there a proof that the maximum of $\epsilon_n(x) = \left|\mathcal P(\tilde B_n \le x)-\mathcal P(N \le x)\right|$ is always attained in the interval $[-1,1]$, i.e. that the point $x$ where $\epsilon_n(x)$ is maximal satisfies $-1\le x\le 1$? Is this true?

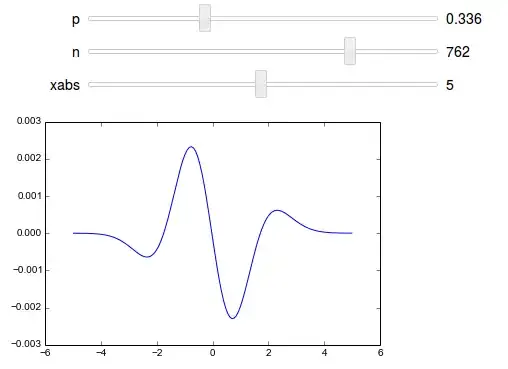

Some diagrams: Here is a plot of $f(x)=\mathcal P(\tilde B_n \le x)-\mathcal P(N \le x)$ for $p=0.336$ and $n=762$:

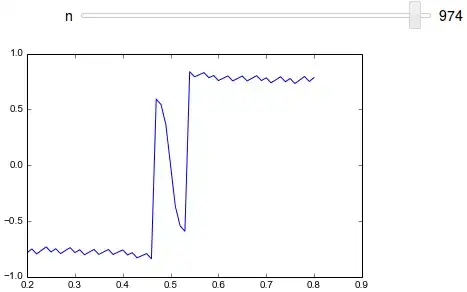
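The shape of $f$ is determined by its values at the jump points: $f$ jumps up by $\mathcal P(B_n=k)$ at $x_k=(k-np)/\sqrt{np(1-p)}$ and decreases in between, because $\mathcal P(N\le x)$ is increasing. The sketch below (Python, standard library only; `f_at_jumps` is a hypothetical helper) tabulates the left limit $f(x_k^-)$ and the value $f(x_k)$ for the parameters of this plot, $n=762$ and $p=0.336$, and reports the largest of these in absolute value.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal CDF P(N <= x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def f_at_jumps(n: int, p: float) -> list[tuple[float, float, float]]:
    """For each jump point x_k = (k - np)/sigma return (x_k, f(x_k^-), f(x_k)),
    where f(x) = P(B~_n <= x) - P(N <= x)."""
    sigma = math.sqrt(n * p * (1 - p))
    rows = []
    cdf_left = 0.0  # P(B_n <= k - 1)
    for k in range(n + 1):
        pmf = math.exp(math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                       + k * math.log(p) + (n - k) * math.log1p(-p))
        cdf_right = cdf_left + pmf  # P(B_n <= k)
        x_k = (k - n * p) / sigma
        phi = normal_cdf(x_k)
        rows.append((x_k, cdf_left - phi, cdf_right - phi))
        cdf_left = cdf_right
    return rows


rows = f_at_jumps(762, 0.336)
# Largest |f| over both one-sided values at every jump point.
x_star, value = max(((x, max(abs(left), abs(right))) for x, left, right in rows),
                    key=lambda item: item[1])
print(f"max |f| = {value:.5f} at the jump point x = {x_star:.4f}")
```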

Here is a plot showing the position of the maximal error, i.e. the point $x$ where $\epsilon_n(x)$ is maximal. The x-axis shows $p\in(0,1)$; the y-axis shows the computed point $x$ at which $\epsilon_n(x)$ is maximal:

As you can see, the maximal error is always attained for $-1\le x \le 1$.
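A scan of this kind can be sketched as follows. The post does not state which $n$ the position plot uses, so `N_TRIALS = 100` and the grid of $p$ values are hypothetical choices, as is the helper name `argsup_error`. The sketch uses the fact that the binomial CDF is constant between the jump points $x_k=(k-np)/\sqrt{np(1-p)}$, so it suffices to compare the CDF value and its left limit with $\mathcal P(N\le x_k)$ at each $x_k$.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal CDF P(N <= x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def argsup_error(n: int, p: float) -> float:
    """Jump point x_k at which sup_x epsilon_n(x) is attained or approached from the left."""
    sigma = math.sqrt(n * p * (1 - p))
    best, best_x, cdf_left = -1.0, 0.0, 0.0
    for k in range(n + 1):
        pmf = math.exp(math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                       + k * math.log(p) + (n - k) * math.log1p(-p))
        cdf_right = cdf_left + pmf
        x_k = (k - n * p) / sigma
        phi = normal_cdf(x_k)
        value = max(abs(cdf_right - phi), abs(cdf_left - phi))
        if value > best:
            best, best_x = value, x_k
        cdf_left = cdf_right
    return best_x


N_TRIALS = 100  # hypothetical n; not stated for the position plot
ps = [i / 200 for i in range(1, 200)]  # p = 0.005, 0.010, ..., 0.995
locations = [argsup_error(N_TRIALS, p) for p in ps]
outside = [(p, x) for p, x in zip(ps, locations) if abs(x) > 1]
print(f"min x = {min(locations):.3f}, max x = {max(locations):.3f}")
print("p values with location outside [-1, 1]:", outside)
```

Repeating the scan for several values of `N_TRIALS` gives a quick numerical check of the conjecture, though of course not a proof.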

Note: I know that, because $x\mapsto\mathcal P(\tilde B_n \le x)$ is a step function, $\epsilon_n$ is not continuous, and thus $\sup_{x\in\mathbb R}\epsilon_n(x)$ may not be attained. But, as you can see in the diagram, the preimage under $\epsilon_n$ of a sufficiently small neighborhood of $\sup_{x\in\mathbb R}\epsilon_n(x)$ lies in $[-1,1]$.
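One way to make the attainment issue precise (a reformulation, not a proof of the conjecture): write $F_n(x)=\mathcal P(\tilde B_n\le x)$, $\Phi(x)=\mathcal P(N\le x)$ and $x_k=(k-np)/\sqrt{np(1-p)}$ for $k=0,\dots,n$. On each interval $[x_k,x_{k+1})$ the function $F_n$ is constant while $\Phi$ is continuous and increasing, so $|F_n-\Phi|$ is largest at one of the two ends of the interval (the same holds on $(-\infty,x_0)$, where $F_n=0$, and on $[x_n,\infty)$, where $F_n=1$). Hence
$$\sup_{x\in\mathbb R}\epsilon_n(x)=\max_{0\le k\le n}\max\Bigl\{\bigl|F_n(x_k)-\Phi(x_k)\bigr|,\ \bigl|F_n(x_k^-)-\Phi(x_k)\bigr|\Bigr\},\qquad F_n(x_k^-)=\mathcal P(B_n\le k-1),$$
where $F_n(x_k^-)$ is the left limit. The supremum is attained exactly when the maximum is achieved by a term of the first kind; a term of the second kind is only approached as $x\to x_k^-$. So, numerically, the question comes down to where the maximizing jump point $x_k$ lies.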

This question is also related to my follow-up question "Normal approximation of tail probability in binomial distribution", which describes the motivation behind this question.