I'm sharing an exercise I have attempted, and I'd like your opinion/critique because this is my first time studying kinetic theory and stochastic processes and I want to know whether I'm doing it right. In other words, can you check my answer and tell me whether everything is fine?

Greetings!

Consider two stochastic variables $X,\,Y\,$, both defined on the interval $\,(-\infty,+\infty),\,$ and suppose they obey a two-dimensional Gaussian distribution, i.e., $$P_{2}(x,y)=\frac{1}{2\pi\sigma^{2}}e^{-(x^{2}+y^{2})/(2\,\sigma^{2})}$$ Now define $\,s=x^{2}+y^{2}\,$ and let $\,\phi\,$ be the angle formed by the vector with components $(x,y)$ and the positive semi-axis $\,OX\,$ (i.e., the usual polar angle).

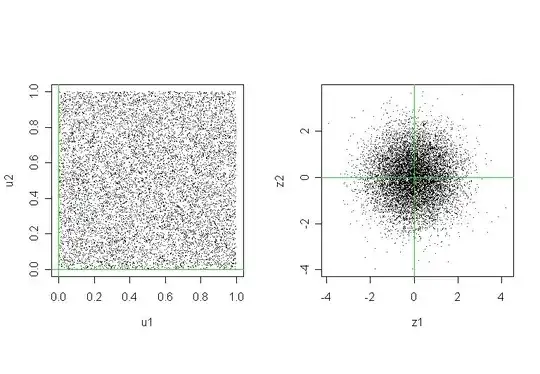

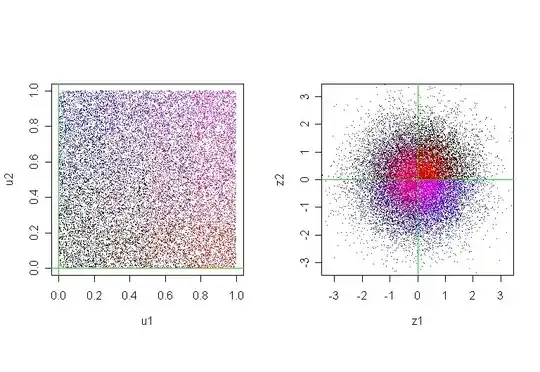

Discuss how a two-dimensional vector whose components obey a Gaussian distribution can be generated on a computer from a standard random number generator that returns numbers uniformly distributed between 0 and 1.
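For reference, here is a sketch of the change of variables that the answer below relies on, writing $x=\sqrt{s}\cos\phi$, $y=\sqrt{s}\sin\phi$ with $s\ge 0$ and $0\le\phi<2\pi$. With $r=\sqrt{s}$ we have $dx\,dy=r\,dr\,d\phi$ and $ds=2r\,dr$, so

$$P_{2}(x,y)\,dx\,dy=\frac{1}{2\pi\sigma^{2}}e^{-s/(2\sigma^{2})}\,\frac{1}{2}\,ds\,d\phi=\left(\frac{1}{2\pi}\,d\phi\right)\left(\frac{1}{2\sigma^{2}}e^{-s/(2\sigma^{2})}\,ds\right)$$

The joint density factorizes, so $s$ and $\phi$ are independent: $\phi$ is uniform on $[0,2\pi)$ and $s$ is exponentially distributed with mean $2\sigma^{2}$.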

What I have done:

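Take a standard random number generator and let $n_{1}, n_{2}$ be two successive outputs, uniformly distributed between 0 and 1 (assuming the generator never returns exactly 1). Define $s$ through its cumulative distribution: $$\int_{0}^{s} \frac{e^{-z/(2\sigma^{2})}}{2\sigma^{2}}\,dz=1-e^{-s/(2\sigma^{2})}=n_{1}$$ Solving for $s$ gives $s=-2\sigma^{2}\ln(1-n_{1})$. The minus sign matters: since $0<1-n_{1}\le 1$, the logarithm is non-positive and $s\ge 0$, so no absolute value is needed.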
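A quick numerical check of this inverse-CDF step, sketched in Python under the assumption that the generator behaves like `random.Random.random()` (uniform on $[0,1)$). It uses $s=-2\sigma^{2}\ln(1-n_{1})$; the helper name `sample_s`, the seed and the value of $\sigma$ are illustrative choices, not part of the exercise. The sampled $s$ should have mean $2\sigma^{2}$, and its empirical CDF should match $1-e^{-s/(2\sigma^{2})}$.

```python
import math
import random


def sample_s(sigma: float, rng: random.Random) -> float:
    """Draw s = x^2 + y^2 by inverting F(s) = 1 - exp(-s / (2 sigma^2))."""
    n1 = rng.random()  # uniform on [0, 1), so 1 - n1 lies in (0, 1] and log is finite
    return -2.0 * sigma**2 * math.log(1.0 - n1)


rng = random.Random(12345)  # arbitrary fixed seed for reproducibility
sigma = 1.5
samples = [sample_s(sigma, rng) for _ in range(200_000)]

# The exponential distribution of s has mean 2 sigma^2.
mean_s = sum(samples) / len(samples)
print(f"empirical mean = {mean_s:.3f}, expected 2*sigma^2 = {2 * sigma**2:.3f}")

# Compare the empirical CDF with the exact one at a single point s0.
s0 = 3.0
empirical_cdf = sum(s <= s0 for s in samples) / len(samples)
exact_cdf = 1.0 - math.exp(-s0 / (2 * sigma**2))
print(f"F({s0}): empirical = {empirical_cdf:.3f}, exact = {exact_cdf:.3f}")
```

With the original expression $2\sigma^{2}\ln|1-n_{1}|$ every sample would come out negative, which is an easy way to spot the missing sign.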

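Then take $\phi=2\pi n_{2}$, which is uniformly distributed in $[0,2\pi)$.

Let $$x=\sqrt{s}\cos\phi$$ $$y=\sqrt{s}\sin\phi.$$ Since $s$ and $\phi$ are drawn independently, with $s$ exponentially distributed with mean $2\sigma^{2}$ and $\phi$ uniform, this reproduces the joint density of $(s,\phi)$ implied by $P_{2}(x,y)$. Hence the points $(x,y)$ generated this way have the prescribed Gaussian distribution.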
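The full recipe is essentially the Box–Muller transform. Below is a minimal Python sketch using only the standard library, assuming `random.Random.random()` (uniform on $[0,1)$) plays the role of the "standard random number generator"; the function name `gaussian_pair`, the seed and $\sigma=2$ are hypothetical choices for illustration. It then checks empirically that both components have zero mean, variance $\sigma^{2}$, and zero covariance.

```python
import math
import random
from typing import Tuple


def gaussian_pair(sigma: float, rng: random.Random) -> Tuple[float, float]:
    """Return (x, y) with independent N(0, sigma^2) components.

    s = x^2 + y^2 is drawn by inverting its CDF, the polar angle phi is
    uniform on [0, 2*pi), and the pair is converted back to Cartesian form.
    """
    n1 = rng.random()  # uniform on [0, 1)
    n2 = rng.random()  # second, independent uniform on [0, 1)
    s = -2.0 * sigma**2 * math.log(1.0 - n1)  # exponential with mean 2 sigma^2
    phi = 2.0 * math.pi * n2                   # uniform on [0, 2*pi)
    r = math.sqrt(s)
    return r * math.cos(phi), r * math.sin(phi)


def mean(values):
    return sum(values) / len(values)


rng = random.Random(2024)  # arbitrary fixed seed for reproducibility
sigma = 2.0
pairs = [gaussian_pair(sigma, rng) for _ in range(200_000)]
xs = [x for x, _ in pairs]
ys = [y for _, y in pairs]

# Sample moments: expect means ~0, variances ~sigma^2, covariance ~0.
mx, my = mean(xs), mean(ys)
var_x = mean([(x - mx) ** 2 for x in xs])
var_y = mean([(y - my) ** 2 for y in ys])
cov_xy = mean([(x - mx) * (y - my) for x, y in pairs])
print(f"means:      {mx:.3f}, {my:.3f}   (expected 0)")
print(f"variances:  {var_x:.3f}, {var_y:.3f}   (expected {sigma**2})")
print(f"covariance: {cov_xy:.3f}   (expected 0)")
```

Passing `1.0 - n1` to the logarithm keeps its argument in $(0,1]$, so a generator output of exactly 0 cannot produce $\log 0$. For everyday use Python's `random.gauss(mu, sigma)` already returns normal deviates; the sketch above only mirrors the hand derivation.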