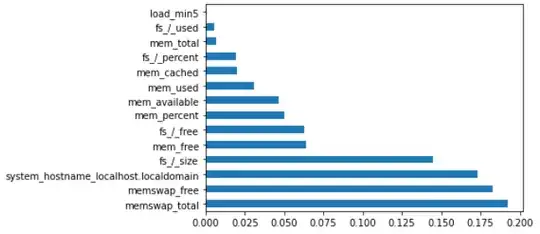

I am building a classification model on machine performance data, and it seems to overfit no matter what I change. The dataset is quite large, so I'll share only the final feature importances and cross-validation scores after feature selection.

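    # Preparing the data
    from sklearn.model_selection import train_test_split

    X = df.drop('target', axis='columns')
    y = df['target']

    # Hold out 25% of the rows, keeping the class ratio the same in both splits
    X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.75, random_state=10, stratify=y)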

I then cross-validate as follows:

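    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import KFold, cross_val_score

    logreg = LogisticRegression()
    kf = KFold(n_splits=25)  # 25 consecutive folds, no shuffling

    # Note: this scores on the full X and y, not only on the training split
    score = cross_val_score(logreg, X, y, cv=kf)

    print("Cross Validation Scores: {}".format(score))
    print("Average Cross Validation score : {}".format(score.mean()))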

Here are the results that I get:

> Cross Validation Scores [1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 0.94814175 1. 1. 1. 1. 1. 1.]

> Average Cross Validation score : 0.9979256698357821
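One thing that may be worth checking with scores like these is what each fold actually contains. `KFold` without `shuffle=True` cuts the rows into consecutive blocks, so if the rows happen to be ordered by class, some folds could hold a single class. Below is a minimal sketch of such a check. The helper `fold_class_counts` and the synthetic labels are assumptions made for illustration and are not part of the code above.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold


def fold_class_counts(y, splitter):
    """Return, for each test fold, a dict mapping class label -> row count."""
    y = np.asarray(y)
    # The splitters used here only need the number of rows from X,
    # so a placeholder array is enough.
    placeholder_X = np.zeros((len(y), 1))
    counts = []
    for _, test_idx in splitter.split(placeholder_X, y):
        labels, n = np.unique(y[test_idx], return_counts=True)
        counts.append(dict(zip(labels.tolist(), n.tolist())))
    return counts


# Synthetic labels sorted by class (illustration only, not the real data).
y_demo = np.array([0] * 50 + [1] * 50)

plain = fold_class_counts(y_demo, KFold(n_splits=5))
strat = fold_class_counts(
    y_demo, StratifiedKFold(n_splits=5, shuffle=True, random_state=10)
)

print("KFold:          ", plain)
print("StratifiedKFold:", strat)
```

On the real data, the same helper could be called with `y` and `KFold(n_splits=25)` to see whether any fold is dominated by one class.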

When I fit a random forest instead, the accuracy is 100%. What could be the problem?

P.S. The classes were imbalanced, so I randomly undersampled the majority class.
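For reference, random undersampling of the majority class can be sketched as follows. This is not the exact code used for the results above. The helper name `undersample_majority` and the synthetic arrays are hypothetical. A common recommendation is to apply this kind of resampling to the training split only, so that the test data keeps its original class ratio.

```python
import numpy as np


def undersample_majority(X, y, random_state=10):
    """Randomly drop rows so every class has as many rows as the rarest one."""
    X = np.asarray(X)
    y = np.asarray(y)
    rng = np.random.default_rng(random_state)

    labels, counts = np.unique(y, return_counts=True)
    n_keep = counts.min()

    keep = []
    for label in labels:
        idx = np.flatnonzero(y == label)
        # Sample without replacement so no row is duplicated.
        keep.append(rng.choice(idx, size=n_keep, replace=False))

    # Shuffle the kept indices so the result is not grouped by class.
    keep = rng.permutation(np.concatenate(keep))
    return X[keep], y[keep]


# Synthetic imbalanced data (illustration only): 90 rows of class 0, 10 of class 1.
X_demo = np.arange(200).reshape(100, 2)
y_demo = np.array([0] * 90 + [1] * 10)

X_bal, y_bal = undersample_majority(X_demo, y_demo)
print(np.bincount(y_bal))
```

The final shuffle matters when the result is later passed to an unshuffled `KFold`, since otherwise the rows would come out grouped by class.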

UPDATE: I resolved this by removing some features from the final dataset. I retrained the models on a few features at a time, which let me identify the ones that caused the "over-fitting". In short, better feature selection did the trick.
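A rough sketch of that kind of feature screening is shown below, simplified to one feature at a time. It is an illustration under assumptions, not the exact procedure used here: the helper `score_features_individually`, the column names, and the synthetic data are all made up for the example. A single column that reaches near-perfect accuracy on its own is a candidate for closer inspection.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score


def score_features_individually(X, y, n_splits=5, random_state=10):
    """Cross-validate a model on each column alone; return mean accuracy per column."""
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=random_state)
    scores = {}
    for column in X.columns:
        model = LogisticRegression(max_iter=1000)
        # X[[column]] keeps a 2-D frame with just this one feature.
        fold_scores = cross_val_score(model, X[[column]], y, cv=cv)
        scores[column] = fold_scores.mean()
    return pd.Series(scores).sort_values(ascending=False)


# Synthetic data (illustration only): "leaky" is the target plus tiny noise,
# the other two columns are unrelated to the target.
rng = np.random.default_rng(10)
y_demo = pd.Series(rng.integers(0, 2, size=400), name="target")
X_demo = pd.DataFrame({
    "noise_a": rng.normal(size=400),
    "noise_b": rng.normal(size=400),
    "leaky": y_demo.to_numpy() + rng.normal(scale=0.01, size=400),
})

single_feature_scores = score_features_individually(X_demo, y_demo)
print(single_feature_scores)
```

In this synthetic example the `leaky` column should rank first by a wide margin, while the noise columns stay close to chance.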