In my limited exposure, it appears that "successful" machine learning algorithms tend to have a very large VC dimension. For example, XGBoost is famous for being used to win the Higgs Boson Kaggle competition, and Deep Learning has made many headlines. Both paradigms are based on models that can be scaled up to shatter any dataset, and both can incorporate boosting, which increases the VC dimension.

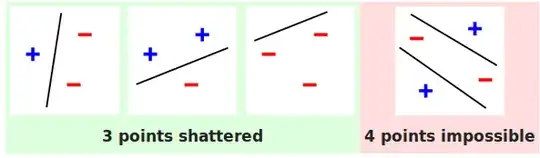

According to VC dimension analysis, a large VC dimension is supposedly a bad thing: it allows a model to overfit, or memorize, the data instead of generalizing. For example, if my model can shatter every dataset, e.g. by drawing a rectangle around every point, then it cannot be extrapolated beyond that dataset; my grid of rectangles tells me nothing about points outside the grid. The larger the VC dimension, the more likely the model is to shatter a dataset instead of generalizing, and thus it will perform badly once it is exposed to new data outside the training set.
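A minimal Python sketch of the "rectangle around every point" idea, assuming a hypothetical `BoxMemorizer` class (not from any library) that draws a tiny box around each positive training point and labels everything else 0. As long as the points are farther apart than the box width, this class can realize any labeling, i.e. it shatters the set. Trained on randomly labeled 2-D points, it fits the training set perfectly but predicts 0 for every unseen point, so its test accuracy is just the share of test labels that happen to be 0.

```python
import random


class BoxMemorizer:
    """Hypothetical 'rectangle around every point' classifier (illustrative
    assumption, not a library class): one tiny box per positive training point."""

    def __init__(self, half_width=1e-6):
        self.half_width = half_width
        self.positives = []

    def fit(self, X, y):
        # Remember every positive point; each one gets its own tiny box.
        self.positives = [x for x, label in zip(X, y) if label == 1]
        return self

    def predict_one(self, x):
        # Predict 1 if x falls inside any box, otherwise 0.
        for p in self.positives:
            if all(abs(a - b) <= self.half_width for a, b in zip(x, p)):
                return 1
        return 0

    def predict(self, X):
        return [self.predict_one(x) for x in X]


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


rng = random.Random(0)
# Random labels: there is no structure to learn, so any perfect fit is memorization.
X_train = [(rng.random(), rng.random()) for _ in range(200)]
y_train = [rng.randint(0, 1) for _ in X_train]
X_test = [(rng.random(), rng.random()) for _ in range(200)]
y_test = [rng.randint(0, 1) for _ in X_test]

model = BoxMemorizer().fit(X_train, y_train)
train_acc = accuracy(y_train, model.predict(X_train))
test_acc = accuracy(y_test, model.predict(X_test))
print(f"train accuracy: {train_acc:.2f}")  # 1.00: every training point is fit
print(f"test accuracy:  {test_acc:.2f}")   # near 0.5: the boxes say nothing about new points
```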

To return to my original point, many of the most "successful" machine learning algorithms share a large VC dimension. Yet, according to machine learning theory, this is a bad thing.
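For reference, the "theory" here is usually a bound like Vapnik's VC generalization bound. One commonly quoted form, for binary classification with 0-1 loss, a hypothesis class of VC dimension $d$, and $n$ i.i.d. training samples (intended for the regime $d \ll n$), states that with probability at least $1 - \eta$ every hypothesis $h$ in the class satisfies

$$
R(h) \le \hat{R}_n(h) + \sqrt{\frac{d\left(\ln\frac{2n}{d} + 1\right) - \ln\frac{\eta}{4}}{n}}
$$

where $R(h)$ is the true (test) error and $\hat{R}_n(h)$ is the training error. The square-root term grows with $d$ and shrinks with $n$, which is the formal sense in which a large VC dimension is "bad" for a fixed amount of data.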

So I am left confused by this significant discrepancy between theory and practice. I know the saying "In theory there is no difference between theory and practice; in practice there is," and practitioners tend to brush aside such discrepancies if they get the results they want. A similar question was asked about Deep Learning, and the consensus was that deep networks do have a large VC dimension, but that this doesn't matter because they score very well on benchmark datasets.

But it is also said that "there is nothing more practical than good theory," which suggests that such a large discrepancy matters for practical applications.

My question, then: is it true that the only thing that really matters is a low error on test datasets, even when the theoretical analysis of the algorithm says it will generalize poorly? Are overfitting and memorizing, instead of generalizing, not that big of a deal in practice if we have hundreds of billions of samples? Is there a known reason why the theory does not matter in practice? What is the point of the theory, then?
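To put rough numbers on the "hundreds of billions of samples" question, here is a small Python sketch that evaluates the square-root term of one commonly quoted form of Vapnik's VC bound, R(h) <= R_emp(h) + sqrt((d(ln(2n/d) + 1) - ln(eta/4)) / n), which holds with probability at least 1 - eta for binary classification when d is much smaller than n. The function name `vc_slack`, the choice eta = 0.05, and the (d, n) pairs are illustrative assumptions; the d values are not measured VC dimensions of XGBoost or of any particular network.

```python
import math


def vc_slack(d, n, eta=0.05):
    """Square-root term of Vapnik's VC bound (assumed form):
    sqrt((d * (ln(2n/d) + 1) - ln(eta/4)) / n).
    It bounds test error minus training error with probability >= 1 - eta.
    Returns math.inf when n <= d, where the bound says nothing useful."""
    if n <= d:
        return math.inf
    return math.sqrt((d * (math.log(2 * n / d) + 1) - math.log(eta / 4)) / n)


# Illustrative (hypothetical) VC dimensions and training-set sizes.
for d in (10**3, 10**6, 10**9):
    for n in (10**6, 10**9, 10**11):
        print(f"d={d:>13,}  n={n:>15,}  slack={vc_slack(d, n):.4f}")
```

For example, with d = 10^3 and n = 10^6 the guaranteed gap is about 0.09, with d = 10^9 and n = 10^11 it is still about 0.25, and whenever n <= d the bound is vacuous. The bound is a worst-case guarantee over the whole hypothesis class, so it only promises a small gap when n is large compared with d.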

Or are there important cases where a very large VC dimension can come back to bite me, even if my model has great scores? In what real-world scenario is a low error combined with a large VC dimension a bad thing, even with hundreds of billions of samples in the training data?