I'm trying to understand the nature of true randomness. I'm building an RNG using a radioactive source. Basically, I'm measuring the time between consecutive decays, which in theory should be unpredictable. The measured times show a Gaussian-like distribution, as I predicted. My understanding is that true randomness requires a uniform distribution, that is, all values are equally likely. How can I transform a Gaussian distribution into a uniform distribution, and is it really necessary to have uniformly distributed random bits to have a good generator?

5 Answers

You can just follow the instructions on HotBits, which is exactly what you're trying to build. It's been running for years and is the only radioactive TRNG on the internet. It goes into great depth regarding underlying nuclear physics, sample distributions and extractor mechanism.

Although not really within the realms of strict cryptography, there is also a good counting analysis here from Caltech. In a nutshell, at low count rates the number of clicks per counting interval follows a Poisson distribution. But as the mean count ($\mu$) increases, it morphs into a nice symmetrical Gaussian shape, which is typical behaviour of a Poisson distribution once $\mu \gtrsim 20$.

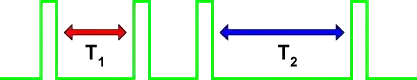
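To get a feel for how quickly the Poisson shape approaches a Gaussian, here is a minimal sketch that compares the Poisson probabilities with a Gaussian of the same mean and variance. The function names and the chosen values of $\mu$ are my own illustration, not taken from the Caltech write-up.

```python
import math

def poisson_pmf(k: int, mu: float) -> float:
    """P(N = k) for a Poisson count with mean mu (computed in log space)."""
    return math.exp(-mu + k * math.log(mu) - math.lgamma(k + 1))

def normal_pdf(x: float, mu: float) -> float:
    """Gaussian density with mean mu and variance mu, matching the Poisson."""
    return math.exp(-(x - mu) ** 2 / (2 * mu)) / math.sqrt(2 * math.pi * mu)

def max_abs_gap(mu: float) -> float:
    """Largest pointwise gap between the Poisson pmf and the matching Gaussian."""
    upper = int(mu + 10 * math.sqrt(mu)) + 10  # far enough into the tail
    return max(abs(poisson_pmf(k, mu) - normal_pdf(k, mu)) for k in range(upper + 1))

# The gap shrinks as the mean count grows.
for mu in (2, 20, 100):
    print(mu, max_abs_gap(mu))
```

The printed gap should shrink steadily as $\mu$ grows, which is the "morphing" described above.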

If you can get a good tube and a decent/safe source, it'll be pretty simple if you follow the instructions. Don't worry about the tick distribution. Simply detect the ticks and follow the de-biasing procedure in the sections featuring this:-

It's a reversing von Neumann extractor. It's written up so I won't repeat the details. If tube dead times and saturation levels are met (so that there is no autocorrelation), you'll get uniformly distributed random bits popping out of the de-biasing algorithm. So that we can see HotBits' quality, I downloaded their 91 Mbit data set and ran ent over it:-

Entropy = 1.000000 bits per bit.

Optimum compression would reduce the size of this 91750400 bit file by 0 percent.

Chi square distribution for 91750400 samples is 0.05, and randomly would exceed this value 81.93 percent of the times.

Arithmetic mean value of data bits is 0.5000 (0.5 = random).

Monte Carlo value for Pi is 3.141486168 (error 0.00 percent).

Serial correlation coefficient is -0.000773 (totally uncorrelated = 0.0).

They have other more stringent testing on their site, but it's a clean pass at this level. Chi square also answers your "is it really necessary to have uniformly distributed random bits to have a good generator?" mini question: yes, the bits do have to be uniformly random for cryptographic use.
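For orientation, the de-biasing idea can be sketched roughly as follows. This is my own reading of the scheme, not HotBits' code: I'm assuming bits come from comparing two successive inter-tick intervals, that ties are discarded, and that the sense of the comparison is reversed on alternate output bits. The function name and the simulated intervals are illustrative only.

```python
import random
from typing import Iterable, Iterator

def interval_pair_bits(intervals: Iterable[float]) -> Iterator[int]:
    """Turn non-overlapping pairs of intervals (t1, t2) into bits.

    Assumed scheme: emit 1 if t1 < t2, else 0; drop ties; invert the
    result on every other emitted bit to cancel any systematic skew.
    """
    it = iter(intervals)
    flip = False
    for t1 in it:
        t2 = next(it, None)
        if t2 is None:       # odd interval left over, nothing to compare with
            return
        if t1 == t2:         # tie carries no information, discard the pair
            continue
        bit = 1 if t1 < t2 else 0
        yield bit ^ 1 if flip else bit
        flip = not flip

# Simulated stand-in for tube data: exponential gaps at an arbitrary rate.
rng = random.Random(42)
intervals = [rng.expovariate(3.0) for _ in range(100_000)]
bits = list(interval_pair_bits(intervals))
print(len(bits), sum(bits) / len(bits))
```

Note that the shape of the interval distribution never enters the calculation. Only the ordering of two independent measurements matters, which is why you don't need to worry about the tick distribution.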

So do what HotBits does.

Please note this though:-

the increased sensitivity of the RM-80 and its immunity from saturation increased the rate of HotBits generation from about 30 bytes per second to more than 100.

It's not fast. And there's all that high voltage stuff.


Radioactive decay is normally modelled as an exponential process, assuming the environmental parameters are constant. This means that, assuming stationarity, the time between decays is an exponential random variable, with $$ \Pr[T_i>t]=\exp(-\lambda t) $$ for a constant $\lambda.$

If you know $\lambda$, you can divide time into intervals of length $T_0$ such that $$\exp(-\lambda T_0)=1/2$$ (that is, $T_0=\ln 2/\lambda$) and output a one if there is a decay in the interval and a zero otherwise.

There are many reasons this is probably too simplistic, plus the issue of the environmental interference (natural or malicious) with the source statistics.

So just treat $\lambda$ as unknown, pick some $T_0$ by observing the source decay rate, apply an unbiasing algorithm, and then pass sufficiently long blocks of the output through a secure hash function to be sure.

If the source decay rate changes significantly you can adjust $T_0.$
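The steps above can be sketched as follows, under several assumptions of mine: the decay timestamps are simulated rather than measured, the unbiasing algorithm is a plain von Neumann extractor, the hash is SHA-256, and the 1024-bit block size is an arbitrary illustration rather than a vetted parameter. All function names are hypothetical.

```python
import hashlib
import math
import random
from typing import Iterator, List

def slot_bits(decay_times: List[float], t0: float) -> List[int]:
    """One bit per complete slot of length t0: 1 if any decay landed in it."""
    if not decay_times:
        return []
    n_slots = int(max(decay_times) // t0)  # drop the incomplete final slot
    bits = [0] * n_slots
    for t in decay_times:
        idx = int(t // t0)
        if idx < n_slots:
            bits[idx] = 1
    return bits

def von_neumann(bits: List[int]) -> Iterator[int]:
    """Classic von Neumann unbiasing: 01 -> 0, 10 -> 1, 00 and 11 dropped."""
    for a, b in zip(bits[0::2], bits[1::2]):
        if a != b:
            yield a

def hash_blocks(bits: List[int], block_bits: int = 1024) -> bytes:
    """Hash each full block of bits with SHA-256 (block_bits: multiple of 8)."""
    out = b""
    for i in range(0, len(bits) - block_bits + 1, block_bits):
        block = bits[i:i + block_bits]
        packed = int("".join(map(str, block)), 2).to_bytes(block_bits // 8, "big")
        out += hashlib.sha256(packed).digest()
    return out

# Simulated source: absolute decay times with an arbitrary "true" rate.
rng = random.Random(1)
t, decay_times = 0.0, []
for _ in range(40_000):
    t += rng.expovariate(5.0)
    decay_times.append(t)

rate_est = len(decay_times) / decay_times[-1]  # observed decays per unit time
t0 = math.log(2) / rate_est                    # so that exp(-rate * t0) = 1/2
raw = slot_bits(decay_times, t0)
clean = list(von_neumann(raw))
key_material = hash_blocks(clean)
print(len(raw), len(clean), len(key_material))
```

Re-estimating `rate_est` from a recent window of counts is one way to adjust $T_0$ if the source rate drifts.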


Radioactive decay is a Poisson process. I'm a bit puzzled how you got a Gaussian-like distribution of the time between decays, since the time should have an exponential distribution.

In general, if you have a random variable X with a known distribution having a distribution function F, you get a uniform random variable Y in [0,1] by applying the distribution function to the random variable. I.e. Y = F(X).

The distribution function of an exponential distribution is $F(t) = 1 - e^{-\lambda t}$. So you should be able to get a uniform distribution by plugging the time between decays $t$ into that. The parameter $\lambda$ is the average number of decays per unit time, which you can measure, e.g. by counting the number of decays in one hour (then the unit of time for $t$ is also one hour).
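As a rough illustration of this transform, the sketch below estimates $\lambda$ from the data and maps each interval through $F$. The intervals are simulated stand-ins for real measurements, and the function names are my own.

```python
import math
import random
from typing import List

def estimate_rate(intervals: List[float]) -> float:
    """Decays per unit time: number of intervals divided by total time."""
    return len(intervals) / sum(intervals)

def to_uniform(t: float, lam: float) -> float:
    """Exponential CDF F(t) = 1 - exp(-lam * t), written with expm1 for accuracy."""
    return -math.expm1(-lam * t)

# Simulated inter-decay times (arbitrary rate) instead of real tube data.
rng = random.Random(7)
intervals = [rng.expovariate(2.5) for _ in range(20_000)]
lam = estimate_rate(intervals)
uniforms = [to_uniform(t, lam) for t in intervals]

# Crude check: a 10-bin histogram should be roughly flat.
counts = [0] * 10
for u in uniforms:
    counts[min(int(u * 10), 9)] += 1
print(counts)
```

This yields uniform real numbers in [0, 1), not bits, and the result is only as uniform as the estimate of $\lambda$ and the timer resolution allow.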

If the OP calculated the average of multiple delays, it will indeed tend towards a Normal (Gaussian) distribution due to the central limit theorem.
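To make that concrete, assuming the individual delays $T_1,\dots,T_n$ are independent and exponential with rate $\lambda$, the average of $n$ delays has

$$ \bar T_n=\frac{1}{n}\sum_{i=1}^{n}T_i,\qquad E[\bar T_n]=\frac{1}{\lambda},\qquad \operatorname{Var}[\bar T_n]=\frac{1}{n\lambda^2}. $$

Its exact distribution is a Gamma (Erlang) distribution with shape $n$, whose skewness is $2/\sqrt{n}$, so it looks more and more symmetric and Gaussian-like as $n$ grows.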


Any continuous distribution can be transformed to a uniform distribution by simply applying its cumulative distribution function. That is, if for each measurement you calculate the cumulative distribution up to the value that you observed, then the results of those calculations will, for a continuous distribution, be uniformly distributed in the unit interval.
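A short justification, assuming for simplicity that the distribution function $F$ of $X$ is continuous and strictly increasing, so that $F^{-1}$ exists: for $Y=F(X)$ and any $0<u<1$,

$$ \Pr[Y\le u]=\Pr[F(X)\le u]=\Pr[X\le F^{-1}(u)]=F(F^{-1}(u))=u, $$

which is exactly the distribution function of a uniform variable on the unit interval.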


Use a Zener diode as a noise generator: take a sample of the noise voltage on the diode (through a capacitor), amplify it, and measure the voltage with an ADC. After this process, take the square root of this value and keep only the mantissa (the numeric part after the decimal point). It should deliver absolutely true random numbers.
