I am trying to use a machine learning model to find a good initial mapping for a quantum circuit. The goal is to minimise the number of SWAP operations, i.e. the number of CNOT gates in the transpiled circuit. This is my methodology -

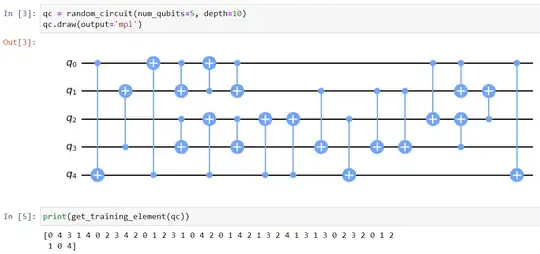

Generate a random quantum circuit with only CNOT gates. I generated a 5 qubit circuit and specified a random depth between 8 and 16 (No particular reason for picking these depths).
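A minimal sketch of this generation step, assuming the circuit is stored as a plain list of (control, target) pairs rather than as a Qiskit object. The function name and parameters are hypothetical, and the number of gates is used here as a rough stand-in for depth, which may not match how the depth was actually specified:

```python
import random

def random_cnot_circuit(num_qubits=5, min_gates=8, max_gates=16, seed=None):
    """Return a random CNOT-only circuit as a list of (control, target) pairs.

    Assumption: the gate count stands in for the circuit depth.
    """
    rng = random.Random(seed)
    num_gates = rng.randint(min_gates, max_gates)  # inclusive on both ends
    gates = []
    for _ in range(num_gates):
        # Pick two distinct qubits so control and target never coincide.
        control, target = rng.sample(range(num_qubits), 2)
        gates.append((control, target))
    return gates

circuit = random_cnot_circuit(seed=0)
print(len(circuit), circuit[:3])
```

Passing a seed makes a given circuit reproducible, which helps when regenerating part of the dataset.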

Extract the feature/x array for the quantum circuit by using the qubits that each CNOT gate acts on. For example, for the given circuit, the x array would look like this (read in pairs) -

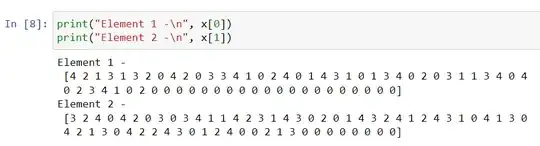

As a circuit with depth 5 will have fewer entries than a circuit with depth 11, I padded every array with 0s at the end, up to a maximum array length of 64. A few training elements would look like this -
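As a rough illustration of the flattening and padding described above (not the actual training data), assuming the circuit is available as a list of (control, target) pairs. The name `to_feature_vector` and its parameters are hypothetical:

```python
def to_feature_vector(gates, max_len=64, pad_value=0):
    """Flatten (control, target) pairs and right-pad to a fixed length."""
    flat = [qubit for pair in gates for qubit in pair]
    if len(flat) > max_len:
        raise ValueError(f"circuit needs {len(flat)} entries, max is {max_len}")
    return flat + [pad_value] * (max_len - len(flat))

example = [(0, 1), (2, 3), (1, 4)]  # illustrative circuit with three CNOTs
x = to_feature_vector(example)
print(x[:8])   # [0, 1, 2, 3, 1, 4, 0, 0]
print(len(x))  # 64
```

If qubits are labelled from 0, the padding value in this sketch coincides with the label of qubit 0; `pad_value` is exposed in case a distinct sentinel is preferred.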

Use the Qiskit transpiler to find the CNOT gate count of each possible initial mapping. As there are 5 qubits, there are 5! = 120 possible initial mappings. I ran the qiskit.transpile function in a loop 120 times, once for each initial mapping, and found the CNOT gate count for each mapping using the transpiled_circuit.count_ops() function.
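The loop over all 120 mappings can be sketched as follows. To keep the example self-contained, the transpiler call is replaced by `toy_cnot_cost`, a hypothetical toy proxy on an assumed linear chain of five physical qubits; it is not what Qiskit computes. In the real pipeline, `cost_fn` would presumably wrap qiskit.transpile with `initial_layout` set to the permutation and read the CNOT entry of count_ops():

```python
from itertools import permutations

NUM_QUBITS = 5
# All 120 layouts in a fixed (lexicographic) order, so "layout i" is well defined.
LAYOUTS = list(permutations(range(NUM_QUBITS)))

def toy_cnot_cost(gates, layout):
    """Toy stand-in for the transpiled CNOT count (NOT Qiskit's result).

    layout[logical] is the physical qubit on an assumed chain 0-1-2-3-4.
    Each CNOT costs 1, plus 3 CNOTs per SWAP needed to make its qubits
    adjacent (naively distance - 1 SWAPs, without updating the layout).
    """
    cost = 0
    for control, target in gates:
        distance = abs(layout[control] - layout[target])
        cost += 1 + 3 * (distance - 1)
    return cost

def counts_for_all_layouts(gates, cost_fn=toy_cnot_cost):
    """Evaluate cost_fn once per layout; index i matches LAYOUTS[i]."""
    return [cost_fn(gates, layout) for layout in LAYOUTS]

gates = [(0, 1), (1, 2), (0, 2)]
counts = counts_for_all_layouts(gates)
print(len(counts), min(counts))
```

Keeping `LAYOUTS` as a single fixed list means the same index always refers to the same mapping across the whole dataset.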

I decided to treat a range of values as acceptable: anything between the minimum CNOT gate count and one standard deviation above the minimum. Among the 120 transpiled circuits with different initial mappings, every layout whose CNOT gate count falls within this range is considered acceptable.
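A minimal sketch of this selection rule, assuming the standard deviation is taken over all the CNOT counts of one circuit (population form) and that the upper bound is inclusive. Both are assumptions, and `acceptable_layouts` is a hypothetical name:

```python
import statistics

def acceptable_layouts(counts):
    """Return indices whose count lies within [min, min + one std dev]."""
    best = min(counts)
    spread = statistics.pstdev(counts)  # population std dev (assumption)
    threshold = best + spread
    return [i for i, count in enumerate(counts) if count <= threshold]

counts = [6, 6, 9, 12, 7, 15]  # illustrative CNOT counts for six layouts
print(acceptable_layouts(counts))  # [0, 1, 2, 4]
```

With this rule, the number of acceptable layouts varies from circuit to circuit, since it depends on how spread out the counts are.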

Found all the acceptable layouts and encoded them in an array of size 120. For example, if layouts 0, 1, 2 and 119 were deemed to be acceptable, the encoded array would look like
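As an illustration, assuming the encoding is a multi-hot vector (1 at each acceptable layout index, 0 elsewhere), the example above could be built like this. The function name is hypothetical:

```python
def encode_layouts(acceptable, num_layouts=120):
    """Multi-hot encode a list of acceptable layout indices."""
    target = [0] * num_layouts
    for index in acceptable:
        target[index] = 1
    return target

y = encode_layouts([0, 1, 2, 119])
print(y[:4], y[-1], sum(y))  # [1, 1, 1, 0] 1 4
```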

The x array will be my training data and the encoded array will be my target data.

I repeated the above steps in a loop to generate a dataset of size 80000 i.e., eighty thousand random circuits of 5 qubits with a depth between 4 and 16 and their acceptable initial mappings as determined by the CNOT gate count by the Qiskit transpiler.

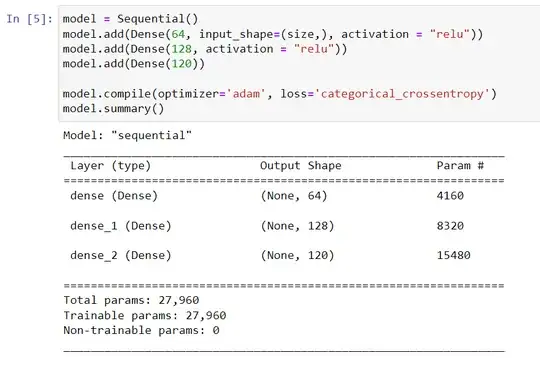

I normalise the x data and feed it to a dense neural network with the following architecture -

However, the network is not learning anything and the accuracy does not go above 1-2%. What am I doing wrong? Is this an error in my overall methodology or is this an error just in the ML model? Is there any way to fix this?