This is just an expansion on the previous two answers.

Using Newton's method

As Claude points out, the solution to $c_1^d+\ldots+c_n^d=1$ may be estimated numerically by using Newton's method to find a root of the function

$$
f(d)=\left(\sum _{i=1}^n c_i^d \right)-1.
$$

In fact, it may be proved that this works from any positive starting value $d_0$.

To see this, first note that for any $c\in(0,1)$, the function $g(d)=c^d$ is continuous and strictly decreasing with $g(0)=1$ and

$$\lim_{d\rightarrow\infty}g(d)=0.$$

It follows that $f$ is continuous and strictly decreasing with $f(0)=n-1>0$ (assuming $n\geq 2$) and

$$\lim_{d\rightarrow\infty}f(d)=-1.$$

By the intermediate value theorem, $f$ has a positive root. That root is unique since $f$ is strictly decreasing.

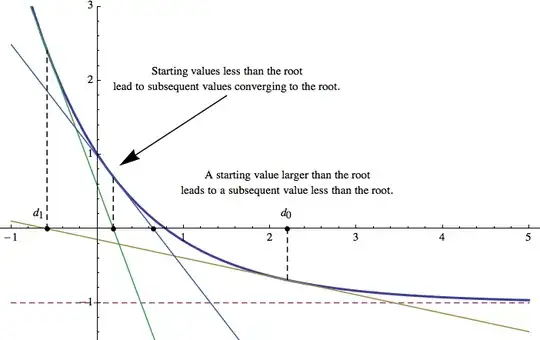

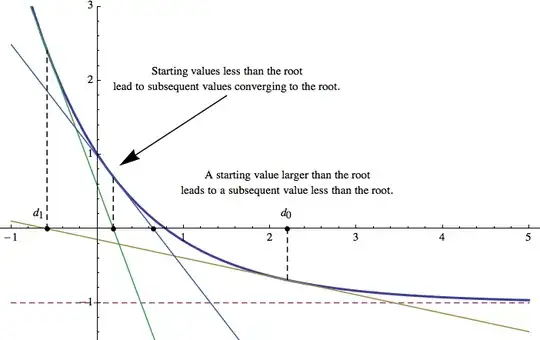

Finally, $g(d)$ (and, therefore, $f(d)$) has a positive second derivative, since $g''(d)=(\log c)^2\,c^d>0$. It is therefore convex (often called concave up in calculus). Under these conditions it can be proved that Newton's method will converge to the unique root. This is fairly easy to see if you understand the "follow the tangent" approach to Newton's method, and I've tried to illustrate this in the accompanying picture. It's also proved on page four of this paper.
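For concreteness, here is a minimal Python sketch of the iteration described above. The function name `similarity_dimension` and the parameters `d0`, `tol` and `max_iter` are my own choices for illustration, not anything from the other answers. It uses $f'(d)=\sum_i c_i^d\log c_i$, which is always negative.

```python
import math

def similarity_dimension(ratios, d0=1.0, tol=1e-12, max_iter=200):
    """Estimate the root d of sum(c**d for c in ratios) = 1 by Newton's method."""
    if not all(0.0 < c < 1.0 for c in ratios):
        raise ValueError("each ratio must lie strictly between 0 and 1")
    logs = [math.log(c) for c in ratios]
    d = d0
    for _ in range(max_iter):
        powers = [math.exp(d * lc) for lc in logs]            # c_i**d
        f = sum(powers) - 1.0                                 # f(d)
        fprime = sum(p * lc for p, lc in zip(powers, logs))   # f'(d) < 0
        step = f / fprime
        d -= step                                             # follow the tangent
        if abs(step) < tol:
            return d
    raise RuntimeError("Newton's method did not converge within max_iter steps")

print(similarity_dimension([1/2, 1/4]))
print(similarity_dimension([1/8, 1/4], d0=3.0))
```

One practical caveat: the convergence argument is about exact arithmetic. In floating point, a starting value far to the right of the root makes $f'(d_0)$ tiny, so the first step can throw the iterate far into the negative numbers, where progress is slow and `math.exp` may overflow. A moderate `d0` such as 1 avoids this.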

Analytic solution

As the other answer points out, the equation is easy to solve in the case that $c_1=\dots=c_n=c$. I disagree with the statement that, "This is by far the most common case in practice," however. Perhaps the case $c_1=\dots=c_n=c$ occurs often in illustrative examples because it is the easiest case to solve and because of its clear connection with box-counting dimension. The whole point of the formula $c_1^d+\ldots+c_n^d=1$, however, is that there are plenty of examples that do not fit this scheme. I suppose that one could argue similarly that self-similar sets are not typical of fractals in general, but they appear quite common because we understand them.

At any rate, we can generally find some sort of analytic solution of $c_1^d+\ldots+c_n^d=1$ precisely when the $c_i$s are exponentially commensurable, i.e. they can all be expressed as positive integer powers of a common base. As a simple example, consider

$$\left(\frac{1}{2}\right)^d + \left(\frac{1}{4}\right)^d = 1.$$

This equation might represent the fractal dimension of a Cantor type set obtained by replacing an interval with two pieces, one scaled by the factor $1/2$ and the other by the factor $1/4$, and then iterating that procedure. Now, since $4=2^2$, the left hand side can be rewritten as

$$\left(\frac{1}{2}\right)^d + \left(\frac{1}{4}\right)^d =
\left(\frac{1}{2}\right)^d + \left(\frac{1}{2}\right)^{2d} =
\left(\frac{1}{2}\right)^d + \left(\left(\frac{1}{2}\right)^{d}\right)^2.$$

Thus, the equation can be rewritten

$$\left(\frac{1}{2}\right)^d + \left(\left(\frac{1}{2}\right)^{d}\right)^2=1.$$

Substituting $q=(1/2)^d$, we get the quadratic $q^2+q-1=0$ which has the unique positive solution $q=\left(\sqrt{5}-1\right)/2.$ The solution to the original equation is, therefore,

$$d=\frac{\log\left(\frac{1}{2}\left(\sqrt{5}-1\right)\right)}{\log(1/2)}.$$
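As a quick sanity check (just a sketch, with variable names of my own choosing), the closed form can be substituted back into the original equation numerically. The value of $d$ comes out to about $0.6942$ and the residual should vanish up to rounding error.

```python
import math

q = (math.sqrt(5) - 1) / 2           # positive root of q**2 + q - 1 = 0
d = math.log(q) / math.log(1 / 2)    # undo the substitution q = (1/2)**d

# Plug d back into (1/2)**d + (1/4)**d - 1; this should be essentially zero.
residual = (1 / 2) ** d + (1 / 4) ** d - 1
print(d, residual)
```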

A somewhat trickier example is provided by

$$\left(\frac{1}{8}\right)^d + \left(\frac{1}{4}\right)^d = 1.$$

Since $1/8=(1/2)^3$ and $1/4=(1/2)^2$, the same substitution $q=(1/2)^d$ leads to the cubic $q^3+q^2-1=0$. The solution is therefore

$$d=\frac{\log(\lambda)}{\log(1/2)}\approx 0.405685,$$

where

$$\lambda = \frac{1}{3} \left(-1+\sqrt[3]{\frac{25}{2}-\frac{3\sqrt{69}}{2}}+\sqrt[3]{\frac{1}{2} \left(25+3\sqrt{69}\right)}\right) \approx 0.75487$$

is the unique real root of $q^3+q^2-1=0$. Note that we have an analytic expression in terms of the root of a polynomial. In this case, $\lambda$ can be expressed in terms of radicals, while in other cases it might not be.
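When the polynomial root has no expression in radicals, the same reduction still helps numerically. The sketch below assumes the ratios are given as `base**k` for positive integers `k`. It finds the root of $\sum_k q^k=1$ in $(0,1)$ by bisection, which works because the left side is increasing there, and then recovers $d=\log q/\log(\text{base})$. The helper name `dimension_from_exponents` and its parameters are hypothetical and only for illustration.

```python
import math

def dimension_from_exponents(base, exponents, tol=1e-14):
    """Ratios are base**k for k in exponents (0 < base < 1, positive integers k).

    Returns (q, d), where q in (0, 1) solves sum(q**k) = 1 and
    d = log(q) / log(base).
    """
    def p(q):
        return sum(q ** k for k in exponents) - 1.0

    lo, hi = 0.0, 1.0            # p(0) = -1 < 0 and p(1) > 0 for two or more ratios
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if p(mid) < 0:
            lo = mid             # root lies to the right of mid
        else:
            hi = mid             # root lies at or to the left of mid
    q = (lo + hi) / 2
    return q, math.log(q) / math.log(base)

# The cubic example: ratios 1/8 = (1/2)**3 and 1/4 = (1/2)**2.
q, d = dimension_from_exponents(0.5, [3, 2])

# Closed form for lambda from the text, for comparison.
s = math.sqrt(69)
lam = (-1 + (25 / 2 - 3 * s / 2) ** (1 / 3) + ((25 + 3 * s) / 2) ** (1 / 3)) / 3
print(q, lam, d)
```

The two values of the root should agree to many digits, and `d` should match the approximation $0.405685$ given above.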