Ok I think I get it.

The first-order Taylor formula (dropping the higher-order terms) is:

$f(x_{i+1}) \approx f(x_i) + f'(x_i)(x_{i+1} - x_i)$

So, if we're looking for a zero, we set the left-hand side to $0$ and take $x_{i+1}$ to be the point where this holds:

$0 = f(x_i) + f'(x_i)(x_{i+1} - x_i)$

Dividing by $f'(x_i)$, which has to be nonzero:

$0 = \frac{f(x_i)}{f'(x_i)} + x_{i+1} - x_i$

$x_{i+1} = x_i - \frac{f(x_i)}{f'(x_i)}$

And at the end, we get the Newton-Raphson formula.
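To make the update rule concrete, here is a minimal Python sketch of the iteration derived above. The function name `newton_raphson`, the tolerance, the iteration cap and the test function $f(x) = x^2 - 2$ are my own choices for illustration, not part of the derivation.

```python
def newton_raphson(f, df, x0, tol=1e-12, max_iter=50):
    """Iterate x_{i+1} = x_i - f(x_i) / f'(x_i) until the step is below tol."""
    x = x0
    for _ in range(max_iter):
        slope = df(x)
        if slope == 0:
            # The derivation divides by f'(x_i), so a zero slope breaks the update.
            raise ZeroDivisionError("f'(x_i) is zero; the update is undefined")
        step = f(x) / slope
        x = x - step
        if abs(step) < tol:
            return x
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical example: the positive zero of f(x) = x^2 - 2, i.e. sqrt(2).
root = newton_raphson(lambda x: x * x - 2, lambda x: 2 * x, x0=1.0)
print(root)
```

Starting from $x_0 = 1$, the iterates should approach $\sqrt{2}$ within a handful of steps. A starting point where $f'(x_i) = 0$ (here $x_0 = 0$) makes the update undefined, which is why the sketch checks for it.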

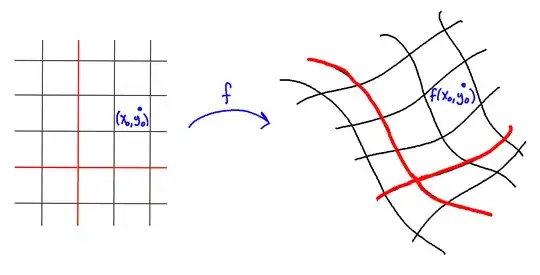

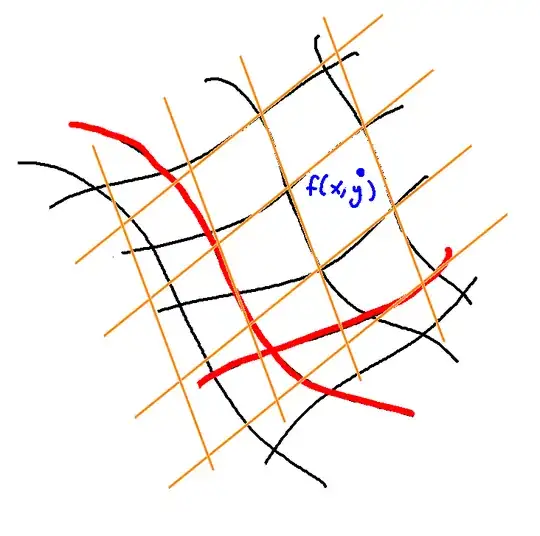

If we do the same in 2 dimensions, with two functions $f_1$ and $f_2$ of $(x, y)$ and all partial derivatives evaluated at $(x_i, y_i)$:

$\begin{bmatrix} f_1(x_{i+1}, y_{i+1}) \\ f_2(x_{i+1}, y_{i+1}) \end{bmatrix} \approx \begin{bmatrix} f_1(x_i, y_i) \\ f_2(x_i, y_i) \end{bmatrix} + \begin{bmatrix} \frac{\partial f_1}{\partial x} & \frac{\partial f_1}{\partial y} \\ \frac{\partial f_2}{\partial x} & \frac{\partial f_2}{\partial y}\end{bmatrix} \begin{bmatrix} x_{i+1} - x_i \\ y_{i+1} - y_i \end{bmatrix}$

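which is equivalent to the following, where $x_i$ now stands for the vector $(x_i, y_i)$, $F = (f_1, f_2)$ and $J_F$ is the Jacobian matrix of $F$:

$F(x_{i+1}) \approx F(x_i) + J_F(x_i)(x_{i+1} - x_i)$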

and if we expand the matrix product, we get:

$\begin{bmatrix} f_1(x_{i+1}, y_{i+1}) \\ f_2(x_{i+1}, y_{i+1}) \end{bmatrix} \approx \begin{bmatrix} f_1(x_i, y_i) \\ f_2(x_i, y_i) \end{bmatrix} + \begin{bmatrix} \frac{\partial f_1}{\partial x}(x_{i+1} - x_i) + \frac{\partial f_1}{\partial y}(y_{i+1} - y_i) \\ \frac{\partial f_2}{\partial x}(x_{i+1} - x_i) + \frac{\partial f_2}{\partial y}(y_{i+1} - y_i) \end{bmatrix}$

$\begin{bmatrix} f_1(x_{i+1}, y_{i+1}) \\ f_2(x_{i+1}, y_{i+1}) \end{bmatrix} \approx \begin{bmatrix} f_1(x_i, y_i) + \frac{\partial f_1}{\partial x}(x_{i+1} - x_i) + \frac{\partial f_1}{\partial y}(y_{i+1} - y_i) \\ f_2(x_i, y_i) + \frac{\partial f_2}{\partial x}(x_{i+1} - x_i) + \frac{\partial f_2}{\partial y}(y_{i+1} - y_i) \end{bmatrix}$
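As a sanity check on the expanded form, the following Python sketch compares the exact value of $F$ with the row-by-row linearization for two step sizes. The system $f_1 = x^2 + y^2 - 4$, $f_2 = x - y$, the base point $(1, 2)$ and the helper names are all assumptions picked for illustration.

```python
def F(x, y):
    # Hypothetical system: f1 = x^2 + y^2 - 4, f2 = x - y.
    return (x * x + y * y - 4, x - y)


def J(x, y):
    # Jacobian of F: rows are (df1/dx, df1/dy) and (df2/dx, df2/dy).
    return ((2 * x, 2 * y), (1.0, -1.0))


def linearized(x0, y0, x1, y1):
    """F(x0, y0) + J_F(x0, y0) * (x1 - x0, y1 - y0), expanded row by row."""
    f1, f2 = F(x0, y0)
    (a, b), (c, d) = J(x0, y0)
    dx, dy = x1 - x0, y1 - y0
    return (f1 + a * dx + b * dy, f2 + c * dx + d * dy)


def max_error(h):
    """Largest component-wise gap between F and its linearization for a step (h, h)."""
    exact = F(1.0 + h, 2.0 + h)
    approx = linearized(1.0, 2.0, 1.0 + h, 2.0 + h)
    return max(abs(e - a) for e, a in zip(exact, approx))


err_big = max_error(0.1)
err_small = max_error(0.01)
print(err_big, err_small)
```

For this particular system the gap comes only from the neglected second-order term of $f_1$, so shrinking the step by a factor of 10 should shrink the error by roughly a factor of 100. That is the sense in which the formula is an approximation rather than an equality.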

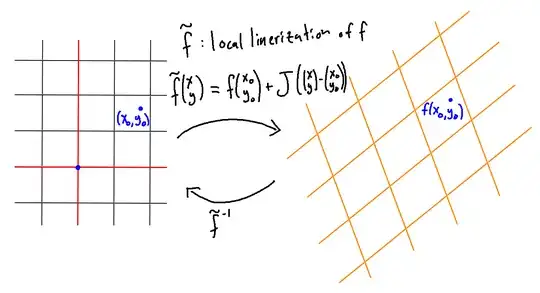

We can see the Taylor formula again in 2 dimensions here, one row per function. So if we want to get the Newton-Raphson formula, we start from the linearization and set $F(x_{i+1}) = 0$:

$F(x_{i+1}) \approx F(x_i) + J_F(x_i)(x_{i+1} - x_i)$

$0 = F(x_i) + J_F(x_i)(x_{i+1} - x_i)$

Multiplying on the left by $J_F^{-1}(x_i)$, which requires the Jacobian to be invertible at $x_i$:

$0 = J_F^{-1}(x_i)F(x_i) + x_{i+1} - x_i$

$x_{i+1} = x_i - J_F^{-1}(x_i)F(x_i)$

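and finally we get the formula that looked so mysterious: the 1D division by $f'(x_i)$ has simply become a multiplication by the inverse Jacobian.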
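Here is a small Python sketch of the 2D iteration, assuming a $2 \times 2$ system so that $J_F^{-1}F$ can be written out by hand with the closed-form inverse. The name `newton_raphson_2d`, the example system and the starting point are hypothetical choices, not something taken from the derivation itself.

```python
def newton_raphson_2d(F, J, x0, y0, tol=1e-12, max_iter=50):
    """Iterate (x, y) <- (x, y) - J_F^{-1}(x, y) F(x, y) for a 2x2 system."""
    x, y = x0, y0
    for _ in range(max_iter):
        f1, f2 = F(x, y)
        (a, b), (c, d) = J(x, y)
        det = a * d - b * c
        if det == 0:
            # The derivation needs J_F to be invertible at the current point.
            raise ZeroDivisionError("Jacobian is singular; the update is undefined")
        # J^{-1} F with J^{-1} = (1/det) * [[d, -b], [-c, a]]
        dx = (d * f1 - b * f2) / det
        dy = (a * f2 - c * f1) / det
        x, y = x - dx, y - dy
        if max(abs(dx), abs(dy)) < tol:
            return x, y
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical system: circle x^2 + y^2 = 4 intersected with the line x = y.
def F(x, y):
    return (x * x + y * y - 4, x - y)


def J(x, y):
    return ((2 * x, 2 * y), (1.0, -1.0))


x_root, y_root = newton_raphson_2d(F, J, x0=1.0, y0=2.0)
print(x_root, y_root)
```

From the starting point $(1, 2)$ the iterates should approach $(\sqrt{2}, \sqrt{2})$. For larger systems, the usual practice is to solve the linear system $J_F(x_i)\,\Delta = F(x_i)$ rather than forming the inverse explicitly; the hand-written inverse here is only meant to mirror the formula.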

(To be honest, I'm not completely sure of my derivation, but it's a bit clearer to me now.)