I am trying to show that

$$\int_0^{\infty} \frac{\log(x)^2}{1+x^2}dx = \pi^3/8$$

by integrating over a certain contour, but I am having some trouble. I first extend $\log$ holomorphically to the region $\{ z \in \mathbb C: z \neq ix \text{ for all } x \leq 0\}$ (the plane cut along the non-positive imaginary axis) by setting

$$\log(re^{i \theta}) = \ln r + i \theta, \qquad r > 0,\ -\frac{\pi}{2} < \theta < \frac{3 \pi}{2}$$

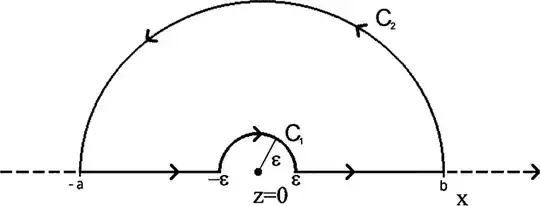

and then I integrate $\log(z)^2/(1+z^2)$ over the region

letting $\epsilon$ go to $0$ and $a, b$ go to $\infty$. The only singularity of $\log(z)^2/(1+z^2) = \log(z)^2/\big((z+i)(z-i)\big)$ inside this region is the simple pole at $z = i$, where the residue is

$$\frac{\log(i)^2}{2i} = \frac{(\pi i/2)^2}{2i} = -\frac{\pi^2}{8i}$$
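As a quick sanity check on this residue, here is a minimal Python sketch using only the standard library. It assumes that `cmath.log`, which uses the principal branch, agrees with the branch chosen above at $z = i$ (it should, since $\arg i = \pi/2$ lies in both ranges); the variable names are purely illustrative.

```python
import cmath
import math

# Principal-branch log; at z = i it agrees with the branch chosen above,
# since arg(i) = pi/2 lies in (-pi/2, 3*pi/2).
log_i = cmath.log(1j)

# Residue of log(z)^2 / ((z + i)(z - i)) at the simple pole z = i.
residue = log_i**2 / (2j)

# Hand-computed value from the formula above: -pi^2 / (8i).
expected = -math.pi**2 / 8j

# Right-hand side of the residue theorem: 2*pi*i times the residue.
contour_value = 2j * math.pi * residue

print(residue, expected, contour_value)
```

The computed residue matches the hand computation, and `contour_value` comes out as $-\pi^3/4$, so the residue itself does not seem to be the source of the sign problem.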

Assuming the integrals over the circular arcs go to $0$ (which I haven't proven yet), the residue theorem then implies that

$$2 \int\limits_0^{\infty} \frac{\log(x)^2}{1+x^2} dx = 2 \pi i \Big(- \frac{\pi^2}{8i}\Big) = - \frac{\pi^3}{4}$$

or

$$\int_0^{\infty} \frac{\log(x)^2}{1+x^2} dx = - \frac{\pi^3}{8}.$$
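This cannot be right, since the integrand is nonnegative. A rough numerical check also supports the value $+\pi^3/8$. The sketch below is only an illustration, not part of the contour argument: it substitutes $x = e^t$, which turns the integral into $\int_{-\infty}^{\infty} \frac{t^2}{2\cosh t}\,dt$, and applies a plain trapezoidal rule on a truncated interval. The cutoff `T`, the subinterval count `N`, and the helper names are my own arbitrary choices.

```python
import math

def integrand(t: float) -> float:
    # After x = e^t, log(x)^2 / (1 + x^2) dx becomes t^2 / (2 cosh t) dt.
    return t * t / (2.0 * math.cosh(t))

def trapezoid(f, a: float, b: float, n: int) -> float:
    # Composite trapezoidal rule with n equal subintervals on [a, b].
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for k in range(1, n):
        total += f(a + k * h)
    return total * h

T = 40.0   # truncation point; the tail beyond |t| = 40 is negligible
N = 8000   # number of subintervals (step size 0.01)
approx = trapezoid(integrand, -T, T, N)
target = math.pi**3 / 8

print(approx, target)
```

The two printed numbers agree closely, so the target identity looks right and the stray minus sign must come from somewhere in the contour argument.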

I don't know why this minus sign appeared. Where am I going wrong?