Consider two non-constant real polynomials $f(x)$ and $g(x)$:

$$f(x)=f_0 + f_1 (x-x_0) + \dots + f_N (x-x_0)^N$$

$$g(x)=g_0 + g_1 (x-x_1) + \dots + g_M (x-x_1)^M$$

where $f_0,\dots,f_N,\ g_0,\dots,g_M,\ x,\ x_0,\ x_1 \in \mathbb{R}$ and $M,N\in \mathbb{N}^+$.

I would like to know whether there exists a function $H(f,g)$ such that:

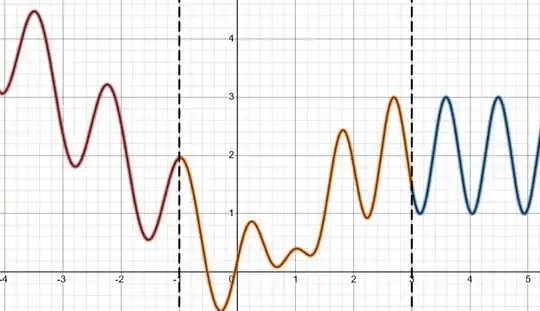

- the Taylor expansion of $h(x)=H(f(x),g(x))$ up to order $N$ around $x_0$ coincides with $f$;
- the Taylor expansion of $h(x)$ up to order $M$ around $x_1$ coincides with $g$.
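To test a candidate $H$ against these two conditions, one can compute the Taylor coefficients of $h$ exactly. The sketch below is a set of hypothetical helpers, not a method from the question: it assumes $H$ is a polynomial in two variables (only a special case of a rational $H$), stored as a dictionary `{(i, j): a_ij}` meaning $\sum a_{ij} u^i v^j$, and it uses exact `Fraction` arithmetic so that "coincides" means exact equality of the first $N+1$ (respectively $M+1$) coefficients.

```python
from fractions import Fraction
from math import comb

# Hypothetical helpers: a polynomial in x is a list p with p[k] the coefficient of x**k.


def trim(p):
    """Drop trailing zero coefficients, keeping at least one entry."""
    p = list(p)
    while len(p) > 1 and p[-1] == 0:
        p.pop()
    return p


def add(p, q):
    n = max(len(p), len(q))
    return trim([(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0)
                 for k in range(n)])


def mul(p, q):
    r = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return trim(r)


def power(p, n):
    r = [Fraction(1)]
    for _ in range(n):
        r = mul(r, p)
    return r


def from_shifted(c, center):
    """Monomial coefficients of sum_k c[k] * (x - center)**k."""
    shift = [Fraction(-center), Fraction(1)]  # the polynomial x - center
    p = [Fraction(0)]
    for k, ck in enumerate(c):
        p = add(p, mul([Fraction(ck)], power(shift, k)))
    return p


def taylor(p, center, order):
    """Coefficients of (x - center)**k, k = 0..order, in the expansion of p."""
    c = Fraction(center)
    return [sum(Fraction(p[j]) * comb(j, k) * c ** (j - k) for j in range(k, len(p)))
            for k in range(order + 1)]


def compose(H, f, g):
    """h(x) = H(f(x), g(x)) for H = {(i, j): a_ij}, i.e. sum of a_ij * u**i * v**j."""
    h = [Fraction(0)]
    for (i, j), a in H.items():
        h = add(h, mul([Fraction(a)], mul(power(f, i), power(g, j))))
    return h


def check(H, fc, x0, gc, x1):
    """Test both conditions: Taylor data of h at x0 (order N) and at x1 (order M)."""
    f, g = from_shifted(fc, x0), from_shifted(gc, x1)
    h = compose(H, f, g)
    N, M = len(fc) - 1, len(gc) - 1
    ok_x0 = taylor(h, x0, N) == [Fraction(c) for c in fc]
    ok_x1 = taylor(h, x1, M) == [Fraction(c) for c in gc]
    return ok_x0, ok_x1


# f = 1 + 2x + 3x^2 around x0 = 0, g = 5 - (x - 1) around x1 = 1
print(check({(1, 0): 1}, [1, 2, 3], 0, [5, -1], 1))  # H(u, v) = u -> (True, False)
print(check({(0, 1): 1}, [1, 2, 3], 0, [5, -1], 1))  # H(u, v) = v -> (False, True)
```

In this illustrative example the projection $H(u,v)=u$ satisfies the first condition but not the second, and $H(u,v)=v$ does the reverse; an admissible $H$ would have to return `(True, True)` for the given data.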

I was thinking about using a sort of harmonic mean of $f$ and $g$, or transition functions built out of $f$ and $g$, but I am stuck. Moreover, I am not even sure that such a function exists if $H$ is restricted to be a rational function of two variables. Other strategies are certainly possible; if you have any idea, please tell me. Thank you in advance for any help, hint, or idea!

Note: I am asking that $h(x)$ be obtained from $H(f,g)$, not from $H(f,g,x)$. Therefore, a "trivial" construction like $\tilde{h}(x)= \phi_0(x)f(x)+\phi_1(x)g(x)$, for some appropriate $\phi_0(x)$ and $\phi_1(x)$, is not admissible. Indeed, since it is required that $h(x)=H(f(x),g(x))$, the chain rule gives

$$ h'(x) = f'(x)\, \partial_f H + g'(x)\, \partial_g H \, . $$

The "trivial" $\tilde{h}(x)$ does not have this property: its derivative

$$ \tilde{h}'(x) = \phi_0(x) f'(x) + \phi_1(x) g'(x) + \phi_0'(x) f(x) + \phi_1'(x) g(x) $$

contains the extra terms $\phi_0' f + \phi_1' g$, which do not involve $f'$ or $g'$, so $\tilde{h}'$ is not of the form $a\, f' + b\, g'$ with coefficients depending only on $f$ and $g$.
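The difference between the two situations can be checked with exact polynomial arithmetic. The following sketch is illustrative only and assumes $H$ is a polynomial in two variables, stored as a hypothetical dictionary `{(i, j): a_ij}` for $\sum a_{ij} u^i v^j$. It verifies $h' = f'\,\partial_f H + g'\,\partial_g H$ for such an $H$, and computes the leftover $\tilde{h}' - (\phi_0 f' + \phi_1 g')$ for the blend, which should equal $\phi_0' f + \phi_1' g$.

```python
from fractions import Fraction

# Hypothetical helpers: a polynomial in x is a list p with p[k] the coefficient of x**k;
# a polynomial H(u, v) is a dict {(i, j): a_ij} meaning sum of a_ij * u**i * v**j.


def trim(p):
    p = list(p)
    while len(p) > 1 and p[-1] == 0:
        p.pop()
    return p


def add(p, q):
    n = max(len(p), len(q))
    return trim([(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0)
                 for k in range(n)])


def mul(p, q):
    r = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return trim(r)


def power(p, n):
    r = [Fraction(1)]
    for _ in range(n):
        r = mul(r, p)
    return r


def deriv(p):
    return trim([k * p[k] for k in range(1, len(p))] or [Fraction(0)])


def compose(H, f, g):
    h = [Fraction(0)]
    for (i, j), a in H.items():
        h = add(h, mul([Fraction(a)], mul(power(f, i), power(g, j))))
    return h


def d_u(H):  # partial derivative of H with respect to its first argument
    return {(i - 1, j): i * a for (i, j), a in H.items() if i > 0}


def d_v(H):  # partial derivative of H with respect to its second argument
    return {(i, j - 1): j * a for (i, j), a in H.items() if j > 0}


def chain_rule_holds(H, f, g):
    """Check d/dx H(f, g) == f' * (dH/du)(f, g) + g' * (dH/dv)(f, g) exactly."""
    lhs = deriv(compose(H, f, g))
    rhs = add(mul(deriv(f), compose(d_u(H), f, g)),
              mul(deriv(g), compose(d_v(H), f, g)))
    return lhs == rhs


def blend_leftover(phi0, phi1, f, g):
    """tilde_h' - (phi0 f' + phi1 g') for tilde_h = phi0 f + phi1 g."""
    tilde_h = add(mul(phi0, f), mul(phi1, g))
    linear_part = add(mul(phi0, deriv(f)), mul(phi1, deriv(g)))
    return add(deriv(tilde_h), mul([Fraction(-1)], linear_part))


f, g = [1, 2, 3], [0, 1]  # f = 1 + 2x + 3x^2, g = x
print(chain_rule_holds({(2, 1): 1, (0, 0): 5}, f, g))  # H = u^2 v + 5
print(blend_leftover([1, -1], [0, 1], f, g))  # phi0 = 1 - x, phi1 = x
```

With these example choices the chain-rule check succeeds, while the blend leaves $-1 - x - 3x^2$, which is exactly $\phi_0' f + \phi_1' g$ and is not zero.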