Is it possible to represent the sum of two geometric Brownian motions as another geometric Brownian motion?

Intro_________________

I am trying to understand how a weighted combination of two arbitrarily chosen geometric Brownian paths would behave. Say: $$S_1(t) = S_{01}e^{(\mu_1-\sigma_1^2/2)t+\sigma_1 W_{1t}}$$ $$S_2(t) = S_{02}e^{(\mu_2-\sigma_2^2/2)t+\sigma_2 W_{2t}}$$ where $W_{1t}$ and $W_{2t}$ are standard Brownian motions, $W_{it} \sim N(0,\ t)$, not necessarily independent, with correlation $\rho_{12} = \frac{\text{COV}[\sigma_1 W_{1t},\ \sigma_2 W_{2t}]}{\sigma_1 \sigma_2\, t}$. Now, given weights $0 \leq a_i \leq 1$ such that $\sum_i a_i = 1$, I want to know whether it is possible to approximate the process $$S_3(t) = a_1 S_1(t)+a_2 S_2(t)$$ as if it were built as $$S_3(t) = S_{03}e^{(\mu_3-\sigma_3^2/2)t+\sigma_3 W_{3t}}$$ which seems to be a classic approximation for the sum of log-normal variables.
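One way to get concrete parameters, sketched below under my own assumptions, is to match the first two moments of $S_3(t)$ to those of a single GBM (the usual moment-matching approach for sums of log-normals, often called Fenton-Wilkinson). The helper name `moment_matched_gbm` is hypothetical; it takes `rho` as the correlation coefficient of $W_{1t}$ and $W_{2t}$, and it fixes $S_{03}=a_1S_{01}+a_2S_{02}$ so that $S_3(0)$ matches.

```python
import math


def moment_matched_gbm(a1, s01, mu1, sig1, a2, s02, mu2, sig2, rho, t):
    """Hypothetical helper: match E[S3(t)] and E[S3(t)^2] to a single GBM.

    S3(t) = a1*S1(t) + a2*S2(t) is replaced by S03*exp((mu3 - sig3^2/2) t + sig3 W3(t)).
    Assumes corr(W1(t), W2(t)) = rho and S03 = a1*S01 + a2*S02. Requires t > 0.
    Returns (S03, mu3, sig3).
    """
    s03 = a1 * s01 + a2 * s02
    # First moment: E[S_i(t)] = S0i * exp(mu_i t)
    m1 = a1 * s01 * math.exp(mu1 * t) + a2 * s02 * math.exp(mu2 * t)
    # Second moment, with cross term E[S1 S2] = S01 S02 exp((mu1 + mu2 + rho sig1 sig2) t)
    m2 = (
        (a1 * s01) ** 2 * math.exp((2.0 * mu1 + sig1 ** 2) * t)
        + (a2 * s02) ** 2 * math.exp((2.0 * mu2 + sig2 ** 2) * t)
        + 2.0 * a1 * a2 * s01 * s02 * math.exp((mu1 + mu2 + rho * sig1 * sig2) * t)
    )
    # For a GBM: E[S] = S0 exp(mu t) and E[S^2] / E[S]^2 = exp(sig^2 t)
    mu3 = math.log(m1 / s03) / t
    sig3 = math.sqrt(math.log(m2 / m1 ** 2) / t)
    return s03, mu3, sig3


# Example: equal weights, different drifts and volatilities, mild correlation
for horizon in (0.5, 1.0, 5.0):
    print(horizon, moment_matched_gbm(0.5, 100.0, 0.05, 0.2, 0.5, 100.0, 0.08, 0.3, 0.3, horizon))
```

The matched $\mu_3$ and $\sigma_3$ generally change with the horizon $t$, which is one way to see that the weighted sum is only approximately a GBM.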

To find the parameters, I am trying to compute the expected value and the variance of $S_3(t)$, since from them I could estimate the approximate parameters $\mu_3$, $\sigma_3$ and some random process $W_{3t}$, but I got stuck splitting the logarithm of the sum. I aim to find these parameters so that later I can add other terms by extension, as in $\ln(S_5) = \ln(S_4+S_3)$, and so on.

What I have done so far_________________

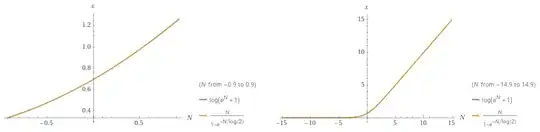

I started with the following. Since I can write $$S_3(t) = S_{03}e^{(\mu_3-\sigma_3^2/2)t+\sigma_3 W_{3t}} = e^{\ln(S_{03})+(\mu_3-\sigma_3^2/2)t+\sigma_3 W_{3t}} = e^{Z}$$ $$a_1 S_1(t) = a_1 S_{01}e^{(\mu_1-\sigma_1^2/2)t+\sigma_1 W_{1t}} = e^{\ln(a_1 S_{01})+(\mu_1-\sigma_1^2/2)t+\sigma_1 W_{1t}} = e^{X}$$ $$a_2 S_2(t) = a_2 S_{02}e^{(\mu_2-\sigma_2^2/2)t+\sigma_2 W_{2t}} = e^{\ln(a_2 S_{02})+(\mu_2-\sigma_2^2/2)t+\sigma_2 W_{2t}} = e^{Y}$$ and I am trying to find $E[\ln(e^Z)] = E[Z]$ and $\text{Var}[\ln(e^Z)] = \text{Var}[Z]$, I need a way to split $$Z = \ln(e^X+e^Y)$$ since I don't know how to apply these operators to the logarithm of a sum. First I tried to split the logarithm of the sum by factorization: $$Z=\ln(e^X+e^Y) = \ln(e^X(1+e^{Y-X}))=\ln(e^X)+\ln(1+e^{Y-X})= X+\ln(1+e^{Y-X})$$ so I studied how $\ln(1+e^N)$ behaves.

As can be seen in plots in Wolfram-Alpha, the function quickly behaves as $\ln(1+e^N)\approx N$ for large $N$, or equivalently for $Y \gg X$, so that: $$Z = X+\ln(1+e^{Y-X}) \approx X+Y-X = Y$$ This tells me that the dominant term saturates the logarithm, drowning the other variable as if it were slight noise around the main trend (an interesting insight, considering that both terms drift away from each other "exponentially fast" as time passes).
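A small numerical sketch of this factorization (my own, not from any library): pivoting on the larger of the two arguments gives a stable way to evaluate $\ln(e^X+e^Y)$, and it shows how fast the correction $\ln(1+e^{-|Y-X|})$ dies out as the gap grows. The function name `log_sum_exp2` is just an illustrative choice.

```python
import math


def log_sum_exp2(x, y):
    """ln(e^x + e^y) computed as max(x, y) + ln(1 + e^{-|x - y|}).

    Same factorization as Z = X + ln(1 + e^{Y - X}), but pivoting on the larger
    argument so the exponential never exceeds 1 (no overflow).
    """
    m = max(x, y)
    return m + math.log1p(math.exp(-abs(x - y)))


# The correction above max(x, y) shrinks roughly like e^{-gap}
for gap in (0.0, 1.0, 2.0, 5.0, 10.0):
    z = log_sum_exp2(0.0, gap)
    print(f"gap={gap:5.1f}  Z={z:.6f}  Z - max={z - gap:.2e}")
```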

But this does not help much in representing the combination of both terms at the beginning, when they are comparable. However, the plot of $\ln(1+e^N)$ resembles the comments on another question, so using something similar to a Swish function, or integrating a Sigmoid function, I found that this function can be approximated quite closely by: $$\ln(1+e^N) \approx \frac{N}{1-e^{-\frac{N}{\ln(2)}}}$$

I don't know how good it actually is (I am asking about that in another question), but from the plot I think it is good enough.
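To put a number on "good enough", a quick grid check (a sketch; the grid range and step are arbitrary choices of mine) compares the softplus function with $\frac{N}{1-e^{-N/\ln(2)}}$. Both sides satisfy $g(-N)=g(N)-N$, so the error is the same at $\pm N$.

```python
import math

LN2 = math.log(2.0)


def softplus(n):
    # Numerically stable ln(1 + e^n)
    return max(n, 0.0) + math.log1p(math.exp(-abs(n)))


def softplus_approx(n):
    # N / (1 - e^{-N/ln 2}); its limit at N = 0 is ln 2
    if n == 0.0:
        return LN2
    return n / (-math.expm1(-n / LN2))


grid = [i / 100.0 for i in range(-1000, 1001)]
errors = [abs(softplus(n) - softplus_approx(n)) for n in grid]
worst = max(range(len(grid)), key=lambda i: errors[i])
print(f"max |error| = {errors[worst]:.4f} at N = {grid[worst]:.2f}")
```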

Later I found on Wikipedia that this function is called the softplus function, and that it is related to the LogSumExp function, and hence to the partition function.

Using this approximation, I can split: $$Z = \ln(e^X+e^Y) \approx f(X,Y) = \begin{cases} X+\frac{Y-X}{1-e^{-\frac{(Y-X)}{\ln(2)}}},\quad X \neq Y \\ X+\ln(2), \quad X\equiv Y \end{cases}$$

Ignoring the case $X\equiv Y$, I can also write: $$f(X,Y) = \frac{Xe^{\frac{X}{\ln(2)}}-Ye^{\frac{Y}{\ln(2)}}}{e^{\frac{X}{\ln(2)}}-e^{\frac{Y}{\ln(2)}}} = X \left[\frac{e^{\frac{X}{\ln(2)}}}{e^{\frac{X}{\ln(2)}}-e^{\frac{Y}{\ln(2)}}}\right]+Y\left[\frac{e^{\frac{Y}{\ln(2)}}}{e^{\frac{Y}{\ln(2)}}-e^{\frac{X}{\ln(2)}}}\right]$$
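A sketch checking that the two forms of $f(X,Y)$ agree numerically (function names are hypothetical). The bracketed weights sum to $1$, but the weight on the larger argument always exceeds $1$ and the other is negative. The weighted form overflows once $X/\ln(2)$ or $Y/\ln(2)$ passes about $709$; the factored form avoids that by using the symmetry $f(X,Y)=f(Y,X)$ and pivoting on the smaller argument.

```python
import math

LN2 = math.log(2.0)


def f_factored(x, y):
    # X + (Y - X) / (1 - e^{-(Y - X)/ln 2}); f is symmetric, so pivot on the smaller
    # argument to keep the exponent non-positive. The limit at X == Y is X + ln 2.
    lo, hi = min(x, y), max(x, y)
    d = hi - lo
    if d == 0.0:
        return lo + LN2
    return lo + d / (-math.expm1(-d / LN2))


def f_weighted(x, y):
    # X * w_X + Y * w_Y with the nonlinear weights from the text (requires x != y)
    ex, ey = math.exp(x / LN2), math.exp(y / LN2)  # overflows for arguments above ~490
    wx = ex / (ex - ey)
    wy = ey / (ey - ex)
    return x * wx + y * wy


for x, y in ((0.0, 1.0), (-2.0, 0.5), (3.0, 2.9)):
    print(x, y, f_factored(x, y), f_weighted(x, y))
```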

so when $X$ and $Y$ are of the same order, the combination is driven by highly nonlinear coefficients, and this is where I got stuck.

Does anybody have an idea of how to get a decent approximation of $E[f(X,Y)]$ and $\text{Var}[f(X,Y)]$?
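As a reference for judging any approximation of $E[f(X,Y)]$ and $\text{Var}[f(X,Y)]$, here is a brute-force Monte Carlo sketch. It assumes $(W_{1t},W_{2t})$ jointly normal, so that $X$ and $Y$ (affine in them) are jointly normal; the function name, seed and sample size are my own choices.

```python
import math
import random


def mc_logsumexp_moments(mx, my, sx, sy, rho, n=200_000, seed=0):
    """Monte Carlo estimate of E[Z] and Var[Z] for Z = ln(e^X + e^Y), (X, Y) jointly normal.

    Not an approximation itself: plain sampling with a fixed seed, meant as a baseline.
    """
    rng = random.Random(seed)
    c = math.sqrt(max(0.0, 1.0 - rho * rho))
    total = total_sq = 0.0
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x = mx + sx * z1
        y = my + sy * (rho * z1 + c * z2)  # correlated via a 2x2 Cholesky factor
        z = max(x, y) + math.log1p(math.exp(-abs(x - y)))  # stable ln(e^x + e^y)
        total += z
        total_sq += z * z
    mean = total / n
    var = total_sq / n - mean * mean
    return mean, var


print(mc_logsumexp_moments(0.0, 0.5, 0.4, 0.6, 0.3, n=50_000))
```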

1st Added later

I later realized that, since the sum saturates when one random variable dominates, $\ln(e^X+e^Y)\overset{Y\gg X}{\approx} Y$, I only need to focus on the case when they are comparable in size, so I tried the Taylor expansion: $$\ln(1+e^x) \approx \ln(2)+\frac{x}{2}+\frac{x^2}{8}+\mathcal{O}(x^4)$$

which leads to splitting the sum approximately as: $$\begin{array}{r c l} Z = X+\ln(1+e^{Y-X}) & \approx & X+\ln(2)+\frac{Y-X}{2}+\frac{(Y-X)^2}{8} \\ & = & \underbrace{\ln(2)+\frac{Y+X}{2}}_{\text{1st order approx.}}+\frac{(Y-X)^2}{8} \end{array}$$

So far I have gained some really interesting insight from the first-order approximation, but I think the second-order term is required to get something useful, and I got stuck splitting the $(Y-X)^2$ term, so any help is welcome.
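For the expectation, at least, the $(Y-X)^2$ term does not need further splitting: $E[(Y-X)^2]=\text{Var}[Y-X]+(E[Y-X])^2=\text{Var}[X]+\text{Var}[Y]-2\,\text{Cov}[X,Y]+(E[Y]-E[X])^2$. A minimal sketch with hypothetical helper names, where, with the definitions above, $E[X]=\ln(a_1S_{01})+(\mu_1-\sigma_1^2/2)t$, $\text{Var}[X]=\sigma_1^2t$ and $\text{Cov}[X,Y]=\rho\,\sigma_1\sigma_2 t$ (taking $\rho$ as the correlation coefficient of the Brownian motions):

```python
import math


def log_moments(a, s0, mu, sig, t):
    # X = ln(a S0) + (mu - sig^2/2) t + sig W_t  ->  (E[X], Var[X])
    return math.log(a * s0) + (mu - 0.5 * sig ** 2) * t, sig ** 2 * t


def second_order_mean(mx, my, vx, vy, cxy):
    """E[Z] for the 2nd-order split Z ~= ln 2 + (X + Y)/2 + (Y - X)^2 / 8."""
    # E[(Y - X)^2] = Var[Y - X] + (E[Y - X])^2
    e_d2 = (vx + vy - 2.0 * cxy) + (my - mx) ** 2
    return math.log(2.0) + 0.5 * (mx + my) + e_d2 / 8.0


# Example with GBM parameters (rho is the correlation of W1 and W2)
t, rho = 1.0, 0.3
mx, vx = log_moments(0.5, 100.0, 0.05, 0.2, t)
my, vy = log_moments(0.5, 100.0, 0.08, 0.3, t)
print(second_order_mean(mx, my, vx, vy, rho * 0.2 * 0.3 * t))
```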

2nd Added later

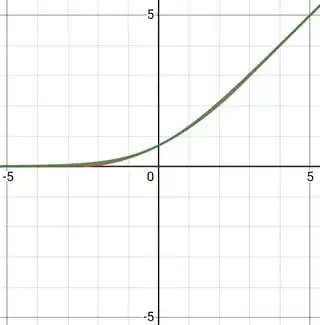

It may be more useful to approximate the function $\ln(1+e^x)$ with a piecewise function:

$$h(x)=\begin{cases} 0,\quad x\leq -\ln(16)\\ \frac{(x+\ln(16))^2}{4\ln(16)} ,\quad -\ln(16)<x<\ln(16)\\ x,\quad x\geq \ln(16)\end{cases}$$

whose plot can be seen in Desmos.

With this, I get a similar approximation: $$Z \approx \ln(2)+\frac{Y+X}{2}+\frac{(Y-X)^2}{4\ln(16)}$$
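A quick check of the piecewise $h(x)$ (a sketch; `h_middle_expanded` is just my name for the expanded middle branch). The middle branch expands to $\ln(2)+\frac{x}{2}+\frac{x^2}{4\ln(16)}$ because $\frac{\ln(16)}{4}=\ln(2)$, and $h$ is continuous with a continuous first derivative at $x=\pm\ln(16)$.

```python
import math

L16 = math.log(16.0)


def h(x):
    """Piecewise quadratic stand-in for ln(1 + e^x) from the text."""
    if x <= -L16:
        return 0.0
    if x >= L16:
        return x
    return (x + L16) ** 2 / (4.0 * L16)


def h_middle_expanded(x):
    # ln 2 + x/2 + x^2 / (4 ln 16), valid for -ln 16 < x < ln 16
    return math.log(2.0) + x / 2.0 + x * x / (4.0 * L16)


for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(x, h(x), math.log1p(math.exp(x)))
```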

So at least the first-order terms of both approximations are the same.

Unfortunately, it leads to the same issue that got me stuck: how to evaluate the variance of something of the form: $$\text{Var}[aX+bY+cXY+dX^2+eY^2]$$
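For the specific quadratic that appears here (not the fully general form), one possible shortcut, assuming $(X,Y)$ jointly normal, is to change variables to $S=\frac{X+Y}{2}$ and $D=Y-X$. With $m=E[D]$ and $s^2=\text{Var}[D]$, one has $\text{Var}[D^2]=2s^4+4m^2s^2$ and, by Stein's lemma, $\text{Cov}[S,D^2]=2m\,\text{Cov}[S,D]$. The sketch below (hypothetical helper names) codes that closed form for $Z\approx\ln(2)+S+kD^2$, with $k=\frac18$ or $k=\frac{1}{4\ln(16)}$, and checks it against sampling.

```python
import math
import random


def quad_approx_var(mx, my, vx, vy, cxy, k=1.0 / 8.0):
    """Var[ln 2 + (X + Y)/2 + k (Y - X)^2] for jointly normal (X, Y), in closed form."""
    m = my - mx                          # E[D]
    s2 = vx + vy - 2.0 * cxy             # Var[D]
    var_s = (vx + vy + 2.0 * cxy) / 4.0  # Var[S]
    cov_sd = (vy - vx) / 2.0             # Cov[S, D]
    var_d2 = 2.0 * s2 * s2 + 4.0 * m * m * s2
    cov_s_d2 = 2.0 * m * cov_sd          # Stein's lemma with g(D) = D^2
    return var_s + k * k * var_d2 + 2.0 * k * cov_s_d2


def quad_approx_var_mc(mx, my, vx, vy, cxy, k=1.0 / 8.0, n=200_000, seed=1):
    # Brute-force check: sample (X, Y) through a 2x2 Cholesky factor (needs |corr| < 1)
    rng = random.Random(seed)
    sx, sy = math.sqrt(vx), math.sqrt(vy)
    rho = cxy / (sx * sy)
    c = math.sqrt(1.0 - rho * rho)
    vals = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x = mx + sx * z1
        y = my + sy * (rho * z1 + c * z2)
        vals.append(math.log(2.0) + 0.5 * (x + y) + k * (y - x) ** 2)
    mean = sum(vals) / n
    return sum((v - mean) ** 2 for v in vals) / (n - 1)
```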

3rd Added later

I asked about the variance of the second-order expansion here, and it looks like, even in the best scenario, it is a nightmare with 20+ terms.

Since the first-order approximation was fruitful for my understanding, and in the end, if one variable grows, this mess of terms will simply behave like the variance of the larger variable, I think there is little to gain by increasing the complexity of the analysis. With this I close the question.

4th Added later

I tried to simplify the approximation for splitting $\ln(e^x+e^y)$ but didn't succeed: it is too nonlinear. I tried here (1), here (2), and here (3), but couldn't find any simpler approximation. The best I could do was to approximate $\frac{1}{\ln(2)}\approx \frac32$, as suggested here by @ClaudeLeibovici, to avoid the fact that $x^{\ln(2)}\equiv 2^{\ln(x)}$, which made logarithms pop up everywhere.

My best shot: $$\ln(e^x+e^y) \approx \frac{xe^{\frac{x}{\ln(2)}}-ye^{\frac{y}{\ln(2)}}}{e^{\frac{x}{\ln(2)}}-e^{\frac{y}{\ln(2)}}}\approx \frac{xe^{\frac{3x}{2}}-ye^{\frac{3y}{2}}}{e^{\frac{3x}{2}}-e^{\frac{3y}{2}}}$$
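A sketch of the same weighted form with a general rate $k$ in place of $\frac{1}{\ln(2)}$ (the name `weighted_form` and the grid are my own choices). Letting $y\to x$ gives $x+\frac1k$, so $k=\frac{1}{\ln(2)}$ reproduces $\ln(2e^x)=x+\ln(2)$ exactly on the diagonal, while $k=\frac32$ gives $x+\frac23$, an offset of about $0.026$.

```python
import math


def weighted_form(x, y, k):
    """(x e^{kx} - y e^{ky}) / (e^{kx} - e^{ky}); tends to x + 1/k as y -> x."""
    if x == y:
        return x + 1.0 / k
    # Factor out e^{k * max(x, y)} so both exponentials stay in [0, 1]
    m = max(x, y)
    ex, ey = math.exp(k * (x - m)), math.exp(k * (y - m))
    return (x * ex - y * ey) / (ex - ey)


def exact(x, y):
    m = max(x, y)
    return m + math.log1p(math.exp(-abs(x - y)))


grid = [(0.0, d / 20.0) for d in range(-200, 201)]
for label, k in (("1/ln 2", 1.0 / math.log(2.0)), ("3/2", 1.5)):
    err = max(abs(weighted_form(x, y, k) - exact(x, y)) for x, y in grid)
    print(f"k = {label:6s}  max |error| for |y - x| <= 10: {err:.4f}")
```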

but it is too complicated for me to do anything more with it.

Fortunately, I recently found a different approximation: $$\ln(1+e^x) \approx \frac{x}{2}+\frac{1}{2}\sqrt{x^2+(2\ln(2))^2}$$

which leads to a more intuitive approximation: $$\ln(e^x+e^y)\approx \frac{x+y}{2} +\frac12\sqrt{|x-y|^2+(2\ln(2))^2}$$ which works pretty decently, at least from what I see in Desmos 3D. Moreover, for large differences $|x-y|\gg 2\ln(2)\approx 1.39$ one recovers the behavior mentioned above: $$\ln(e^x+e^y)\approx \frac{x+y}{2}+\frac{|x-y|}{2}=\begin{cases} x,\quad x>y,\quad |x-y|\gtrsim 2.5\\ y,\quad y>x,\quad |x-y|\gtrsim 2.5\end{cases}$$
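A numerical sketch comparing the smooth-maximum form with the exact value and with $\max\{x,y\}$ (names are mine). Both expressions shift by $c$ when $x$ and $y$ shift by $c$, so only the gap $|x-y|$ matters, and fixing $x=0$ loses nothing.

```python
import math

C = 2.0 * math.log(2.0)


def smooth_max_unit(x, y):
    # (x + y)/2 + (1/2) sqrt((x - y)^2 + (2 ln 2)^2)
    return 0.5 * (x + y) + 0.5 * math.hypot(x - y, C)


def log_sum_exp2(x, y):
    m = max(x, y)
    return m + math.log1p(math.exp(-abs(x - y)))


for d in (0.0, 1.0, 2.5, 5.0, 10.0):
    approx, exact = smooth_max_unit(0.0, d), log_sum_exp2(0.0, d)
    print(f"|x-y|={d:4.1f}  approx={approx:.4f}  exact={exact:.4f}  max={d:.4f}")
```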

Now I need to figure out how to smartly handle the expected value and variance of the term under the square root, because if I just use a series expansion I end up with the same issues as before:

$$\frac12\sqrt{|x-y|^2+(2\ln(2))^2}\approx \ln(2)+\frac{(x-y)^2}{\ln(256)}+\mathit{O}\left((x-y)^4\right)$$

Later I found on Wikipedia that this last approximation is called the smooth maximum unit.

Here is a Desmos 3D plot comparing each alternative expansion: at first sight, the $4\ln(16)$ denominator seems to fit over a wider range but initially underestimates the target function, while the denominator $8$ always overestimates it but stays close for longer than the $\ln(256)$ denominator.
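To quantify this comparison, a small sketch (names are mine) prints the error of $\ln(2)+\frac{x}{2}+\frac{x^2}{q}$ for the three denominators $q\in\{8,\ 4\ln(16),\ \ln(256)\}$; a positive value means overestimation. Near $x=0$ the error of the $q=8$ version is about $+\frac{x^4}{192}$, consistent with it overestimating. The errors are even in $x$, so only $x\geq 0$ is shown.

```python
import math


def softplus(x):
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


DENOMINATORS = {
    "8 (Taylor)": 8.0,
    "4 ln 16 (piecewise h)": 4.0 * math.log(16.0),
    "ln 256 (smooth max unit)": math.log(256.0),
}


def quad(x, denom):
    # ln 2 + x/2 + x^2 / denom
    return math.log(2.0) + 0.5 * x + x * x / denom


for x in (0.5, 1.0, 2.0, 3.0):
    row = ", ".join(f"{name}: {quad(x, q) - softplus(x):+.4f}" for name, q in DENOMINATORS.items())
    print(f"x={x}: {row}")
```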

Reminder to myself

Properties I must keep in mind:

- If $X\sim \text{Normal}$ then $E[e^X]=e^{E[X]+\frac12 V[X]}$

- Isserlis's theorem: If $X\sim \text{Normal}$ with $E[X]=0$, then $E[e^{-iX}]=e^{-\frac12 E[X^2]}$

- Stein's lemma: If $X \sim N(\mu,\sigma^2)$ then $E[g(X)(X-\mu)]=\sigma^2 E[g'(X)]$
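A Monte Carlo sanity check of these reminders (a sketch; the parameter values and function name are arbitrary choices of mine). One caveat: the characteristic-function identity holds as $E[e^{-iX}]=e^{-\frac12E[X^2]}$ only when $E[X]=0$; for $X\sim N(\mu,\sigma^2)$ it reads $E[e^{-iX}]=e^{-i\mu-\frac12\sigma^2}$.

```python
import cmath
import math
import random


def check_gaussian_identities(mu=0.3, sigma=0.8, n=200_000, seed=2):
    """Return {identity: (Monte Carlo value, closed-form value)} for X ~ N(mu, sigma^2)."""
    rng = random.Random(seed)
    xs = [rng.gauss(mu, sigma) for _ in range(n)]
    mc_exp = sum(math.exp(x) for x in xs) / n
    mc_char = sum(cmath.exp(-1j * x) for x in xs) / n
    # Stein's lemma with g = sin: E[sin(X)(X - mu)] = sigma^2 E[cos(X)]
    stein_lhs = sum(math.sin(x) * (x - mu) for x in xs) / n
    stein_rhs = sigma ** 2 * sum(math.cos(x) for x in xs) / n
    return {
        "E[e^X]": (mc_exp, math.exp(mu + 0.5 * sigma ** 2)),
        "E[e^{-iX}]": (mc_char, cmath.exp(-1j * mu - 0.5 * sigma ** 2)),
        "Stein": (stein_lhs, stein_rhs),
    }


for name, (mc, closed) in check_gaussian_identities().items():
    print(f"{name:11s} MC={mc:.4f}  closed form={closed:.4f}")
```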

5th Added later

The 2nd-order Taylor expansion shows which terms the approximations involve: $$Z = \ln(e^X+e^Y) \overset{\text{2nd order Taylor's}}{\approx} \ln(2)+\frac{Y+X}{2}+\frac{(Y-X)^2}{8}$$ so the expected value only requires finding the terms: $$E[Z] \approx \ln(2)+\frac{E[Y]+E[X]}{2}+\frac{E[(Y-X)^2]}{8}$$

I believe I found a way to considerably improve this approximation using the same terms: I use the classic small-angle approximation for the cosine, $\cos(x)\approx 1-\frac{x^2}{2}$, but in reverse (replacing the quadratic with a cosine instead of simplifying the cosine), and then apply the Isserlis's theorem example I found on Wikipedia:

$$\begin{array}{r c l} Z = \ln(e^X+e^Y) & \approx & \ln(2)+\frac{Y+X}{2}+\frac{(Y-X)^2}{8}\pm 1 \\ & = & 1+\ln(2)+\frac{Y+X}{2}-\underbrace{\left[1-\frac12 \left(\frac{|Y-X|}{2}\right)^2\right]}_{\text{small angle approximation in reverse}}\\ & \approx & 1+\ln(2)+\frac{Y+X}{2}-\underbrace{\cos\left(\frac{|Y-X|}{2}\right)}_{\text{cosine is an even function}}\\ & = & 1+\ln(2)+\frac{Y+X}{2}-\underbrace{\cos\left(\frac{Y-X}{2}\right)}_{\text{complex-valued expansion}}\\ \Rightarrow Z = \ln(e^X+e^Y) & \approx & \begin{cases} 1+\ln(2)+\frac{Y+X}{2}-\frac12\left[e^{-i\frac{(Y-X)}{2}}+e^{-i\frac{(X-Y)}{2}}\right],\quad \frac{(Y-X)^2}{8} \lesssim 3 \\ \max\{X,Y\},\quad \text{otherwise}\end{cases} \end{array}$$

and since, when the variables are very different, I simply have:

$$E[Z] \approx \begin{cases} E[X],\quad (X>Y)\wedge \left(\frac{(Y-X)^2}{8} > 3\right) \\ E[Y],\quad (Y>X)\wedge \left(\frac{(Y-X)^2}{8} > 3\right) \end{cases}$$

What I really care about is computing accurately when $\frac{(Y-X)^2}{8} \leq 3$; there, applying the expected value together with Isserlis's theorem leads to:

$$\begin{array}{r c l} E[Z] = E\left[\ln\left(e^X+e^Y\right)\right]\Biggr|_{\frac{(Y-X)^2}{8} \leq 3} & \approx & 1+\ln(2)+\frac{E[X]+E[Y]}{2}-\frac12\left[e^{-\frac12 E\left[\left(\frac{Y-X}{2}\right)^2\right]}+e^{-\frac12 E\left[\left(\frac{X-Y}{2}\right)^2\right]}\right]\\ & = & 1+\ln(2)+\frac{E[X]+E[Y]}{2}-\frac12\left[\underbrace{e^{-\frac18 E\left[\left(Y-X\right)^2\right]}+e^{-\frac18 E\left[\left(Y-X\right)^2\right]}}_{\text{identicals}}\right]\end{array}$$

$$\Rightarrow \boxed{E[Z]\approx 1+\ln(2)+\frac{E[X]+E[Y]}{2}-e^{-\frac18 E\left[\left(Y-X\right)^2\right]}},\quad \frac{(Y-X)^2}{8} \leq 3$$
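A sketch comparing the boxed formula with a Monte Carlo estimate of $E[\ln(e^X+e^Y)]$ (all names hypothetical). It also includes a variant that is not part of the derivation above and does not assume $E[Y-X]=0$: for $D\sim N(m,s^2)$, $E\left[\cos\frac{D}{2}\right]=\cos\frac{m}{2}\,e^{-s^2/8}$, which coincides with the boxed term when $m=0$. The sampling does not impose the condition $\frac{(Y-X)^2}{8}\leq 3$, which almost always holds for the small variances used here.

```python
import math
import random


def boxed_mean(mx, my, vx, vy, cxy):
    # 1 + ln 2 + (E[X] + E[Y])/2 - exp(-E[(Y - X)^2] / 8)
    e_d2 = vx + vy - 2.0 * cxy + (my - mx) ** 2
    return 1.0 + math.log(2.0) + 0.5 * (mx + my) - math.exp(-e_d2 / 8.0)


def cosine_mean_exact(mx, my, vx, vy, cxy):
    # Same cosine surrogate with E[cos(D/2)] = cos(m/2) exp(-s^2/8), D ~ N(m, s^2)
    m, s2 = my - mx, vx + vy - 2.0 * cxy
    return 1.0 + math.log(2.0) + 0.5 * (mx + my) - math.cos(0.5 * m) * math.exp(-s2 / 8.0)


def mc_mean(mx, my, vx, vy, cxy, n=200_000, seed=3):
    rng = random.Random(seed)
    sx, sy = math.sqrt(vx), math.sqrt(vy)
    rho = cxy / (sx * sy) if sx > 0 and sy > 0 else 0.0
    c = math.sqrt(max(0.0, 1.0 - rho * rho))
    total = 0.0
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x, y = mx + sx * z1, my + sy * (rho * z1 + c * z2)
        total += max(x, y) + math.log1p(math.exp(-abs(x - y)))
    return total / n


for mx, my in ((0.0, 0.0), (0.0, 1.0), (0.0, 2.0)):
    args = (mx, my, 0.25, 0.25, 0.0)
    print(mx, my, boxed_mean(*args), cosine_mean_exact(*args), mc_mean(*args, n=50_000))
```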

By construction, this approximation is as good as the Taylor expansion when $X\approx Y$, and it works even better than all the previous quadratic approximations as $X$ and $Y$ split apart, while requiring exactly the same terms as the quadratic approximations. So I extended the range over which the nonlinear behavior is represented without any extra computational effort, which is very nice; their behavior can be seen in this Desmos 3D plot.

Note that the bound $\frac{(Y-X)^2}{8}<3$ fits quite well, since it comes from $\ln(e^X+e^Y)=X+\ln(1+e^{Y-X})$ together with:

$$1+\ln(2)+\frac{Y+X}{2}-\cos\left(\frac{Y-X}{2}\right) = 1+\ln(2)+X+\frac{Y-X}{2}-\cos\left(\frac{Y-X}{2}\right)$$

Matching both expressions and setting $D = Y-X$, I get:

$$\require{cancel}\cancel{X}+\ln(1+e^{D})=1+\ln(2)+\cancel{X}+\frac{D}{2}-\cos\left(\frac{D}{2}\right)\Rightarrow D\approx\pm 4.93 $$

which can be solved numerically (e.g., in Wolfram-Alpha); at this root $\frac{D^2}{8}\approx 3.04$, consistent with the bound above.
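A sketch for solving the matching equation numerically by bisection (function names are mine). Near $D=0$ the cosine surrogate exceeds the exact value by roughly $\frac{D^4}{384}$; both sides differ from $\frac{D}{2}$ by even functions of $D$, so the crossing is symmetric in $\pm D$. By my own evaluation of the equation as written, the positive crossing lands near $D\approx 4.93$, i.e. $\frac{D^2}{8}\approx 3$, which matches the bound $\frac{(Y-X)^2}{8}<3$.

```python
import math


def gap(d):
    # Cosine surrogate minus exact value, both with X cancelled (d = Y - X)
    exact = max(d, 0.0) + math.log1p(math.exp(-abs(d)))  # ln(1 + e^d)
    surrogate = 1.0 + math.log(2.0) + 0.5 * d - math.cos(0.5 * d)
    return surrogate - exact


def bisect(fn, lo, hi, tol=1e-12):
    f_lo = fn(lo)
    if f_lo * fn(hi) > 0:
        raise ValueError("interval does not bracket a root")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = fn(mid)
        if f_lo * f_mid <= 0:
            hi = mid  # sign change in [lo, mid]
        else:
            lo, f_lo = mid, f_mid  # sign change in [mid, hi]
    return 0.5 * (lo + hi)


root = bisect(gap, 3.0, 6.0)
print(f"D ~ +/-{root:.4f},  D^2/8 ~ {root ** 2 / 8:.3f}")
```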