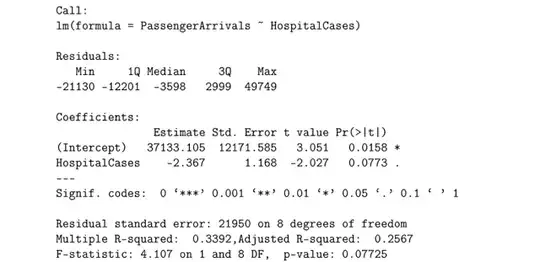

If I have the least squares estimate, can I derive either $s_{xx}$ or $s_{xy}$ from it? For example, for the linear model shown above, the slope estimate satisfies

$$\hat{\beta}_1 = \frac{s_{xy}}{s_{xx}} = -2.367,$$

but without knowing either $s_{xx}$ or $s_{xy}$, I cannot see how to recover them.
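To illustrate why the slope alone does not pin down $s_{xx}$ or $s_{xy}$, here is a minimal Python sketch on two small made-up data sets (hypothetical, not the data behind the model in the question). The helper name `slope_components` is also just for illustration. Both data sets give the same $\hat{\beta}_1$ but different $s_{xx}$ and $s_{xy}$.

```python
def slope_components(x, y):
    """Return (s_xx, s_xy, beta1_hat) for a simple least squares fit of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Corrected sum of squares of x and corrected sum of cross-products
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return s_xx, s_xy, s_xy / s_xx


# Two hypothetical data sets with the same fitted slope
print(slope_components([1, 2, 3], [2, 0, -2]))          # (2.0, -4.0, -2.0)
print(slope_components([0, 2, 4, 6], [4, 0, -4, -8]))   # (20.0, -40.0, -2.0)
```

Only the ratio is determined by the estimate: any pair with $s_{xy} = -2.367\,s_{xx}$ reproduces $\hat{\beta}_1 = -2.367$.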

I am testing for the hypothesis that $$\begin{align}H_0&:\beta_1 = -4 \\ H_1&: \beta_1 \ne -4 \end{align}$$

using the test statistic $$T = \frac{\hat{\beta}_i-\beta_{i0}}{S\sqrt{c_{ii}}},$$

where $c_{11} = \frac{1}{s_{xx}}$. However, I cannot obtain $s_{xx}$ from the model output shown.
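The test statistic depends on $s_{xx}$ through $c_{11}$; the denominator $S\sqrt{c_{11}}$ is the estimated standard error of $\hat{\beta}_1$. A minimal Python sketch, assuming $c_{11} = 1/s_{xx}$ as stated and using the question's $\hat{\beta}_1 = -2.367$, $\beta_{10} = -4$ and $S = 1.168$. The function name `t_statistic` and the $s_{xx}$ values in the loop are hypothetical, since the real $s_{xx}$ is exactly what is unknown here.

```python
import math


def t_statistic(beta_hat, beta_null, s, s_xx):
    """T = (beta_hat - beta_null) / (S * sqrt(c11)) with c11 = 1 / s_xx."""
    c11 = 1.0 / s_xx
    return (beta_hat - beta_null) / (s * math.sqrt(c11))


# Figures from the question; the s_xx values are hypothetical placeholders
for s_xx in (1.0, 4.0, 25.0):
    print(s_xx, round(t_statistic(-2.367, -4.0, 1.168, s_xx), 3))
```

Since $T$ scales with $\sqrt{s_{xx}}$, setting $s_{xx} = 1$ (so $c_{11} = 1$) is exactly what leaving $\sqrt{c_{11}}$ out of the denominator amounts to.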

Additional:

$$T = \frac{\hat{\beta}_i-\beta_{i0}}{S\sqrt{c_{ii}}} = \frac{-2.367-(-4)}{1.168\sqrt{c_{11}}}$$

Leaving out $\sqrt{c_{11}}$, I get $t = 1.398$. With $t_{8,\,0.025} = 2.306$, this means I would fail to reject the null hypothesis that $\beta_1 = -4$ at the 5% level. However, I am unsure whether I can drop $\sqrt{c_{11}}$ from the calculation.
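The arithmetic and decision rule in the last paragraph can be checked with a short Python sketch. It uses only the numbers quoted in the question; the function name `two_sided_t_test` is hypothetical, and `std_error` stands for the full denominator $S\sqrt{c_{11}}$, so passing $S = 1.168$ alone reproduces the version without $\sqrt{c_{11}}$.

```python
T_CRIT = 2.306  # t_{8, 0.025}, as quoted in the question


def two_sided_t_test(beta_hat, beta_null, std_error, t_crit=T_CRIT):
    """Return (t, reject) for H0: beta = beta_null vs H1: beta != beta_null."""
    t = (beta_hat - beta_null) / std_error
    return t, abs(t) > t_crit


# Denominator S alone, i.e. sqrt(c11) left out (equivalent to assuming s_xx = 1)
t, reject = two_sided_t_test(-2.367, -4.0, 1.168)
print(f"t = {t:.3f}, reject H0: {reject}")  # t = 1.398, reject H0: False
```

With the full denominator, the value of $t$ would be multiplied by $\sqrt{s_{xx}}$, so the conclusion could change once $s_{xx}$ is known.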