George has defined the processes $[X]$ and $[X,Y]$ for semimartingales $X, Y$ so that $[X] = [X,X]$ and the a.s. integration-by-parts formula $$

XY = X_0Y_0 + \int X_- dY + \int Y_- dX + [X,Y]

$$

holds. He then proves Theorem 1, which states that $[X,Y]^{P_n} \to [X,Y]$ in the semimartingale topology as $n \to \infty$, whenever $P_n$ is a sequence of stochastic partitions whose mesh goes to zero in probability. The definition of $[X,Y]^{P_n}$ is equation $(4)$ of the text.

It is therefore worth working through the proof of Theorem 1 in detail.

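We begin with a stochastic partition $P = \{0 = \tau_0 \leq \tau_1 \leq \tau_2 \leq \cdots \uparrow \infty\}$ of stopping times and an arbitrary $t > 0$. By polarization, $4[X,Y] = [X+Y] - [X-Y]$: this follows from the bilinearity and symmetry of $[\cdot,\cdot]$, which come from its integration-by-parts definition, and the same identity holds for $[\cdot,\cdot]^P$ because $4ab = (a+b)^2 - (a-b)^2$ term by term. Since convergence in the semimartingale topology is preserved under linear combinations, it suffices to prove that $[X]^{P_k} \to [X]$ in the semimartingale topology for every semimartingale $X$ and every sequence of partitions $P_k$ whose mesh converges to zero in probability.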
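The discrete half of the polarization step is pure algebra. A minimal sketch checking $4[X,Y]^P = [X+Y]^P - [X-Y]^P$ with exact rational arithmetic, assuming paths sampled at integer grid times and a deterministic partition given as a list of grid indices (the helper names `increments` and `bracket` are illustrative, not from the text):

```python
from fractions import Fraction

def increments(path, partition):
    """Increments of the path over consecutive partition points."""
    return [path[b] - path[a] for a, b in zip(partition, partition[1:])]

def bracket(x, y, partition):
    """Discrete [X,Y]^P: sum of products of partition increments."""
    return sum(dx * dy for dx, dy in zip(increments(x, partition), increments(y, partition)))

x = [Fraction(v) for v in (0, 2, -1, 4, 3)]
y = [Fraction(v) for v in (1, 1, 5, -2, 0)]
P = [0, 1, 3, 4]                      # partition points as grid indices
xpy = [a + b for a, b in zip(x, y)]   # X + Y
xmy = [a - b for a, b in zip(x, y)]   # X - Y

lhs = 4 * bracket(x, y, P)
rhs = bracket(xpy, xpy, P) - bracket(xmy, xmy, P)
print(lhs, rhs)
```

The identity holds for every partition because it holds increment by increment.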

The idea of the proof is to use stochastic calculus to express $[X]_t^P$ in terms of a stochastic integral of an appropriate process. To identify that process, we write down the definition of $[X]_t^P$:

$$

[X]_t^P = \sum_{n=1}^\infty (X_{\tau_n \wedge t} - X_{\tau_{n-1} \wedge t})^2

$$
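A minimal sketch of $[X]^P_t$ for a path observed on an integer time grid, assuming deterministic partition points stand in for the stopping times $\tau_n$ and that the last partition point is at least $t$, so the finite list plays the role of $\tau_n \uparrow \infty$ (`discrete_bracket` is a hypothetical helper name):

```python
def discrete_bracket(path, partition, t):
    """Compute sum_n (X_{tau_n ^ t} - X_{tau_{n-1} ^ t})^2.

    path[i] is the value of X at grid time i; partition is an increasing
    list of grid times starting at 0 whose last entry is >= t.
    """
    total = 0
    for prev, cur in zip(partition, partition[1:]):
        # Stop both endpoints at t, as in the definition of [X]^P_t.
        a, b = min(prev, t), min(cur, t)
        total += (path[b] - path[a]) ** 2
    return total

path = [0, 1, 3, 2, 5]
print(discrete_bracket(path, [0, 2, 4], 3))        # coarse partition, stopped at t = 3
print(discrete_bracket(path, [0, 1, 2, 3, 4], 4))  # finest partition of the grid
```

Terms with $\tau_{n-1} \geq t$ contribute zero, which is why the infinite sum in the definition is effectively finite.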

Writing $\delta X_n = X_{\tau_n \wedge t} - X_{\tau_{n-1} \wedge t}$, the sum above involves $(\delta X_n)^2$, which we expand not as $(a-b)^2 = a^2 + b^2 - 2ab$ but as $(a-b)^2 = a^2 - b^2 - 2b(a-b)$: $$

(\delta X_n)^2 = X_{\tau_n \wedge t}^2 - X_{\tau_{n-1} \wedge t}^2 - 2 X_{\tau_{n-1} \wedge t}(\delta X_n) \tag{1}

$$
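Summing $(1)$ over consecutive samples telescopes to $\sum_n (\delta X_n)^2 = X_N^2 - X_0^2 - 2\sum_n X_{n-1}\,\delta X_n$, a discrete counterpart of the integration-by-parts identity used next. A quick exact check with rational numbers (the function name is hypothetical):

```python
from fractions import Fraction

def check_square_decomposition(values):
    """Sum identity (1) over consecutive samples X_0, ..., X_N.

    Returns (sum of squared increments, X_N^2 - X_0^2 - 2 * sum X_{n-1} dX_n).
    """
    pairs = list(zip(values, values[1:]))
    lhs = sum((b - a) ** 2 for a, b in pairs)
    cross = sum(a * (b - a) for a, b in pairs)   # left-point "integral" of X against X
    rhs = values[-1] ** 2 - values[0] ** 2 - 2 * cross
    return lhs, rhs

lhs, rhs = check_square_decomposition([Fraction(3), Fraction(-1), Fraction(1, 2), Fraction(4)])
print(lhs, rhs)
```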

The point of this decomposition is that the increment $\delta X_n$ in the last term lets us bring in a stochastic integral. First, though, we handle the other two terms, which is where $[X]$ enters: setting $Y = X$ in the integration-by-parts formula gives $$

X_t^2 = [X]_t + X_0^2 + 2\int_{0}^t X_{s-}dX_s \tag{D}

$$

Applying $(D)$ at the times $\tau_n \wedge t$ and $\tau_{n-1} \wedge t$ and subtracting, we get $$

X_{\tau_n \wedge t}^2 - X_{\tau_{n-1} \wedge t}^2 = [X]_{\tau_n \wedge t} - [X]_{\tau_{n-1} \wedge t} +2\int_0^t X_{s-} 1_{\tau_{n-1} < s \leq \tau_n} dX_s \tag{Q}

$$
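The subtraction uses the standard fact that stopping a stochastic integral at a stopping time $\tau$ multiplies the integrand by $1_{s \leq \tau}$. Spelled out, using $\tau_{n-1} \leq \tau_n$:

$$

\int_0^{\tau_n\wedge t} X_{s-}\,dX_s - \int_0^{\tau_{n-1}\wedge t} X_{s-}\,dX_s
= \int_0^t X_{s-}\left(1_{s\leq \tau_n} - 1_{s \leq \tau_{n-1}}\right) dX_s
= \int_0^t X_{s-}\,1_{\tau_{n-1} < s \leq \tau_n}\,dX_s .

$$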

Next, we want to express $2 X_{\tau_{n-1} \wedge t}\,\delta X_n$ as a stochastic integral with respect to $X$. From the definition of elementary processes, the process $Z_s = 2X_{\tau_{n-1}} 1_{\tau_{n-1} < s \leq \tau_n}$ is elementary and satisfies $\int_0^t Z_s\,dX_s = 2 X_{\tau_{n-1} \wedge t}\,\delta X_n$ a.s. (both sides vanish when $\tau_{n-1} \geq t$). Substituting this and $(Q)$ into $(1)$ gives $$

(\delta X_n)^2= [X]_{\tau_n \wedge t} - [X]_{\tau_{n-1} \wedge t} +2\int_0^t (X_{s-} - X_{\tau_{n-1}}) 1_{\tau_{n-1} < s \leq \tau_n} dX_s\tag{$E_n$}

$$
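On a time grid, $(E_n)$ has an exact discrete analogue: for fine samples $Y_0, \ldots, Y_k$ between $\tau_{n-1}$ and $\tau_n$, one has $(Y_k - Y_0)^2 = \sum_j (\Delta Y_j)^2 + 2\sum_j (Y_{j-1} - Y_0)\,\Delta Y_j$. In this analogy, which is an illustration and not the continuous-time statement, the fine sum of squares plays the role of $[X]_{\tau_n\wedge t} - [X]_{\tau_{n-1}\wedge t}$ and the left-point sum that of the stochastic integral (`interval_identity` is a hypothetical name):

```python
from fractions import Fraction

def interval_identity(samples):
    """Discrete analogue of (E_n) on one partition interval.

    samples = [Y_0, ..., Y_k] are fine-grid values of X from tau_{n-1} to tau_n.
    Returns both sides of (Y_k - Y_0)^2 = sum dY^2 + 2 * sum (Y_{j-1} - Y_0) dY_j.
    """
    pairs = list(zip(samples, samples[1:]))
    lhs = (samples[-1] - samples[0]) ** 2
    fine_qv = sum((b - a) ** 2 for a, b in pairs)                 # stand-in for the [X] increment
    integral = sum((a - samples[0]) * (b - a) for a, b in pairs)  # integrand X_{s-} - X_{tau_{n-1}}
    return lhs, fine_qv + 2 * integral

lhs, rhs = interval_identity([Fraction(1), Fraction(4), Fraction(2), Fraction(5, 2)])
print(lhs, rhs)
```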

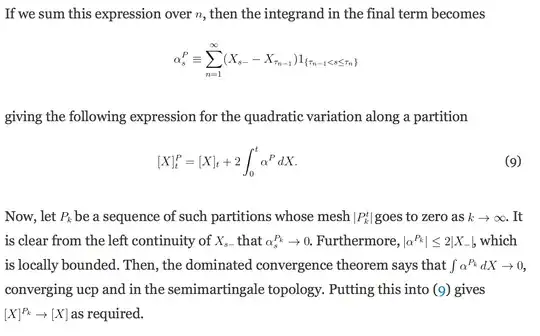

Now sum $(E_n)$ over $n = 1, 2, \ldots$ (we justify below that this gives a well-defined identity between stochastic processes). The left-hand side becomes $\sum_{n=1}^\infty (\delta X_n)^2$, which equals $[X]_t^P$ by definition. The first two terms on the right telescope: since $\tau_n \uparrow \infty$ a.s., for almost every $\omega$ only finitely many $n$ satisfy $\tau_{n-1} < t$ and every later term vanishes, so $\sum_{n=1}^\infty ([X]_{\tau_n \wedge t} - [X]_{\tau_{n-1} \wedge t}) = [X]_t - [X]_0 = [X]_t$, using $\tau_0 = 0$ and $[X]_0 = 0$ (take $t = 0$ in the integration-by-parts formula).

What about the stochastic integrals? If we can interchange the infinite sum with the integral, the whole term becomes a single stochastic integral of one process, and we obtain an identity to work with.

To justify the interchange we need one fact: every locally bounded predictable process is $X$-integrable (Lemma 5). As a corollary, the left-limit process of an adapted cadlag process is $X$-integrable, because it is adapted and caglad (left-continuous with right limits), and adapted caglad processes are predictable and locally bounded.

We remark that George's proof may be flawed at this point, and we suggest a more rigorous argument. Consider the running supremum $X^*_t = \sup_{s \leq t} |X_s|$. Since $X$ is adapted and cadlag, so is $X^*$, and therefore its left-limit process $X^*_{s-} = \sup_{r < s} |X_r|$ is $X$-integrable by the fact just stated. For each $s > 0$, at most one indicator $1_{\tau_{m-1} < s \leq \tau_m}$ is nonzero, and for that $m$ we have $|X_{\tau_{m-1}}| \leq X^*_{s-}$ because $\tau_{m-1} < s$. Hence, almost surely, $$

\left|\sum_{m=1}^n (X_{s-} - X_{\tau_{m-1}}) 1_{\tau_{m-1} < s \leq \tau_m} \right| \leq |X_{s-}| + X^*_{s-}

$$
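A small numerical check of this domination bound on a grid, as a sketch under stated assumptions: an integer random walk replaces the semimartingale, fine step $i$ covers $(i-1, i]$ so that $X_{i-1}$ plays the role of $X_{s-}$, and a random deterministic partition containing $0$ and the endpoint replaces the stopping times. The helper names `alpha_on_grid` and `dominated` are hypothetical:

```python
import bisect
import random

def alpha_on_grid(path, partition):
    """Discrete alpha^P: for fine step i, covering (i-1, i], return
    X_{i-1} - X_tau, where tau is the last partition point <= i-1."""
    out = []
    for i in range(1, len(path)):
        k = bisect.bisect_right(partition, i - 1) - 1   # partition[0] == 0, so k >= 0
        out.append(path[i - 1] - path[partition[k]])
    return out

def dominated(path, partition):
    """Check |alpha_i| <= |X_{i-1}| + max_{j <= i-1} |X_j| at every fine step."""
    running_max = 0
    for i, a in enumerate(alpha_on_grid(path, partition), start=1):
        running_max = max(running_max, abs(path[i - 1]))  # grid version of X*_{s-}
        if abs(a) > abs(path[i - 1]) + running_max:
            return False
    return True

rng = random.Random(0)
path = [0]
for _ in range(500):
    path.append(path[-1] + rng.choice((-1, 1)))            # integer random walk
partition = [0] + sorted(rng.sample(range(1, 500), 40)) + [500]
print(dominated(path, partition))
```

Integers keep the comparison exact, so the check reflects the inequality itself rather than rounding.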

for all partitions $P$, all $n$ and all $s$, and the right-hand side is an $X$-integrable process. It remains to invoke dominated convergence. The first point is that the partial sums $$

\sum_{m=1}^n (X_{s-} - X_{\tau_{m-1}}) 1_{\tau_{m-1} < s \leq \tau_m} \to \sum_{m=1}^\infty (X_{s-} - X_{\tau_{m-1}}) 1_{\tau_{m-1} < s \leq \tau_m} = \alpha_s^P

$$

converge as $n \to \infty$ for each fixed $s$, almost surely and hence in probability. Indeed, their difference is nonzero only if $\tau_n < s$, and since $\tau_n \to \infty$ a.s., for almost every $\omega$ the partial sums are eventually equal to $\alpha_s^P$. Each partial sum is predictable, hence so is the limit $\alpha^P$. By dominated convergence, it follows that $$

\int_{0}^t \sum_{m=1}^n (X_{s-} - X_{\tau_{m-1}}) 1_{\tau_{m-1} < s \leq \tau_m}dX_s \to \int_0^t\sum_{m=1}^\infty (X_{s-} - X_{\tau_{m-1}}) 1_{\tau_{m-1} < s \leq \tau_m} dX_s = \int_0^t \alpha_s^P dX_s

$$

and summing $(E_n)$ over all $n$ therefore yields the identity $$

[X]_t^P = [X]_t + 2\int_0^t \alpha_s^P dX_s

$$
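On a grid the summed identity is exact: the coarse sum of squares equals the fine-grid sum of squares plus twice a left-point sum of $\alpha$ against the increments, the factor $2$ coming from $(E_n)$. A sketch under the same hypothetical setup as a discrete analogue (integer random walk, deterministic partition containing $0$ and the final grid index, so $t$ is the last grid time; all helper names are illustrative):

```python
import bisect
import random

def coarse_bracket(path, partition):
    """[X]^P on the grid: squared increments over the partition intervals."""
    return sum((path[b] - path[a]) ** 2 for a, b in zip(partition, partition[1:]))

def fine_bracket(path):
    """Stand-in for [X]: squared increments over every grid step."""
    return sum((b - a) ** 2 for a, b in zip(path, path[1:]))

def alpha_integral(path, partition):
    """Left-point sum of alpha_i * (X_i - X_{i-1}), the grid analogue of int alpha^P dX."""
    total = 0
    for i in range(1, len(path)):
        k = bisect.bisect_right(partition, i - 1) - 1   # index of tau_{m-1} <= i-1 < tau_m
        total += (path[i - 1] - path[partition[k]]) * (path[i] - path[i - 1])
    return total

rng = random.Random(1)
path = [0]
for _ in range(300):
    path.append(path[-1] + rng.randint(-3, 3))
partition = [0] + sorted(rng.sample(range(1, 300), 25)) + [300]
print(coarse_bracket(path, partition) == fine_bracket(path) + 2 * alpha_integral(path, partition))
```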

Now let $P_k$ be a sequence of partitions whose mesh $|P_k|$ tends to $0$ almost surely. We claim that $\alpha_s^{P_k} \to 0$ almost surely for every $s$. To prove this, we show that for every $\omega$ with $|P_k(\omega)| \to 0$ we have $\alpha_s^{P_k}(\omega) \to 0$ for all $s$.

Fix such an $\omega$ and write $|P_k|$ for the mesh of $P_k$. If $\tau_{m-1} < s \leq \tau_m$ then $0 < s - \tau_{m-1} \leq |P_k|$, so $|\alpha^{P_k}_s| \leq \sup_{0 < \epsilon \leq |P_k| \wedge s} |X_{s-} - X_{s-\epsilon}|$ for all $s > 0$ (and $\alpha^{P_k}_0 = 0$). Now fix $s > 0$ and $\delta > 0$. Since the left limit $X_{s-}$ exists, there is a $\delta' > 0$ such that $0 < s' < \delta'$ implies $|X_{s-} - X_{s-s'}| < \delta$, and there is a $K$ such that $k > K$ implies $|P_k| < \delta'$. Hence $|\alpha^{P_k}_s| \leq \delta$ for all $k > K$, so $\alpha^{P_k}_s \to 0$; since $s$ was arbitrary, the claim follows.
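To see the bound $|\alpha^{P}_s| \leq \sup_{0<\epsilon\leq|P|}|X_{s-} - X_{s-\epsilon}|$ at work, here is a sketch that swaps in a deterministic smooth path, $\sin$ on a fine grid of $[0, 2\pi]$, for a random cadlag path (an assumption made for illustration only). Because $\sin$ is 1-Lipschitz, the bound gives $|\alpha| \leq$ mesh, and refining uniform partitions should shrink $\max|\alpha|$; `max_alpha` is a hypothetical helper:

```python
import bisect
import math

def max_alpha(path, partition):
    """Largest |X_{i-1} - X_tau| over fine steps, tau the last partition point <= i-1."""
    worst = 0.0
    for i in range(1, len(path)):
        k = bisect.bisect_right(partition, i - 1) - 1
        worst = max(worst, abs(path[i - 1] - path[partition[k]]))
    return worst

N = 4096
h = 2 * math.pi / N                                  # grid spacing on [0, 2*pi]
path = [math.sin(i * h) for i in range(N + 1)]       # smooth stand-in for a sample path
results = []
for step in (1024, 256, 64, 16):
    partition = list(range(0, N + 1, step))          # uniform partition with mesh step * h
    results.append((step * h, max_alpha(path, partition)))
for mesh, worst in results:
    print(f"mesh={mesh:.4f}  max|alpha|={worst:.4f}")
```

For a genuinely cadlag path with jumps the uniform bound fails near jump times, which is why the argument above is pointwise in $s$ and uses only the existence of $X_{s-}$.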

Therefore $\alpha^{P_k}_s \to 0$ a.s. for every $s$. Moreover, the bound $|\alpha^{P}_s| \leq |X_{s-}| + X^*_{s-}$ holds for $\alpha^P$ itself, for every partition $P$, since at most one term of the series defining it is nonzero; so the integrands $\alpha^{P_k}$ are dominated by a single $X$-integrable process. By dominated convergence, $\int_0^t \alpha^{P_k}_s\,dX_s \to 0$, and hence, by the identity above, $[X]^{P_k} \to [X]$.
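As a numerical illustration (not a proof), recall that standard Brownian motion $W$ has $[W]_t = t$, so the theorem predicts $[W]^{P_k}_1 \to 1$ as the mesh shrinks. The sketch below assumes a simulated path on an equally spaced grid of $[0,1]$, so the finest available partition is the simulation grid itself; the seed and the helper names are arbitrary choices:

```python
import math
import random

def brownian_path(n_steps, t_end, seed):
    """Simulate W on an equally spaced grid of [0, t_end] with Gaussian increments."""
    rng = random.Random(seed)
    sd = math.sqrt(t_end / n_steps)
    path = [0.0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, sd))
    return path

def bracket_on_partition(path, step):
    """[W]^P_T for the uniform partition using every `step`-th grid point."""
    pts = path[::step]
    return sum((b - a) ** 2 for a, b in zip(pts, pts[1:]))

N, T = 2 ** 14, 1.0
W = brownian_path(N, T, seed=42)
estimates = {step: bracket_on_partition(W, step) for step in (4096, 256, 16, 1)}
for step, value in estimates.items():
    print(f"mesh={step * T / N:.6f}  [W]^P_T={value:.4f}  (target {T})")
```

With $n$ partition intervals, $[W]^P_1$ has mean $1$ and standard deviation $\sqrt{2/n}$, so the coarse estimates fluctuate visibly while the finest one sits close to $1$.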

Dominated convergence holds in both the ucp and the semimartingale topologies, so the convergence above holds in either sense; convergence in the semimartingale topology is the stronger of the two.

It remains to treat the case where $|P_k| \to 0$ only in probability. Any sequence converging to $0$ in probability has a subsequence converging to $0$ almost surely. Applying this to an arbitrary subsequence $P_{k_n}$, we find a further subsequence $P_{k_{n_l}}$ whose mesh goes to $0$ almost surely, and by the argument above $[X]^{P_{k_{n_l}}} \to [X]$ in the semimartingale topology. Thus every subsequence of $[X]^{P_k}$ has a further subsequence converging to $[X]$, and this implies $[X]^{P_k} \to [X]$ in the same topology. The argument is the familiar one for metric spaces and in fact works in any topological space: if $x_k \not\to x$, there is a neighborhood $U$ of $x$ and a subsequence lying entirely outside $U$, and no further subsequence of it can converge to $x$.