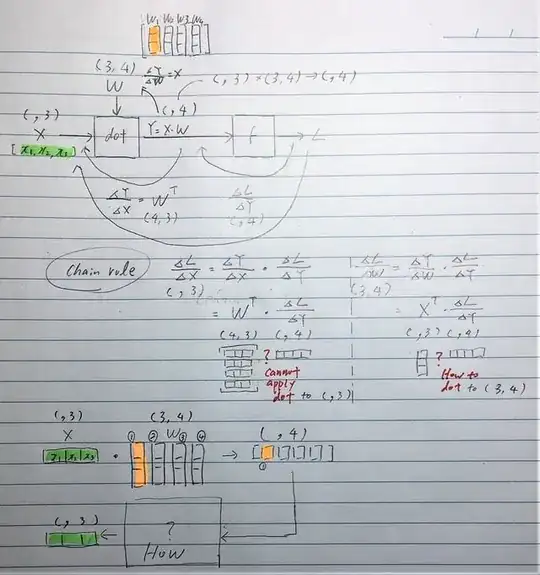

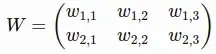

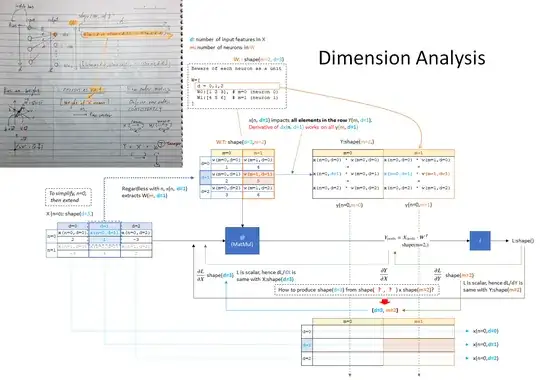

Gradient $\frac{dL}{dX}$ using the chain rule

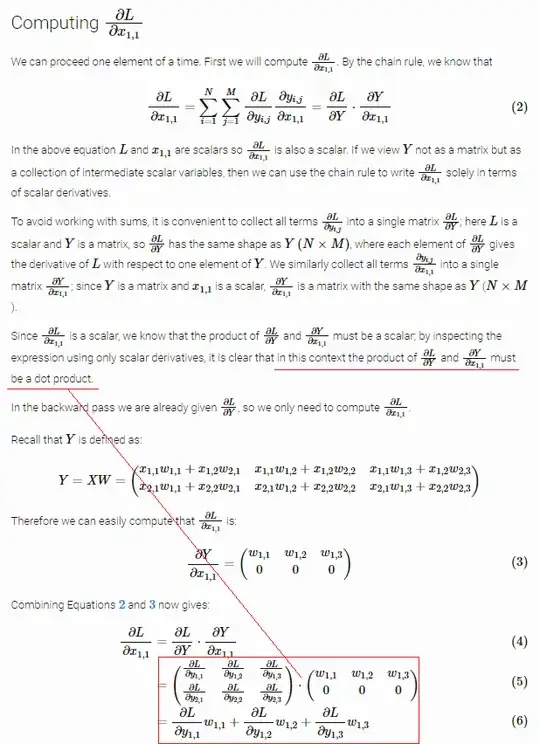

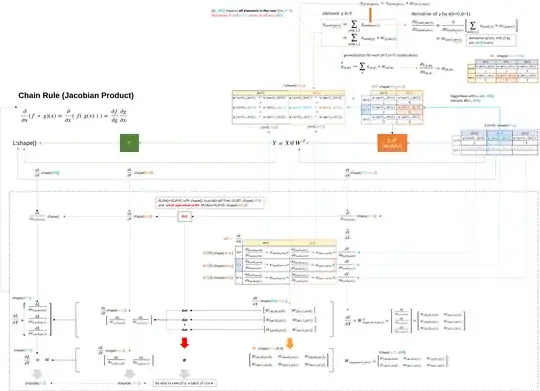

With the chain rule, $\frac{dL}{dX} = \frac{dY}{dX} \cdot \frac{dL}{dY}$, and $\frac{dY}{dX} = W$ for the product $Y = X \cdot W$.

Q1

I suppose I need to turn $\frac{dY}{dX}$ into the transpose $W^\intercal$ to match the shapes. For instance, if the shape of X is (, 3) in NumPy terms, the last axis of $\frac{dY}{dX}$ needs to be 3, so that $\frac{dY}{dX} \cdot dX^\intercal \rightarrow dY$: (m, 3) • (3, n) → (m, n)?

However, I am not sure whether this is correct, or why, so I would appreciate any explanation.
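A quick numerical check of the Jacobian's shape, assuming X has NumPy shape (3,) and W has shape (3, 4) as in the diagram. If the Jacobian is laid out with one row per output $Y_j$ and one column per input $X_i$ (an assumed convention; the transposed layout is also common), then since $Y_j = \sum_i X_i W_{ij}$, the entry $\partial Y_j / \partial X_i$ is $W_{ij}$, so the Jacobian comes out as $W^\intercal$ with shape (4, 3):

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal(3)        # X, NumPy shape (3,)
W = rng.standard_normal((3, 4))   # W, shape (3, 4), so Y = x @ W has shape (4,)

def forward(x):
    return x @ W

# Numerical Jacobian J[j, i] = dY_j / dX_i via central differences.
eps = 1e-6
J = np.zeros((4, 3))
for i in range(3):
    e = np.zeros(3)
    e[i] = eps
    J[:, i] = (forward(x + e) - forward(x - e)) / (2 * eps)

print(J.shape)               # (4, 3)
print(np.allclose(J, W.T))   # True
```

Because Y is linear in X, the central difference is exact up to floating-point rounding, so the comparison with `W.T` is tight.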

Q2

How can I apply the chain rule formula to matrices?

$\frac{dL}{dX} = W^\intercal \cdot \frac{dL}{dY}$

This cannot be computed because of a shape mismatch: $W^\intercal$ is (4, 3) while $\frac{dL}{dY}$ is (, 4).

The same problem occurs with $\frac{dL}{dW} = X^\intercal \cdot \frac{dL}{dY}$, because $X^\intercal$ is (, 3) while $\frac{dL}{dY}$ is (, 4).

What thinking, rationale, or transformation can I apply to get past this?
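A small sketch reproducing both mismatches in NumPy, assuming X of shape (3,), W of shape (3, 4), and an upstream gradient of shape (4,). Note that `.T` on a 1D array returns it unchanged, so `x.T` is still shape (3,):

```python
import numpy as np

x = np.ones(3)            # X, NumPy shape (3,)
W = np.ones((3, 4))       # W, shape (3, 4)
dL_dY = np.ones(4)        # upstream gradient dL/dY, shape (4,)

# Try the two products literally as written.
attempts = {
    "W.T @ dL_dY": lambda: W.T @ dL_dY,   # (4, 3) @ (4,): inner sizes 3 vs 4
    "x.T @ dL_dY": lambda: x.T @ dL_dY,   # (3,) @ (4,): inner sizes 3 vs 4
}
failed = []
for name, f in attempts.items():
    try:
        f()
    except ValueError:
        failed.append(name)

print(failed)   # ['W.T @ dL_dY', 'x.T @ dL_dY']
```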

There are typos in the diagram.

(,4) should be (4,), etc. In my head, a 1D array of 4 elements was (,4), but in NumPy it is (4,).
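For reference, a short sketch of how NumPy distinguishes a 1D array from explicit row and column vectors; transposing only changes something once the array is 2D:

```python
import numpy as np

v = np.arange(4)
print(v.shape)                  # (4,)   1D array: neither a row nor a column
print(v.T.shape)                # (4,)   .T does nothing to a 1D array
print(v.reshape(1, -1).shape)   # (1, 4) explicit row vector
print(v.reshape(-1, 1).shape)   # (4, 1) explicit column vector
print(v[np.newaxis, :].shape)   # (1, 4) same as reshape(1, -1)
```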

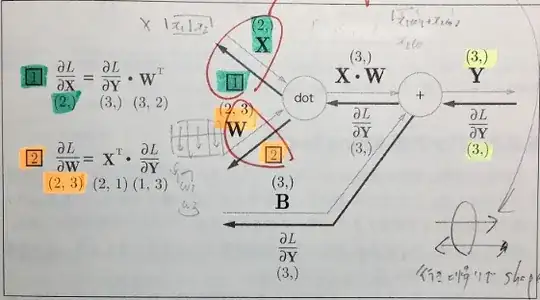

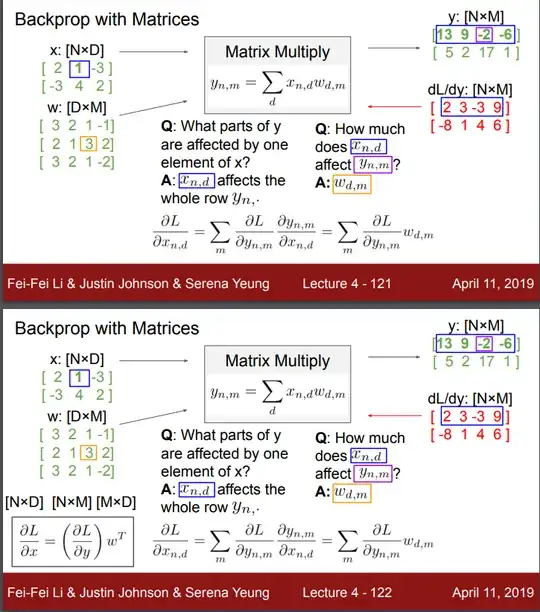

For $\frac{dL}{dX}$

I saw that the answer swaps the positions, but I have no idea where this comes from or why.

$\frac{dL}{dX} = \frac{dL}{dY} \cdot W^\intercal$

Instead of:

$\frac{dL}{dX} = W^\intercal \cdot \frac{dL}{dY}$
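A numerical sketch checking the swapped form $\frac{dL}{dX} = \frac{dL}{dY} \cdot W^\intercal$. The loss $L = \tfrac{1}{2}\sum_j Y_j^2$ is a hypothetical choice made only so that $\frac{dL}{dY} = Y$ is easy to write down; shapes are X: (3,), W: (3, 4). Componentwise, $\frac{\partial L}{\partial X_i} = \sum_j \frac{\partial L}{\partial Y_j} W_{ij}$, which is what `dL_dY @ W.T` computes:

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.standard_normal(3)        # X, shape (3,)
W = rng.standard_normal((3, 4))   # W, shape (3, 4)

def loss(x, W):
    # Hypothetical scalar loss for illustration: L = 0.5 * sum(Y**2), Y = x @ W
    y = x @ W
    return 0.5 * np.sum(y ** 2)

dL_dY = x @ W                     # for this loss dL/dY equals Y, shape (4,)
dL_dX = dL_dY @ W.T               # (4,) @ (4, 3) -> (3,), same shape as x

# Central-difference check of each component of dL/dX.
eps = 1e-6
num = np.array([(loss(x + eps * e, W) - loss(x - eps * e, W)) / (2 * eps)
                for e in np.eye(3)])

print(dL_dX.shape)               # (3,)
print(np.allclose(dL_dX, num))   # True
```

With 1D arrays, `W @ dL_dY` gives the same (3,) result; that is the column-vector way of writing the same sum over $j$.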

For $\frac{dL}{dW}$

The shapes of $X^\intercal$ (, 3) and $\frac{dL}{dY}$ (, 4) need to be transformed into (3, 1) and (1, 4) respectively to make the product work, but I have no idea where this comes from or why.
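A sketch of where the (3, 1) and (1, 4) shapes can come from. Since $Y_j = \sum_i X_i W_{ij}$, each entry is $\frac{\partial L}{\partial W_{ij}} = X_i \frac{\partial L}{\partial Y_j}$, which is the outer product of X and $\frac{dL}{dY}$; reshaping to (3, 1) and (1, 4) is one way to write that outer product as a matrix product. The loss below is a hypothetical choice for illustration, with X: (3,) and W: (3, 4):

```python
import numpy as np

rng = np.random.default_rng(2)
x = rng.standard_normal(3)        # X, shape (3,)
W = rng.standard_normal((3, 4))   # W, shape (3, 4)

def loss(x, W):
    # Hypothetical scalar loss for illustration: L = 0.5 * sum(Y**2), Y = x @ W
    y = x @ W
    return 0.5 * np.sum(y ** 2)

dL_dY = x @ W                                      # for this loss dL/dY equals Y, shape (4,)
dL_dW = x.reshape(3, 1) @ dL_dY.reshape(1, 4)      # (3, 1) @ (1, 4) -> (3, 4)

print(np.allclose(dL_dW, np.outer(x, dL_dY)))      # True: same as the outer product

# Central-difference check of every entry of dL/dW.
eps = 1e-6
num = np.zeros_like(W)
for i in range(3):
    for j in range(4):
        E = np.zeros_like(W)
        E[i, j] = eps
        num[i, j] = (loss(x, W + E) - loss(x, W - E)) / (2 * eps)

print(dL_dW.shape)               # (3, 4), same shape as W
print(np.allclose(dL_dW, num))   # True
```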

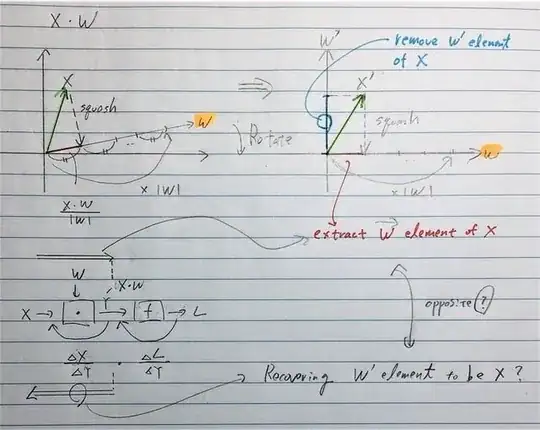

Geometry

As I understand it, X•W geometrically extracts the part of X along the $\vec{\mathbf{W}}$ direction by truncating the other dimensions of X. If so, do $\frac{dL}{dX}$ and $\frac{dL}{dW}$ restore the truncated dimensions? I am not sure this is correct, but if it is, would it be possible to visualize it like the X•W projection in the diagram?
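One way to look at the geometry, sketched under the same assumed shapes (X: (3,), W: (3, 4)): each output $Y_j = X \cdot W_{:,j}$ is a dot product of X with column $j$ of W, and the gradient of $Y_j$ with respect to X is that column. So $\frac{dL}{dX} = \frac{dL}{dY} \cdot W^\intercal$ is a weighted sum of W's columns with weights $\frac{\partial L}{\partial Y_j}$; any component of X orthogonal to every column of W receives zero gradient:

```python
import numpy as np

rng = np.random.default_rng(3)
W = rng.standard_normal((3, 4))   # column j is the direction that Y_j = X . W[:, j] measures
dL_dY = rng.standard_normal(4)    # upstream gradient, shape (4,)

# dL/dX written as a weighted sum of W's columns.
combo = sum(dL_dY[j] * W[:, j] for j in range(4))

print(np.allclose(dL_dY @ W.T, combo))   # True
```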