This is an attempt at an alternative, more direct proof that $(x_{(1)}, x_{(n)})$ is a minimal sufficient statistic for $(\theta, c)$.

Consider the likelihood ratio

$$ R(x,y) = \frac{p(x | \theta, c)}{p(y | \theta, c)} $$

where $x = (x_{1}, x_{2}, \dots, x_{n})$ and $y = (y_{1}, y_{2}, \dots, y_{n})$. The likelihood-ratio criterion for minimal sufficiency (which I'll loosely call the "factorization" theorem) states that if a statistic $T(x)$ has the following property P, then it is a minimal sufficient statistic for $\theta$:

P: For every pair of sample points $x$ and $y$, $R(x, y)$ is constant as a function of $\theta$ if and only if $T(x) = T(y)$.

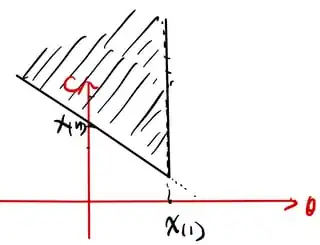

I claim that $T(x) = (x_{(1)}, x_{(n)})$ has this property and is therefore a minimal sufficient statistic for $(\theta, c)$.

To see this, first consider what $p(x | \theta, c)$ is when $x_1, \dots, x_n$ are i.i.d. uniform on $[\theta, \theta + c]$. I claim that

$$p(x | \theta, c) = c^{-n} I[x_{(1)} \geq \theta, x_{(n)} \leq \theta+c].$$

The indicator $I[x_{(1)} \geq \theta, x_{(n)} \leq \theta+c]$ just checks whether every $x_i$ lies in $[\theta, \theta+c]$, which holds exactly when the smallest observation is at least $\theta$ and the largest is at most $\theta + c$. Certainly $p(x | \theta, c) = 0$ if any $x_i$ falls outside $[\theta, \theta+c]$. Otherwise, since the distribution is uniform, the density of each $x_{i}$ is just $c^{-1}$, and because the $x_i$ are independent, the joint density is the product of these $n$ factors, namely $c^{-n}$.
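A quick way to sanity-check the claimed closed form is to compare it with the product of the $n$ marginal uniform densities at a few parameter values. This is a minimal sketch: the helper names (`marginal_density`, `joint_density_product`, `joint_density_closed_form`) and the sample values are hypothetical, chosen only for illustration.

```python
import math


def marginal_density(xi, theta, c):
    # Density of one Uniform[theta, theta + c] observation: 1/c on the interval, 0 off it.
    return 1.0 / c if theta <= xi <= theta + c else 0.0


def joint_density_product(x, theta, c):
    # Joint density of independent observations: the product of the marginals.
    return math.prod(marginal_density(xi, theta, c) for xi in x)


def joint_density_closed_form(x, theta, c):
    # Claimed closed form: c^{-n} * I[x_(1) >= theta, x_(n) <= theta + c].
    inside = min(x) >= theta and max(x) <= theta + c
    return c ** (-len(x)) if inside else 0.0


x = [0.3, 1.2, 0.9]  # hypothetical sample, for illustration only
for theta, c in [(0.0, 2.0), (0.5, 2.0), (0.0, 1.0)]:
    print(theta, c, joint_density_product(x, theta, c), joint_density_closed_form(x, theta, c))
```

At $(\theta, c) = (0, 2)$ both forms give $2^{-3}$; at the other two parameter values some observation falls outside the interval and both give $0$. For other values of $c$ the product and the power can differ in the last floating-point digits, so `math.isclose` is the safer comparison in general.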

Therefore, we have that

$$
R(x, y) = \frac{p(x | \theta, c)}{p(y | \theta, c)} = \frac{I[x_{(1)} \geq \theta, x_{(n)} \leq \theta+c]}{I[y_{(1)} \geq \theta, y_{(n)} \leq \theta+c]}
$$

since the $c^{-n}$ factors cancel (whenever the denominator is nonzero).
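As a small numerical check of the cancellation, the sketch below compares the density ratio with the indicator ratio at a few parameter values where $p(y | \theta, c) > 0$. The helper names (`joint_density`, `indicator`, `compare_ratios`) and the samples are hypothetical illustrations; note that both samples must have the same length $n$ for the $c^{-n}$ factors to cancel.

```python
def joint_density(x, theta, c):
    # p(x | theta, c) = c^{-n} * I[x_(1) >= theta, x_(n) <= theta + c]
    inside = min(x) >= theta and max(x) <= theta + c
    return c ** (-len(x)) if inside else 0.0


def indicator(x, theta, c):
    # I[x_(1) >= theta, x_(n) <= theta + c] as a float 0.0 or 1.0
    return 1.0 if min(x) >= theta and max(x) <= theta + c else 0.0


def compare_ratios(x, y, params):
    # For each (theta, c) with p(y | theta, c) > 0, return the pair
    # (density ratio, indicator ratio); the c^{-n} factors should cancel.
    pairs = []
    for theta, c in params:
        if joint_density(y, theta, c) > 0:
            density_ratio = joint_density(x, theta, c) / joint_density(y, theta, c)
            indicator_ratio = indicator(x, theta, c) / indicator(y, theta, c)
            pairs.append((density_ratio, indicator_ratio))
    return pairs


# Hypothetical samples and parameter values, chosen only for illustration.
x = [0.3, 1.2, 0.9]
y = [0.4, 1.0, 0.35]
params = [(0.0, 2.0), (0.3, 1.0), (0.35, 1.0), (0.1, 0.8)]
for density_ratio, indicator_ratio in compare_ratios(x, y, params):
    print(density_ratio, indicator_ratio)
```

The last parameter pair is skipped because $y_{(n)} = 1.0$ exceeds $\theta + c = 0.9$, so the ratio is not defined there; that is exactly the $\frac{0}{0}$ or division-by-zero issue the argument has to deal with.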

At this point, I should admit that the factorization theorem is not technically applicable here, but I'd guess that it is possible to extend the theorem to this situation. Intuitively, the ratio of $p(x | \theta, c)$ to $p(y | \theta, c)$ is independent of $(\theta, c)$ if and only if $T(x) = T(y)$, despite the technicality that $R(x, y) = \frac{0}{0}$ when neither $x$ nor $y$ lies in the interval. That is, if we adopt the convention $\frac{0}{0} = 1$, then $R(x, y) = 1$ for every $(\theta, c)$ whenever $T(x) = T(y)$. When $T(x) \neq T(y)$, on the other hand, there are parameter values at which $R(x, y) = 1$ (both samples lie in the interval) and others at which it equals $0$ or $+\infty$ (only one sample does), so $R(x, y)$ depends on $(\theta, c)$.
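The $\frac{0}{0} = 1$ convention can be explored numerically by collecting the distinct values $R(x, y)$ takes over a grid of $(\theta, c)$. This is a minimal sketch under stated assumptions: the helper names, the samples, and the grid are all hypothetical, and a finite grid can only illustrate property P, not prove it.

```python
import math


def indicator(x, theta, c):
    # I[x_(1) >= theta, x_(n) <= theta + c] as 0 or 1
    return 1 if min(x) >= theta and max(x) <= theta + c else 0


def ratio_with_convention(x, y, theta, c):
    # R(x, y) after the c^{-n} factors cancel, using the convention 0/0 = 1.
    num, den = indicator(x, theta, c), indicator(y, theta, c)
    if num == 0 and den == 0:
        return 1.0
    if den == 0:
        return math.inf  # x lies in the interval but y does not
    return num / den  # 1.0 if both lie in the interval, 0.0 if only y does


def ratio_values(x, y, thetas, cs):
    # Set of distinct values R(x, y) takes over the grid of (theta, c).
    return {ratio_with_convention(x, y, t, c) for t in thetas for c in cs}


thetas = [i / 10 for i in range(-10, 11)]  # theta from -1.0 to 1.0
cs = [j / 10 for j in range(1, 31)]  # c from 0.1 to 3.0

x = [0.3, 1.2, 0.9]
y_same = [1.2, 0.5, 0.3]  # T(y_same) = T(x) = (0.3, 1.2)
y_diff = [0.4, 1.2, 0.9]  # T(y_diff) = (0.4, 1.2), which differs from T(x)

print(ratio_values(x, y_same, thetas, cs))
print(ratio_values(x, y_diff, thetas, cs))
```

With these samples the first set is `{1.0}`, while the second contains both `1.0` and `0.0` (for example, at $\theta = 0.4$, $c = 1.0$ the sample `y_diff` lies in the interval but `x` does not). Here $+\infty$ never appears, because every $(\theta, c)$ that puts `x` in the interval also puts `y_diff` in it; swapping the roles of the two samples would produce $+\infty$ instead of $0$.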