Suppose we have a set of points in $R^d$ and for a given constant $\epsilon>0$ we want to find a hyperplane such that it divides the dataset into two balanced partitions, and that the number of points that are $\epsilon$-close the hyperplane is minimized.

I came up with a formulation of this problem that seems to capture all these constraints and makes the problem a continuous optimization problem.

Suppose we are given $n$ points $x_1,...,x_n\in\mathbb{R}^d$ are our dataset. We parametrize the hyperplane by $w^\top x - b = 0$ in which $w\in\mathbb{R}^d$ is the unit orthogonal vector to the hyperplane and $b$ is the offset. Moreover, we label the points by $y_i\in\{0,1,-1\}$.

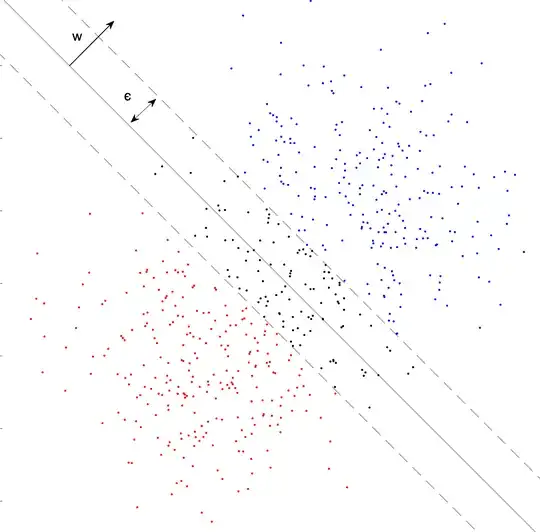

Here is an illustration when $d=2$. Blue, red, and black dots correspond to labels $y_i=1, y_i=-1$, and $y_i=0$ respectively. The goal is to find a line such that the number of blue and red points are equal and the number of black points is minimized.

We can formulate this optimization problem as follows: $$\max_{w,b,y} \sum_i y_i^2 \qquad (1)$$ such that $$\forall i\in[n]: y_i^2(w^\top x_i - b - \epsilon)(w^\top x_i - b + \epsilon) \ge 0 \quad (2)$$ $$\forall i\in[n]: y_i(w^\top x_i - b)\ge 0 \qquad (3)$$ $$y_i\in\{0,1,-1\}, \sum_i^n y_i = 0 \qquad (4)$$ In words, we have two hyperplanes parallel with the offsets $b-\epsilon$ and $b+\epsilon$, and we want to make sure that pionts in between the two are assigned label $y_i=0$ and points on the left and right side of the hyperplanes are assigned $y_i=-1$ and $y_i = 1$ respectively. The maximization in (1) means we want to minimize the number of points that are assigned $y_i=0$ which is what we want. The constraitns $\sum_i^m y_i=0$ makes sure the number points on the left and right sides is balanced. constraint (3) is to make sure points to the left and right don't have the wrong sign (it's similar to SVM constraints). And finally, constraint (2) means that points between the two hyperplanes shifted by $\epsilon$ are set to label $y_i=0$. The reason is that for these points the product $(w^\top x_i - b - \epsilon)(w^\top x_i - b + \epsilon)$ is negative, and $y_i^2$ is also non-negative, so the only way to make the result non-negative is to have $y_i=0$.

One nice property of this formulation is that we can remove $y_i\in\{0,1,-1\}$ and replace it by $-1\le y_i \le 1$, without the risk of getting non-integral solutions. The scetch of the proof of integrality is that, if we suppose we have a non-integer optimal solution $w^*, b^*, y^*$, such that for some $i$ we have $0 < y^*_i < 1$ (or $-1<y^*_i < 0$), because of $\sum_i y_i =0$ there must be some other index $j$ such that $0<y_j<1$ or $-1<y_j < 0$, and there is always a small constant $c$ such that setting $y_i^*\leftarrow y^*_i+c$ and $y^*_j\leftarrow y_j^* - c$, won't violate any constraints while improving the objective (details not discussed for sake of brevity).

However, it seems that the constraint (2) is very non-convex, and it's also degree $4$ w.r.t the variables. Hence the problem can't be solved using quadratic programming off the shelf. Are there any ideas on how this problem can be reformulated to get an optimal solution in polynomial time?

UPDATE I've added a bounty because this problem has very important applications if it can be sollved efficiently. To just briefly mention one, if we have a set of datapoints that need to be distributed on a HPC cluster system, being able to partition them in a balanced way such that they have as little as possible proximity would minimize the communication cost between the nodes. If the exact solution cannot be found, any approximation algorithm that achieves a reasonable bound is also acceptable.