I am using the book Understanding Markov Chains by Nicolas Privault, and I have started to get confused when it comes to continuous-time Markov chains.

As far as I understand, a continuous-time Markov chain is quite similar to a discrete-time Markov chain, except for some new formulas. For example, the stationary distribution is found from the infinitesimal generator $Q$ by solving $$\pi Q = 0.$$
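As a minimal sketch (not from the book), $\pi Q = 0$ together with $\sum_i \pi_i = 1$ can be solved numerically for a finite generator. The 3-state generator below is a hypothetical example chosen only for illustration, and the helper name `stationary_from_generator` is likewise made up.

```python
import numpy as np

def stationary_from_generator(Q):
    """Solve pi Q = 0 with sum(pi) = 1 for a finite, irreducible generator Q."""
    n = Q.shape[0]
    # Balance equations Q^T pi^T = 0, plus one extra row enforcing sum(pi) = 1.
    A = np.vstack([Q.T, np.ones(n)])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi

# Hypothetical generator: off-diagonal rates >= 0, each row sums to 0.
Q = np.array([[-2.0,  1.0,  1.0],
              [ 1.0, -1.0,  0.0],
              [ 2.0,  0.0, -2.0]])
pi = stationary_from_generator(Q)
```

For this $Q$ the solution is $\pi = (0.4, 0.4, 0.2)$. The extra normalization row is what turns the rank-deficient system $\pi Q = 0$ into one with a unique solution when the chain is irreducible.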

Continuous-Time Markov Chain

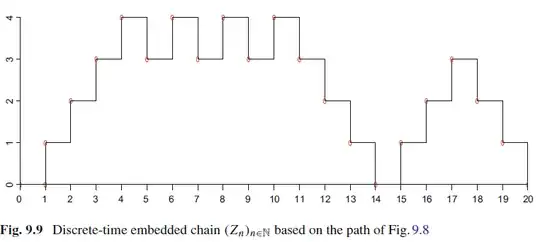

Embedded Chain (by considering only the jumps)
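For a finite chain, the embedded (jump) chain is usually built from $Q$ by $P_{ij} = q_{ij}/q_i$ for $i \ne j$ and $P_{ii} = 0$, where $q_i = -q_{ii}$ is the total exit rate of state $i$. The sketch below assumes that this matches the book's definition and that every $q_i > 0$; the function names and the 3-state example are hypothetical.

```python
import numpy as np

def jump_chain(Q):
    """Transition matrix of the embedded (jump) chain of a finite generator Q.

    Assumes every state has a positive exit rate q_i = -Q[i, i].
    """
    q = -np.diag(Q)            # total exit rate of each state
    P = Q / q[:, None]         # divide row i by q_i
    np.fill_diagonal(P, 0.0)   # the jump chain never stays in place
    return P

def stationary_of_P(P):
    """Solve nu P = nu with sum(nu) = 1 by least squares."""
    n = P.shape[0]
    A = np.vstack([P.T - np.eye(n), np.ones(n)])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    nu, *_ = np.linalg.lstsq(A, b, rcond=None)
    return nu

# Hypothetical generator used only for illustration.
Q = np.array([[-2.0,  1.0,  1.0],
              [ 1.0, -1.0,  0.0],
              [ 2.0,  0.0, -2.0]])
P = jump_chain(Q)
nu = stationary_of_P(P)
```

Here $P$ has rows $(0, 0.5, 0.5)$, $(1, 0, 0)$, $(1, 0, 0)$ and $\nu = (0.5, 0.25, 0.25)$, whereas solving $\pi Q = 0$ for the same $Q$ gives $(0.4, 0.4, 0.2)$. So the embedded chain and the continuous-time chain need not share a stationary distribution.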

A Concrete Example

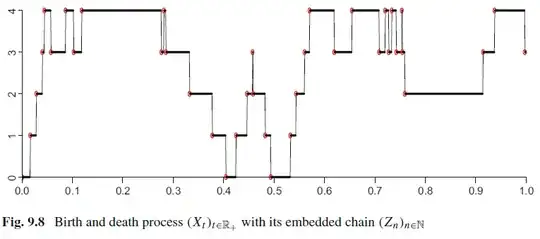

Now consider a birth-and-death process $X(t)$ with birth rates $\lambda_n = \lambda$ and death rates $\mu_n = n\mu$, and let $X_n$ be its embedded chain. Prove that $X_n$ has the stationary distribution

$$\pi_n=\frac{1}{2\,n!}\left(1+\frac{n}{\rho}\right)\rho^n e^{-\rho}, \qquad n \ge 0,$$ where $\rho=\lambda/\mu$.
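A quick numerical sanity check of the claimed formula. It assumes the usual jump probabilities of this embedded chain, $\lambda/(\lambda+n\mu)$ up and $n\mu/(\lambda+n\mu)$ down (with state $0$ always jumping to $1$), and tests the balance equations $(\nu P)_n = \nu_n$ on a truncated range of states. The names `target_pi` and `embedded_balance_residual`, and the values $\lambda = 2$, $\mu = 1$, are illustrative choices.

```python
import math

def target_pi(n, rho):
    """Claimed stationary probability of state n for the embedded chain."""
    return (1 + n / rho) * rho**n * math.exp(-rho) / (2 * math.factorial(n))

def embedded_balance_residual(lam, mu, N):
    """Largest |(nu P)_n - nu_n| over states 0..N, with nu_n = target_pi(n, rho).

    Assumed jump probabilities: from n go up with prob lam/(lam + n*mu) and
    down with prob n*mu/(lam + n*mu); from 0 the chain always jumps to 1.
    """
    rho = lam / mu
    nu = [target_pi(n, rho) for n in range(N + 2)]

    def up(n):
        return lam / (lam + n * mu)

    def down(n):
        return n * mu / (lam + n * mu)

    worst = 0.0
    for n in range(N + 1):
        inflow = nu[n + 1] * down(n + 1)      # arrive from n+1 by a death
        if n > 0:
            inflow += nu[n - 1] * up(n - 1)   # arrive from n-1 by a birth
        worst = max(worst, abs(inflow - nu[n]))
    return worst

residual = embedded_balance_residual(lam=2.0, mu=1.0, N=30)
total = sum(target_pi(n, 2.0) for n in range(80))  # truncated total mass
```

A residual at rounding-error level and a total close to $1$ are consistent with the formula being stationary for the embedded chain; this is a check, not a proof.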

My Insight

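By writing out the infinitesimal generator $Q$ and solving $\hat{\pi} Q = 0$, we get the well-known recursion for birth-and-death processes, $\lambda_{n-1}\hat{\pi}_{n-1} = \mu_n\hat{\pi}_n$, i.e. $\hat{\pi}_n = \frac{\lambda}{n\mu}\hat{\pi}_{n-1}$, which unrolls to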

$$\hat{\pi}_n = \frac{\lambda^{n}}{\mu^{n}n!}\hat{\pi}_0$$
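The closed form can be checked against the full balance equations $\hat{\pi} Q = 0$ (not only the recursion) with a few lines of Python. This sketch sets $\hat{\pi}_0 = 1$ and checks columns $0,\dots,N$ of $\hat{\pi} Q$; the function name and parameter values are hypothetical.

```python
import math

def generator_residual(lam, mu, N):
    """Largest |(pi_hat Q)_j| over j = 0..N, with pi_hat_n = rho**n / n! (pi_hat_0 = 1).

    Q is the generator of the birth-death process with lambda_n = lam, mu_n = n*mu.
    """
    rho = lam / mu
    pi_hat = [rho**n / math.factorial(n) for n in range(N + 2)]
    worst = 0.0
    for j in range(N + 1):
        # Probability flow into state j ...
        inflow = pi_hat[j + 1] * (j + 1) * mu   # death from j+1
        if j > 0:
            inflow += pi_hat[j - 1] * lam       # birth from j-1
        # ... minus the flow out of state j at total rate lam + j*mu.
        outflow = pi_hat[j] * (lam + j * mu)
        worst = max(worst, abs(inflow - outflow))
    return worst
```

For example, `generator_residual(2.0, 1.0, 30)` is at floating-point rounding level.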

Since a stationary distribution must sum to 1, we normalize $\hat{\pi}_n$ to get the actual $\pi_n$:

$$\pi_n = \frac{\hat{\pi}_n}{\sum_{i=0}^{\infty}\hat{\pi}_i}.$$
Since $\hat{\pi}_0$ appears in both the numerator and the denominator, it cancels. The remaining denominator, $\sum_{i=0}^{\infty}\rho^i/i!$, is the Taylor series of $e^{\rho}$. Therefore, I got

$$\pi_n=\frac{\rho^n e^{-\rho}}{n!}.$$
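The normalization step can be mirrored numerically: summing $\rho^n/n!$ over a truncated range approximates $e^{\rho}$, and the normalized weights match the Poisson($\rho$) probabilities $\rho^n e^{-\rho}/n!$. The truncation point $N = 60$, the value $\rho = 2$, and the helper names are assumptions of this sketch.

```python
import math

def normalize(rho, N):
    """Normalize pi_hat_n = rho**n / n! over the truncated range n = 0..N."""
    pi_hat = [rho**n / math.factorial(n) for n in range(N + 1)]
    total = sum(pi_hat)  # partial Taylor sum of e**rho
    return [p / total for p in pi_hat], total

def poisson_pmf(n, rho):
    return rho**n * math.exp(-rho) / math.factorial(n)

rho = 2.0
pi, total = normalize(rho, N=60)
```

For $\rho = 2$ the tail omitted beyond $N = 60$ is far below floating-point precision, so `total` agrees with `math.exp(rho)` and `pi[n]` with `poisson_pmf(n, rho)` up to rounding.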

This is quite similar to the target, but the factor $\frac{1}{2}\left(1+\frac{n}{\rho}\right)$ is missing. Where does it come from, and how do I get it back?