Background and original problem:

I want to minimize the difference (error) of a numerical derivative approximation of a function and its true derivative.

Let $f(x) = \sin(x) $ be the anti-derivative of $f'(x)$ and $\delta_h f$ be the approximated differentiation ($\Delta y / \Delta x$) and let $x=2$ for both cases.

I calculate now the difference between the two, and decrease the $\Delta x$ so I will get a better approximation of the derivative at that point.

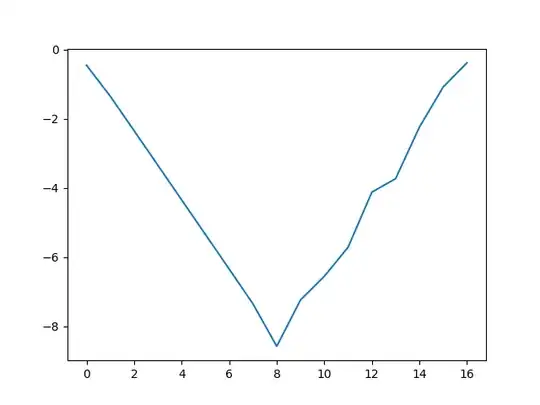

So I plot my difference $ \log_{10}|f' - \delta_h f|$ and the result is the following.

At first it is falling because that's what you'd expect it to do, due to the rising precision of the approximation. Then it rises, presumably due to various rounding errors that happen during the approximation, whereas in the true derivative of $f(x)$ our rounding error is in the margin of error of our machine epsilon, so the increase in error is definitely caused by our approximation and the rounding errors in it.

This is how $f'(x)$ and $\delta_h f $ are defined:

$$f'(x) = \frac{f(x+h) - f(x)}{h} + \mathcal{O}(h) $$ where $\mathcal{O}(h)$ is the magnitude of our machine eps.

$$\delta_h f = \frac{f(x+h) - f(x)}{h} $$

$ h = 10^{-1*k} k \in N $ where k increases with each step on the plot.

Given these formulae we can give the resulting relative error:

$| \mathcal{O}(h) - \frac{f(x+h)}{h}\delta | $

But I don't know any further than this.

I also want to be able to explain how the rounding error of the $\Delta x$ has its effect on the total error.

My exact question is: How can I further formalize and understand the effect of the rounding errors, specifically the subtraction error in the approximation ?

I have tried and got stuck at the above point.