[[This is a rephrasing of this question, intended to be more general.]]

One of the fundamental rules of mathematical language is that one may give a name only to a uniquely determined object. Yet in probability theory, objects such as the conditional expectation and the conditional probability with respect to a $\sigma$-algebra are defined as *any* random variable satisfying certain properties, and are nevertheless given names: $E(X|\mathcal{F})$ and $P(B|\mathcal{F})$. Another example is the Radon–Nikodym derivative $\frac{d\mu}{d\nu}$ (although this is more generally a concept of measure theory). More broadly, probability theory produces many objects that are only almost surely unique. Similarly, many statements are "only" almost surely true, and it seems to me that the Borel–Kolmogorov paradox implies that what happens on a null set can only be considered meaningful or interesting if one specifies how that null set is reached (that is, if one specifies sequences, nets, or filters of non-null sets that in some sense "converge" to it).
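For concreteness, here are the defining properties alluded to above, written in the standard textbook form (assuming $X$ integrable, and $\mu \ll \nu$ with $\mu, \nu$ both $\sigma$-finite). Each one characterizes its object only up to a null set:

$$
\begin{aligned}
&E(X\mid\mathcal F) := \text{any } \mathcal F\text{-measurable, integrable } Y \text{ with } \int_A Y\,dP = \int_A X\,dP \quad \text{for all } A \in \mathcal F,\\
&P(B\mid\mathcal F) := E(\mathbf 1_B \mid \mathcal F),\\
&\frac{d\mu}{d\nu} := \text{any measurable } f \ge 0 \text{ with } \mu(A) = \int_A f\,d\nu \quad \text{for all measurable } A,\\
&Y, Y' \text{ both qualify} \implies Y = Y' \ P\text{-a.s.}, \qquad f, f' \text{ both qualify} \implies f = f' \ \nu\text{-a.e.}
\end{aligned}
$$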

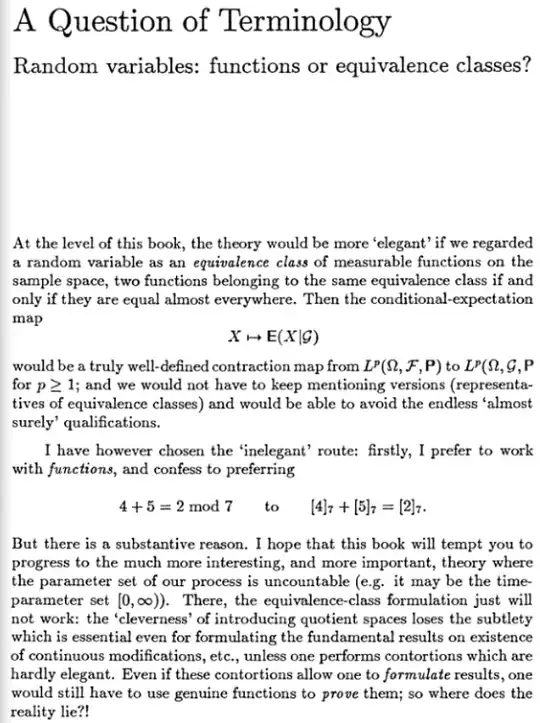
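The dependence on "how the null set is reached" can be seen numerically. The following is a minimal Monte Carlo sketch, assuming $X, Y$ i.i.d. uniform on $(0,1)$ (a variant on the unit square rather than the classical great-circle example on the sphere). The null set $\{X = Y\}$ is approached in two ways: through the bands $|Y - X| < \varepsilon$ (conditioning on $Y - X = 0$) and through the bands $|Y/X - 1| < \varepsilon$ (conditioning on $Y/X = 1$). The helper name `conditional_mean_of_x`, the band width `eps = 0.01`, the sample size and the seed are all illustrative choices. Computing the conditional densities shows that the two limits differ: given $Y - X = 0$, $X$ has density $1$ on $(0,1)$, so its mean is $1/2$; given $Y/X = 1$, $X$ has density $2x$, so its mean is $2/3$.

```python
import random


def conditional_mean_of_x(condition, n=200_000, seed=0):
    """Monte Carlo estimate of E[X | condition(X, Y)] for X, Y iid Uniform(0, 1).

    `condition` should select a thin band of positive probability around
    the null set {X = Y}; only samples inside the band are averaged.
    """
    rng = random.Random(seed)
    total, count = 0.0, 0
    for _ in range(n):
        x, y = rng.random(), rng.random()
        if condition(x, y):
            total += x
            count += 1
    return total / count


eps = 0.01
# Approach 1: bands |Y - X| < eps, i.e. conditioning on Y - X = 0.
m_diff = conditional_mean_of_x(lambda x, y: abs(y - x) < eps)
# Approach 2: bands |Y/X - 1| < eps, i.e. conditioning on Y/X = 1
# (the guard x > 0 avoids division by zero, since random() can return 0.0).
m_ratio = conditional_mean_of_x(lambda x, y: x > 0 and abs(y / x - 1) < eps)

print(f"E[X | |Y-X| < eps]   ~ {m_diff:.3f}  (limit 1/2)")
print(f"E[X | |Y/X-1| < eps] ~ {m_ratio:.3f}  (limit 2/3)")
```

Both bands shrink to the same set $\{X = Y\}$, yet the estimates settle near different values. This is exactly why a conditional expectation given a null event is not determined by the event alone.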

When mathematicians have multiple objects satisfying a given property but do not want to care about this variability, they generally resort to a powerful and amazing concept: quotient sets and equivalence classes. To my knowledge, this is the canonical tool whenever one wants to forget irrelevant variability, and it can also bring unexpected structure to light: $L^p$ is a Banach space (and $L^2$ a Hilbert space) only because one does not distinguish between functions that are equal almost everywhere.
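To spell out why the quotient is needed (standard facts, for $1 \le p < \infty$): on the space $\mathcal L^p$ of measurable functions with $\int |f|^p\,d\mu < \infty$, the functional $\|\cdot\|_p$ is only a seminorm, because it vanishes on every function that is zero almost everywhere. Passing to the quotient makes it positive definite, and completeness (the Riesz–Fischer theorem) then makes $L^p$ a Banach space:

$$
\|f\|_p = \Big(\int |f|^p\,d\mu\Big)^{1/p}, \qquad \|f\|_p = 0 \iff f = 0 \ \mu\text{-a.e.},
$$

$$
\mathcal N = \{ f : f = 0 \ \mu\text{-a.e.} \}, \qquad L^p = \mathcal L^p / \mathcal N, \qquad \big\|[f]\big\|_p := \|f\|_p, \qquad \big\langle [f],[g] \big\rangle_{L^2} := \int f\,\bar g\,d\mu .
$$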

An $S$-valued random element is traditionally described as a measurable function $X : (\Omega, \sigma_\Omega, P) \to (S, \sigma_S)$. Here $S$ may be any kind of space, including function spaces, so the definition is intended to encompass all kinds of random objects: random variables, stochastic processes, random fields, point processes, random measures, random sets, etc. So why has no attempt been made to simplify the entire theory of probability by working with equivalence classes wherever possible (which seems to be everywhere), and then defining an $S$-valued random element as an equivalence class of almost surely equal measurable functions into $S$?
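In symbols, the proposal could be sketched as follows. The notation $L^0(\Omega; S)$ for the quotient is borrowed from the real-valued case and is used here only as a label. The relation $\sim$ is an equivalence relation (a finite union of null sets is null), and the law of a class is well defined because $X^{-1}(A)$ and $X'^{-1}(A)$ differ only inside $N$:

$$
X \sim X' \iff \exists\, N \in \sigma_\Omega \text{ with } P(N) = 0 \text{ and } X(\omega) = X'(\omega) \ \text{for all } \omega \in \Omega \setminus N,
$$

$$
L^0(\Omega; S) := \{ X : \Omega \to S \text{ measurable} \} / \sim, \qquad P_{[X]}(A) := P\big(X^{-1}(A)\big) \quad (A \in \sigma_S).
$$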

What could be a fundamental obstacle (mathematically speaking; I am not considering social traditions) to such an approach, or what relevant information might be lost with it? It seems to me that much of the current formulation of probability theory (not to mention statistics) is laborious, as if, unlike algebraic geometry, it simply had not found its Grothendieck yet.