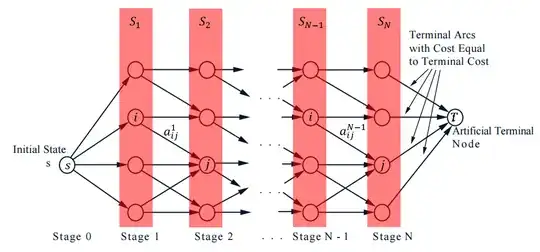

Dynamic programming usually works "backward" - start from the end, and arrive at the start. This works both when there is and when there isn't uncertainty in the problem (e.g. some noise in the state). The backward DP algorithm is then (for the case of no noise):

Initialization: $$J_N(i)=a_{iT}^N\quad i\in S_N$$

Iteration: $$J_k(i)=\min_{j\in S_{k+1}}\left( a_{ij}^k+J_{k+1}(j) \right)\quad i\in S_k,\quad k=N-1,N-2,...,0$$

For deterministic (no uncertainty) problems, we can reverse the algorithm and say that we can do the same thing starting from the start and ending at the end, and that the solution will be the same - this is forward DP. By transforming the above-written backward DPA, we obtain the forward DP algorithm (by flipping the arrow directions in the above figure):

Initialization: $$\tilde J_N(j)=a_{sj}^0\quad j\in S_1$$

Iteration: $$\tilde J_k(j)=\min_{i\in S_{N-k}}\left( a_{ij}^{N-k}+\tilde J_{k+1}(i) \right)\quad j\in S_{N-k+1},\quad k=N-1,N-2,...,0$$

However, I don't understand why the above cannot be applied also to stochastic problems, i.e. Thank you for your help!

In the deterministic case: The forward algorithm has the interpretation that the cost function at each state is the cheapest way to get to that state (starting from the beginning). However, in the stochastic case, how do we make sure we really get to that state (given the state transitions are random)?

– Michael Oct 17 '15 at 15:18