I have a dataset that has the following columns:

The variable I'm trying to predict is "rent".

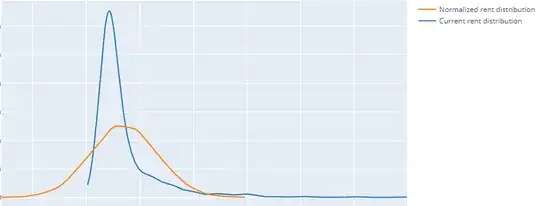

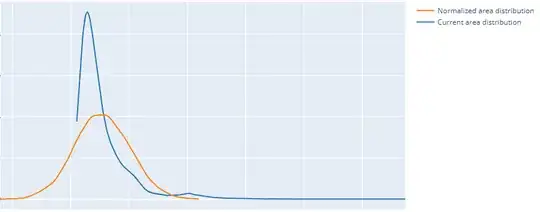

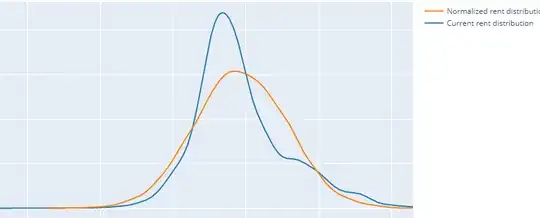

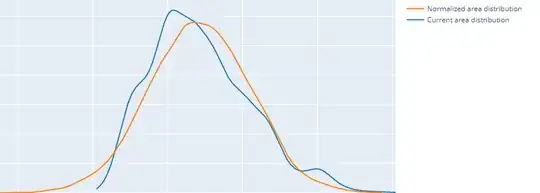

My dataset looks a lot similar to what happens in this notebook. I tried to normalize the rent column and the area column using log transformation since both columns had a positive skewness. Here's the rent column and area column distribution before and after the log transformation.

Before:

After:

I thought after these changes my regression models would improve and in fact they did, except for Linear Regression.

If I don't do any type of transformations the models underperform. When I only transform the rent column all models improve including Linear Regression, but when I transform the rent column and the area column Linear Regression has a terrible result with a MAPE of 2521729.47.

Not transforming area MAPE results:

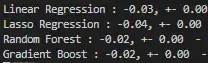

Transforming area MAPE results:

Can anyone tell me what's probably happening or guide me through any type of testing or verifications to understand what's happening to linear regression? Am I wrong to transform those columns even if the models are improving?

Edit:

After testing the models by removing and adding columns, I found that linear regression goes crazy after I insert the neighborhood column (which contains 66 neighborhoods) and create dummy columns. When I create this dummy variables the number of columns goes to 77, while the dataset has only around 3000 rows.

My thoughts are that after transforming the column into dummy columns the data becomes very sparse and with too many features for only 3000 rows, and that's why Linear Regression has this bad performance and Lasso Regression doesn't. Besides that, I should probably still use the other models since they perform well after the changes.

Am I correct?