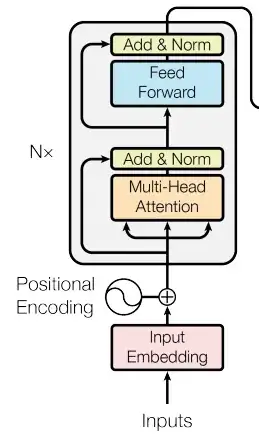

I am hand-coding a transformer (https://arxiv.org/pdf/1706.03762.pdf) based primarily on the instructions I found at this blog: http://jalammar.github.io/illustrated-transformer/.

The first attention block takes an input matrix of shape [words, input dimension] and multiplies it by the attention weight matrices (query, key, and value), each of shape [input dimension, model dimension]. The model dimension is chosen to be smaller than the input dimension, and it is the output dimension of every subsequent step.
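To make the shapes concrete, here is a minimal NumPy sketch of a single attention head as I understand it. The sizes (`n_words`, `d_input`, `d_model`), the random weights, and the `softmax` helper are placeholders chosen for illustration, not values from the paper or the blog.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only to illustrate the shapes.
n_words, d_input, d_model = 4, 16, 8

x = rng.normal(size=(n_words, d_input))    # [words, input dimension]
W_q = rng.normal(size=(d_input, d_model))  # [input dimension, model dimension]
W_k = rng.normal(size=(d_input, d_model))
W_v = rng.normal(size=(d_input, d_model))


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


q, k, v = x @ W_q, x @ W_k, x @ W_v            # each [words, model dimension]
scores = softmax(q @ k.T / np.sqrt(d_model))   # [words, words], rows sum to 1
attn_out = scores @ v                          # [words, model dimension]

print(x.shape, attn_out.shape)  # (4, 16) (4, 8)
```

The input keeps `d_input` columns while the attention output has only `d_model` columns.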

There is a residual connection around the attention block, so the input is meant to be added to the block's output. However, the output of the attention block has shape [words, model dimension], while the input has shape [words, input dimension], so the two cannot be added directly. Should I interpolate the input down to the model dimension, as is done in ResNet? Or should I add another weight matrix to transform the input?
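The sketch below shows the mismatch and what the second option (an extra weight matrix on the skip path) would look like. It is only an illustration of the idea I am asking about: `W_res` is a hypothetical projection matrix that I made up, the sizes are arbitrary, and `attn_out` is a random stand-in for the attention block's output.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only to illustrate the shapes.
n_words, d_input, d_model = 4, 16, 8

x = rng.normal(size=(n_words, d_input))          # [words, input dimension]
attn_out = rng.normal(size=(n_words, d_model))   # stand-in for the attention output

# Adding the raw input to the attention output fails: (4, 16) vs (4, 8).
try:
    _ = x + attn_out
    mismatch = False
except ValueError:
    mismatch = True

# Option under consideration: project the input on the skip path.
# W_res is a hypothetical extra weight matrix; it would be learned in practice.
W_res = rng.normal(size=(d_input, d_model)) * 0.1
residual = x @ W_res                             # [words, model dimension]
out = residual + attn_out                        # shapes now agree

print(mismatch, out.shape)  # True (4, 8)
```

With the projection in place the addition goes through, at the cost of the skip path no longer being a pure identity.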