I am trying to perform regression using XGBoost. My dataset has all positive values but some of the predictions are negative.

I read on this link that reducing the number of trees might help the situation.

I reduced the estimators from 700 to 570 and the number of negative predictions decreased but is there any way to remove these negative predictions? When I further tried reducing the estimators to 400, I got worse results with high rmse and more negative predictions.

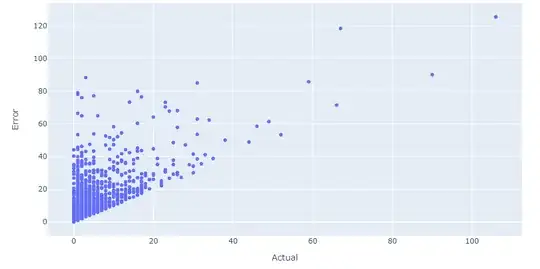

I looked at the negative predictions to understand where the model went wrong(see below image):

It looks like if I take the absolute value of the negative predictions then that would be sufficient but is this the right method?

My code is:

!pip install xgboost

!pip install scikit-optimize

from xgboost import XGBRegressor

final_XGB=XGBRegressor(random_state=123,gamma= 24.47 ,learning_rate=0.1235,max_depth=10,min_child_weight=0.21509999999999999,

n_estimators=570,subsample=0.74,reg_lambda=0.8)

from sklearn.model_selection import cross_validate

cross_val_scores=cross_validate(final_XGB,X_train,y_train,cv=3,scoring=['neg_mean_squared_error','r2'],verbose=1,return_train_score=True,n_jobs=-1 )

cross_val_scores['test_r2'].mean() #returns 0.9595609470775659

EDIT:

A bit more about the dataset. I am trying to predict the number of people that would be present at a given location at a given time period.

In order to predict the count of people, I have taken the

- hour of day,

- date,

- peak(is it a public holiday?),

- Weekend(1/0),

- Area (area of location in m2)

- location name.

The location names were string values (eg: Location1, Location2, etc.) so I transformed them using JamesSteinEncoder.

There are no missing values and I have removed a few outliers from the dataset.

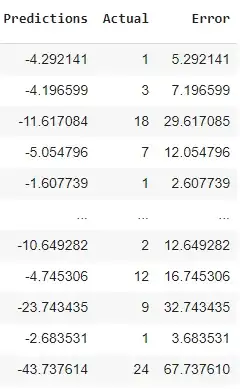

Regarding the relationship between "Actual" and "Error" column, I have plotted the graph below:

Snapshot of final results: