In mathematics and machine learning, a dataset is called linearly separable if there exists at least one hyperplane that separates the points of different classes completely, with no overlap and no misclassified points. In an $n$-dimensional space, a hyperplane is an $(n-1)$-dimensional affine subspace. The dataset is linearly separable if some such hyperplane places every point of one class on one side and every point of the other class on the opposite side.
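The definition can be checked directly for a given hyperplane. The sketch below is illustrative only. It assumes the two classes are labeled +1 and -1 and that each point is a list of coordinates. `separates` is a hypothetical helper, not part of any library. A point lying exactly on the hyperplane counts as not separated.

```python
def separates(w, b, points, labels):
    """Return True if the hyperplane w·x + b = 0 strictly separates the classes.

    points: list of n-dimensional points (lists of floats)
    labels: list of +1 / -1 class labels, one per point (assumed encoding)
    """
    for x, y in zip(points, labels):
        score = sum(wi * xi for wi, xi in zip(w, x)) + b
        # y * score > 0 means the point lies strictly on its own class's side
        if y * score <= 0:
            return False
    return True


# Illustrative 2-D data: two points labeled -1 near the origin, two labeled +1 near (3, 3)
points = [[0.0, 0.0], [1.0, 0.5], [3.0, 3.0], [2.5, 3.5]]
labels = [-1, -1, +1, +1]

# The line x1 + x2 - 3 = 0 lies between the two groups
print(separates([1.0, 1.0], -3.0, points, labels))  # True
```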

Let’s look more closely at why the existence of multiple separating hyperplanes is consistent with linear separability, and why needing several hyperplanes at once is not.

1. Existence of Multiple Hyperplanes

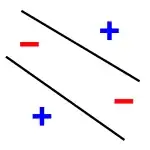

Linear separability doesn't mean there is only one hyperplane that separates the classes. It only requires that at least one exists. In fact, if one hyperplane strictly separates a finite dataset, infinitely many others do too. You can shift or rotate the hyperplane slightly, and as long as it continues to separate the classes correctly, it is still a valid solution.

So, having multiple such hyperplanes doesn’t contradict the definition; it just shows that there is flexibility in choosing which one to use.
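The following sketch makes the point concrete. It tests several shifted and rotated lines against one small dataset. The data, the +1/-1 labeling, and the helper `separates` are all illustrative assumptions. The last candidate is shifted too far, so it fails.

```python
def separates(w, b, points, labels):
    # True if every point satisfies y * (w·x + b) > 0 (strict separation)
    return all(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) > 0
        for x, y in zip(points, labels)
    )

points = [[0.0, 0.0], [1.0, 0.5], [3.0, 3.0], [2.5, 3.5]]
labels = [-1, -1, +1, +1]

# Candidate hyperplanes (w, b): variations of the line x1 + x2 - 3 = 0
candidates = [
    ([1.0, 1.0], -3.0),   # original line
    ([1.0, 1.0], -2.5),   # shifted toward the -1 points
    ([1.0, 1.0], -4.0),   # shifted toward the +1 points
    ([1.0, 1.2], -3.0),   # slightly rotated
    ([0.0, 1.0], -2.0),   # horizontal line x2 = 2
    ([1.0, 1.0], -6.5),   # shifted too far: cuts off a +1 point
]
valid = [c for c in candidates if separates(*c, points, labels)]
print(len(valid), "of", len(candidates), "candidates separate the classes")
# prints: 5 of 6 candidates separate the classes
```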

2. Non-Linearly Separable Case and Multiple Hyperplanes

If the classes can only be separated by several hyperplanes, then no single linear function (hyperplane) separates them completely. This is exactly the case in which the dataset is not linearly separable. One option is to combine several hyperplanes into a piecewise-linear boundary. Another is to map the data into a higher-dimensional feature space where a single hyperplane suffices, which is the idea behind kernel methods in support vector machines.
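XOR is the standard example of this situation. The sketch below compares a single line with a combination of two lines. Searching a finite grid of single lines does not prove anything by itself, but it is a well-known fact that no line separates XOR. The grid, the +1/-1 labels, and the two-line rule (+1 only in the band between x1 + x2 = 0.5 and x1 + x2 = 1.5) are illustrative choices.

```python
import itertools

# XOR: (0,0) and (1,1) in one class, (0,1) and (1,0) in the other
points = [(0, 0), (1, 1), (0, 1), (1, 0)]
labels = [-1, -1, +1, +1]

def linear_score(w, b, x):
    # w·x + b for 2-D points
    return w[0] * x[0] + w[1] * x[1] + b

# 1) Try every single hyperplane on a coarse grid of (w1, w2, b) values
grid = [v / 2 for v in range(-6, 7)]  # -3.0, -2.5, ..., 3.0
single_ok = any(
    all(y * linear_score((w1, w2), b, x) > 0 for x, y in zip(points, labels))
    for w1, w2, b in itertools.product(grid, repeat=3)
)

# 2) Combine two parallel hyperplanes: predict +1 only between them
def two_plane_predict(x):
    above_first = linear_score((1, 1), -0.5, x) > 0    # x1 + x2 > 0.5
    below_second = linear_score((1, 1), -1.5, x) < 0   # x1 + x2 < 1.5
    return +1 if above_first and below_second else -1

two_plane_ok = all(two_plane_predict(x) == y for x, y in zip(points, labels))
print(single_ok, two_plane_ok)  # False True
```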

3. Mathematical Interpretation: Decision Function and Loss Minimization

To check whether a dataset is linearly separable, we look for a linear decision function of the form

$$f(\mathbf{x}) = \mathbf{w}^T \mathbf{x} + b$$

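Here $\mathbf{w}$ is the weight vector and $b$ is the bias. A point is assigned to one class if $f(\mathbf{x}) > 0$ and to the other if $f(\mathbf{x}) < 0$. The hyperplane itself is the set where $f(\mathbf{x}) = 0$. The goal is to choose $\mathbf{w}$ and $b$ so that they minimize classification errors under a single loss function. The data is linearly separable exactly when some choice of $\mathbf{w}$ and $b$ reduces the number of errors to zero.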
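Here is a small sketch of the decision function and an error count. It uses the zero-one loss, which is simply the number of misclassified points. Training algorithms usually minimize smooth surrogates such as the hinge or logistic loss instead, and using the zero-one loss here is just an illustrative choice. The function names, the +1/-1 labels, and the rule that a score of exactly 0 maps to -1 are assumptions made for this example.

```python
def decision_function(w, b, x):
    # f(x) = w^T x + b
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    # +1 on the positive side of the hyperplane; -1 otherwise (ties go to -1)
    return 1 if decision_function(w, b, x) > 0 else -1

def misclassifications(w, b, points, labels):
    # Zero-one loss summed over the dataset: the count of wrong predictions
    return sum(predict(w, b, x) != y for x, y in zip(points, labels))

points = [[0.0, 0.0], [1.0, 0.5], [3.0, 3.0], [2.5, 3.5]]
labels = [-1, -1, 1, 1]

print(misclassifications([1.0, 1.0], -3.0, points, labels))  # 0: separates
print(misclassifications([1.0, -1.0], 0.0, points, labels))  # 3: a poor choice
```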

However, suppose the classes can only be separated by several hyperplanes at once, such as two intersecting or two parallel hyperplanes. Then each hyperplane has its own parameters $(\mathbf{w}_i, b_i)$, and the resulting boundary combines several linear functions instead of using the single function $f$ above. No single pair $(\mathbf{w}, b)$ can reproduce such a boundary. As a result, minimizing the loss over one linear decision function cannot drive the errors to zero, which is precisely what it means for the data not to be linearly separable.
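A practical way to ask whether some single $(\mathbf{w}, b)$ reaches zero errors is the classic perceptron algorithm, which is introduced here as an illustration and is not the only test. If the data is linearly separable, the perceptron convergence theorem guarantees that the algorithm eventually completes a pass with no mistakes. If the data is not separable, the algorithm keeps updating forever. This sketch therefore gives up after a hypothetical `max_epochs` cap. It assumes +1/-1 labels.

```python
def train_perceptron(points, labels, max_epochs=100):
    """Classic perceptron. Returns (w, b, converged).

    converged is True only if a full pass made no mistakes, which means the
    returned (w, b) strictly separates the data. On non-separable data the
    updates never stop, so we give up after max_epochs (an arbitrary cap).
    """
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(points, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:  # misclassified or on the boundary
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:
            return w, b, True
    return w, b, False

separable = ([[0, 0], [1, 0.5], [3, 3], [2.5, 3.5]], [-1, -1, 1, 1])
xor = ([[0, 0], [1, 1], [0, 1], [1, 0]], [-1, -1, 1, 1])

print(train_perceptron(*separable)[2])  # True
print(train_perceptron(*xor)[2])        # False
```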

4. Geometric Intuition and Higher-Dimensional Embedding

When multiple hyperplanes are needed, the data may still become linearly separable after it is mapped into a higher-dimensional space. Kernel methods perform such a mapping implicitly, and the hidden layers of a neural network learn one. In either case, the goal is a space where a single hyperplane separates the classes. This shows that the data is not linearly separable in its original space and needs a transformation to become linearly separable.
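The sketch below builds such a mapping by hand for XOR. It lifts each point with the explicit feature map (x1, x2) to (x1, x2, x1·x2), which is an illustrative choice. A kernel method would work with inner products in a feature space and would never construct the map explicitly. The plane weights are also picked by hand for this example.

```python
# XOR in the original 2-D space: not linearly separable
points = [(0, 0), (1, 1), (0, 1), (1, 0)]
labels = [-1, -1, 1, 1]

def feature_map(x):
    # Explicit map to 3-D: (x1, x2) -> (x1, x2, x1 * x2)
    return (x[0], x[1], x[0] * x[1])

def separates(w, b, points, labels):
    # True if every point satisfies y * (w·z + b) > 0
    return all(
        y * (sum(wi * zi for wi, zi in zip(w, z)) + b) > 0
        for z, y in zip(points, labels)
    )

lifted = [feature_map(x) for x in points]

# A single plane in the lifted space: x1 + x2 - 2*x1*x2 - 0.5 = 0
w, b = (1.0, 1.0, -2.0), -0.5
print(separates(w, b, lifted, labels))  # True
```

Pulled back to the original plane, this single 3-D hyperplane corresponds to $x_1 + x_2 - 2x_1x_2 - 0.5 = -2(x_1 - 0.5)(x_2 - 0.5) = 0$. That equation describes the pair of lines $x_1 = 0.5$ and $x_2 = 0.5$, so one hyperplane in the new space plays the role of two hyperplanes in the old one.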

In summary, if a dataset needs multiple hyperplanes for separation, it is not linearly separable by definition. Linear separability requires a single hyperplane that does the job, even though many such hyperplanes may exist. Needing multiple hyperplanes means the data is not linearly separable in its original space and calls for a more complex, nonlinear decision boundary.