Question: I want to implement a decision tree in which each leaf is a linear regression. Does such a model exist (preferably in sklearn)?

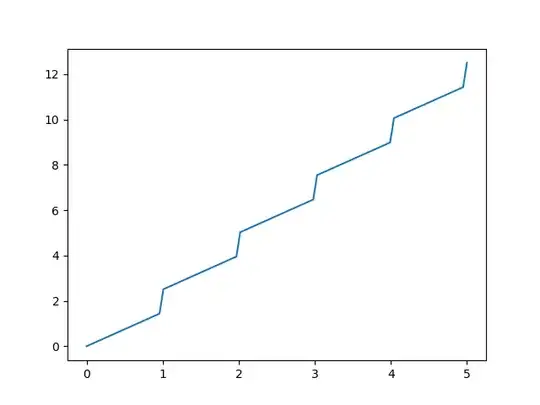

Example case 1:

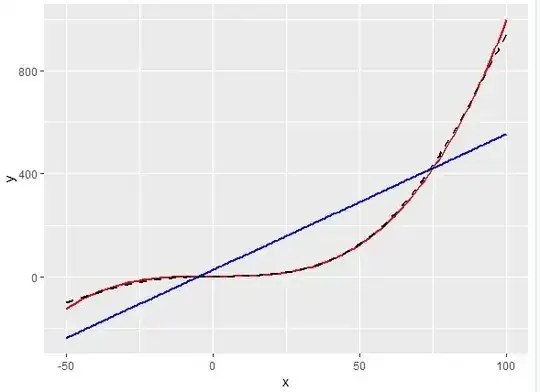

Mockup data is generated using the formula:

y = int(x) + x * 1.5

Which looks like:

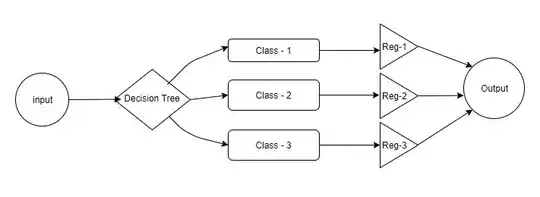

I want to solve this using a decision tree where the final decision results in a linear formula. Something like:

- 0 <= x < 1 -> y = 0 + 1.5 * x

- 1 <= x < 2 -> y = 1 + 1.5 * x

- 2 <= x < 3 -> y = 2 + 1.5 * x

- etc.
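To make the target concrete, here is a minimal sketch of the behaviour described by the rules above. It is not an existing sklearn model: the split points (`edges`) are hard-coded as an assumption, whereas a real tree would have to learn them, and the helper names `fit_piecewise_linear` and `predict_piecewise_linear` are hypothetical.

```python
import numpy as np

def fit_piecewise_linear(x, y, edges):
    """Fit one straight line per interval [edges[k], edges[k+1])."""
    models = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        mask = (x >= lo) & (x < hi)
        # Ordinary least-squares line through the points of this interval
        slope, intercept = np.polyfit(x[mask], y[mask], deg=1)
        models.append((slope, intercept))
    return models

def predict_piecewise_linear(x, edges, models):
    """Route each x to its interval (the 'leaf') and apply that leaf's line."""
    # np.digitize gives i with edges[i-1] <= x < edges[i]; shift to 0-based
    idx = np.clip(np.digitize(x, edges) - 1, 0, len(models) - 1)
    slopes = np.array([m[0] for m in models])
    intercepts = np.array([m[1] for m in models])
    return slopes[idx] * x + intercepts[idx]

# Assumed split points 0, 1, ..., 5 (hard-coded, not learned)
edges = np.arange(0, 6)

# Same mock-up formula as in the question, sampled on [0, 5)
x = np.linspace(0, 5, 200, endpoint=False)
y = np.floor(x) + 1.5 * x

models = fit_piecewise_linear(x, y, edges)
y_hat = predict_piecewise_linear(x, edges, models)
```

Each entry of `models` is a `(slope, intercept)` pair for one interval, which is the per-leaf linear formula I would like a tree to find on its own.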

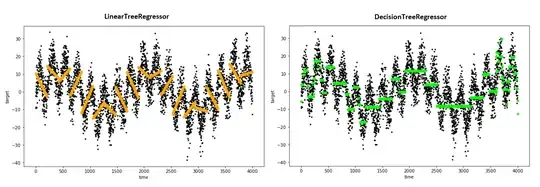

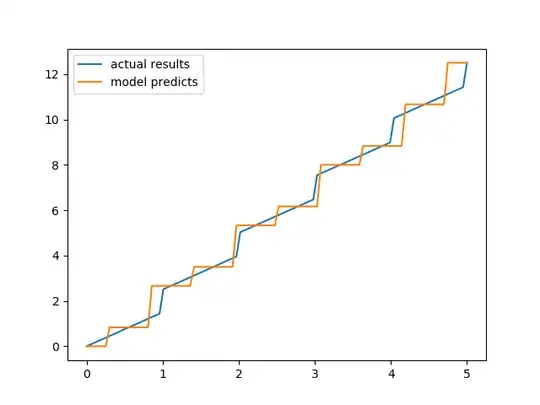

I figured this could best be done using a decision tree. After some googling, I thought the DecisionTreeRegressor from sklearn.tree could work, but it assigns every point within a range the same constant value, as shown below:

Code:

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn.tree import DecisionTreeRegressor

    # Dense grid used to plot the true function
    x = np.linspace(0, 5, 100)
    y = np.array([int(i) for i in x]) + x * 1.5

    # Sparse training sample generated with the same formula
    x_train = np.linspace(0, 5, 10)
    y_train = np.array([int(i) for i in x_train]) + x_train * 1.5

    # sklearn expects a 2-D feature matrix; the target can stay 1-D
    clf = DecisionTreeRegressor()
    clf.fit(x_train.reshape(-1, 1), y_train)

    y_result = clf.predict(x.reshape(-1, 1))

    plt.plot(x, y, label='actual results')
    plt.plot(x, y_result, label='model predicts')
    plt.legend()
    plt.show()

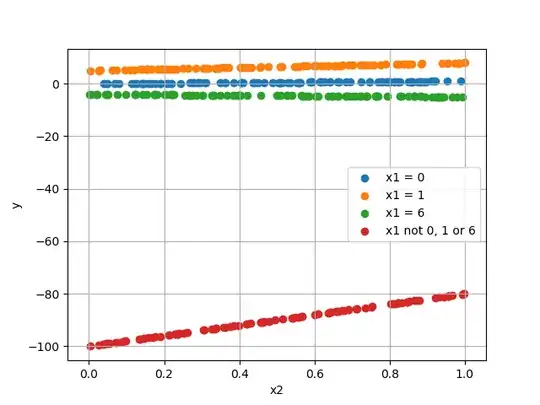
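The step-shaped prediction can also be checked numerically. The following is a minimal sketch, assuming the same training data as above (with `np.floor` standing in for `int`, which is equivalent for non-negative `x`): a fitted `DecisionTreeRegressor` can produce at most one distinct output value per leaf, no matter how densely the input is sampled.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Same 10 training points as in the question
x_train = np.linspace(0, 5, 10).reshape(-1, 1)
y_train = np.floor(x_train[:, 0]) + 1.5 * x_train[:, 0]

tree = DecisionTreeRegressor(random_state=0).fit(x_train, y_train)

# Predict on a much denser grid than the training sample
x_dense = np.linspace(0, 5, 1000).reshape(-1, 1)
pred = tree.predict(x_dense)

n_leaves = tree.get_n_leaves()
n_distinct = len(np.unique(pred))

# Every leaf stores a single constant, so the number of distinct
# predictions can never exceed the number of leaves
print(n_leaves, n_distinct)
```

This is why the plotted prediction looks like a staircase: within a leaf the output cannot vary with `x`.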

Example case 2: Instead of one input, there are two inputs, x1 and x2. The output is computed by:

- x1 = 0 -> y = 1 * x2

- x1 = 1 -> y = 3 * x2 + 5

- x1 = 6 -> y = -1 * x2 - 4

- else -> y = x2 * 20 - 100

Code:

    import matplotlib.pyplot as plt
    import random

    def get_y(x1, x2):
        # Each special value of x1 selects its own linear function of x2
        if x1 == 0:
            return x2
        if x1 == 1:
            return 3 * x2 + 5
        if x1 == 6:
            return -x2 - 4
        return x2 * 20 - 100

    # 100 samples for each of the three special x1 values
    X_0 = [(0, random.random()) for _ in range(100)]
    x2_0 = [i[1] for i in X_0]
    y_0 = [get_y(i[0], i[1]) for i in X_0]

    X_1 = [(1, random.random()) for _ in range(100)]
    x2_1 = [i[1] for i in X_1]
    y_1 = [get_y(i[0], i[1]) for i in X_1]

    X_2 = [(6, random.random()) for _ in range(100)]
    x2_2 = [i[1] for i in X_2]
    y_2 = [get_y(i[0], i[1]) for i in X_2]

    # 100 samples for the fallback case (x1 is none of 0, 1, 6)
    X_3 = [(random.randint(10, 100), random.random()) for _ in range(100)]
    x2_3 = [i[1] for i in X_3]
    y_3 = [get_y(i[0], i[1]) for i in X_3]

    plt.scatter(x2_0, y_0, label='x1 = 0')
    plt.scatter(x2_1, y_1, label='x1 = 1')
    plt.scatter(x2_2, y_2, label='x1 = 6')
    plt.scatter(x2_3, y_3, label='x1 not 0, 1 or 6')
    plt.grid()
    plt.xlabel('x2')
    plt.ylabel('y')
    plt.legend()
    plt.show()

So my question is: does a decision tree with a linear regression in each leaf exist?