I am a little confused about taking averages in cost functions and SGD. Until now I thought that in SGD you compute the average error over a batch and then backpropagate it. But a comment on this question told me that this is wrong: you need to backpropagate the error of every item in the batch individually, average the gradients you get from those backpropagations, and then update your parameters with the scaled average gradient.
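
To make the procedure from the comment concrete, here is a minimal NumPy sketch of one such update for the straight-line model $f(x) = m x + b$ used further down. The function names, the learning rate `lr`, and the toy batch are hypothetical choices for illustration, not taken from the comment.

```python
import numpy as np

def per_example_grad(m, b, x_i, y_i):
    """Gradient of one item's squared error (y_i - (m*x_i + b))**2 w.r.t. (m, b)."""
    residual = y_i - (m * x_i + b)
    return np.array([-2.0 * x_i * residual, -2.0 * residual])

def sgd_step_per_example(m, b, x_batch, y_batch, lr=0.01):
    """One update as described in the comment: backpropagate every item
    separately, average the gradients, then step against the average."""
    grads = [per_example_grad(m, b, x_i, y_i) for x_i, y_i in zip(x_batch, y_batch)]
    avg_grad = np.mean(grads, axis=0)
    return m - lr * avg_grad[0], b - lr * avg_grad[1]

# Toy batch of two items, starting from m = b = 0.
m_new, b_new = sgd_step_per_example(0.0, 0.0, np.array([1.0, 2.0]), np.array([2.0, 4.0]), lr=0.1)
print(m_new, b_new)
```

Here the per-item gradients are $(-4, -4)$ and $(-16, -8)$, so the averaged gradient is $(-10, -6)$ and the step moves $m$ to $1.0$ and $b$ to about $0.6$.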

Okay, but why is that not actually the same thing? Isn't the gradient of the average of some points the average of the gradient at these points?

The idea behind SGD is to find the minimum of a cost function $J(\theta)$ over a subset of the training items. The cost function is usually defined as the average of a per-item function $J_t(\theta)$ of the error between the prediction and the target for a single training item. Let's take MSE as an example. So if we have a batch of $N$ items, we have

$$J(\theta) : X, Y \mapsto \frac{1}{N} \sum_{i=1}^N (y_i -f(x_i))^2$$
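
As a minimal sketch (Python with NumPy is an assumption, since the question does not name a language), the batch cost above can be written with the model `f` passed in as a plain function whose parameters $\theta$ are already fixed; `mse_cost` is a hypothetical name.

```python
import numpy as np

def mse_cost(f, x, y):
    """J(theta) for one batch: mean of (y_i - f(x_i))**2, where f is the
    model evaluated with its current parameters theta."""
    return np.mean((y - f(x)) ** 2)

x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 4.0, 7.0])
print(mse_cost(lambda t: 2.0 * t, x, y))  # residuals are 0, 0, 1, so J = 1/3
```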

And we want to minimize $J(\theta)$. So we need to find its gradient:

$$\nabla \frac{1}{N}\sum_{i=1}^{N} (y_i - f(x_i))^2$$

But the derivative is linear, so

$$\nabla \frac{1}{N}\sum_{i=1}^{N} (y_i - f(x_i))^2 = \frac{1}{N}\sum_{i=1}^{N} \nabla (y_i - f(x_i))^2$$
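
One way to check this linearity argument numerically is to compare the average of per-item chain-rule gradients (what backpropagating each item separately produces) with a finite-difference gradient of the averaged loss. The sketch below assumes a hypothetical nonlinear model $f(x) = \sigma(w x + c)$, so the check does not rely on $f$ being linear; the data, `theta`, and the step size `eps` are arbitrary illustrative choices.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def item_grad(theta, x_i, y_i):
    """Per-item gradient of (y_i - sigmoid(w*x_i + c))**2 via the chain rule,
    i.e. what backpropagating a single item would produce."""
    w, c = theta
    s = sigmoid(w * x_i + c)
    upstream = -2.0 * (y_i - s) * s * (1.0 - s)  # dLoss/dz for z = w*x_i + c
    return np.array([upstream * x_i, upstream])

def batch_loss(theta, x, y):
    """J(theta): the average of the per-item squared errors."""
    w, c = theta
    return np.mean((y - sigmoid(w * x + c)) ** 2)

def numerical_grad(fn, theta, eps=1e-6):
    """Central finite-difference gradient of the scalar function fn at theta."""
    grad = np.zeros_like(theta)
    for k in range(theta.size):
        step = np.zeros_like(theta)
        step[k] = eps
        grad[k] = (fn(theta + step) - fn(theta - step)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
x = rng.normal(size=8)
y = rng.uniform(size=8)
theta = np.array([0.7, -0.3])

# Backpropagate each item separately, then average the gradients.
mean_of_grads = np.mean([item_grad(theta, xi, yi) for xi, yi in zip(x, y)], axis=0)
# Differentiate the averaged loss directly (numerically).
grad_of_mean = numerical_grad(lambda t: batch_loss(t, x, y), theta)
print(np.allclose(mean_of_grads, grad_of_mean))  # True
```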

What am I doing wrong here?

Another example: say we do linear regression with a line $f(x) = m x + b$. Then the partial derivatives with respect to $m$ and $b$ are

\begin{align*} \frac{\partial J(\theta)}{\partial m} &= \frac{1}{N} \frac{\partial}{\partial m} \sum_{i=1}^N (y_i -f(x_i))^2 & \texttt{factor rule}\\ &= \frac{1}{N} \sum_{i=1}^N \frac{\partial}{\partial m} (y_i -f(x_i))^2 & \texttt{sum rule}\\ &= \frac{1}{N} \sum_{i=1}^N 2(y_i -f(x_i)) \frac{\partial}{\partial m} (y_i -f(x_i)) & \texttt{chain rule}\\ &= \frac{1}{N} \sum_{i=1}^N 2(y_i -f(x_i)) \frac{\partial}{\partial m} (y_i - (mx_i + b)) & \texttt{definition } f\\ &= \frac{1}{N} \sum_{i=1}^N 2(y_i -f(x_i)) (-x_i) & \texttt{differentiate}\\ &= -\frac{2}{N} \sum_{i=1}^N x_i(y_i -f(x_i)) & \texttt{comm., distr.} \end{align*}

\begin{align*} \frac{\partial J(\theta)}{\partial b} &= \frac{1}{N} \frac{\partial}{\partial b} \sum_{i=1}^N (y_i -f(x_i))^2 & \texttt{factor rule}\\ &= \frac{1}{N} \sum_{i=1}^N \frac{\partial}{\partial b} (y_i -f(x_i))^2 & \texttt{sum rule}\\ &= \frac{1}{N} \sum_{i=1}^N 2(y_i -f(x_i)) \frac{\partial}{\partial b} (y_i -f(x_i)) & \texttt{chain rule}\\ &= \frac{1}{N} \sum_{i=1}^N 2(y_i -f(x_i)) \frac{\partial}{\partial b} (y_i - (mx_i + b)) & \texttt{definition } f\\ &= \frac{1}{N} \sum_{i=1}^N 2(y_i -f(x_i)) (-1) & \texttt{differentiate}\\ &= -\frac{2}{N} \sum_{i=1}^N (y_i -f(x_i)) & \texttt{comm., distr.} \end{align*}
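
The two closed-form partials can be checked against central finite differences of $J$. This is a minimal NumPy sketch on synthetic data (the slope 3.0, intercept 0.5, and noise level are arbitrary assumptions); `analytic_grad`, `cost`, and `finite_diff_grad` are hypothetical helper names.

```python
import numpy as np

def analytic_grad(m, b, x, y):
    """The partial derivatives derived above for J = mean((y - (m*x + b))**2)."""
    residual = y - (m * x + b)
    dJ_dm = -2.0 * np.mean(x * residual)  # -2/N * sum x_i (y_i - f(x_i))
    dJ_db = -2.0 * np.mean(residual)      # -2/N * sum (y_i - f(x_i))
    return np.array([dJ_dm, dJ_db])

def cost(m, b, x, y):
    return np.mean((y - (m * x + b)) ** 2)

def finite_diff_grad(m, b, x, y, eps=1e-6):
    """Central differences of the cost in m and in b."""
    dm = (cost(m + eps, b, x, y) - cost(m - eps, b, x, y)) / (2 * eps)
    db = (cost(m, b + eps, x, y) - cost(m, b - eps, x, y)) / (2 * eps)
    return np.array([dm, db])

rng = np.random.default_rng(1)
x = rng.normal(size=20)
y = 3.0 * x + 0.5 + rng.normal(scale=0.1, size=20)
print(np.allclose(analytic_grad(1.0, 0.0, x, y), finite_diff_grad(1.0, 0.0, x, y)))
```

Because $J$ is quadratic in $m$ and $b$, central differences are exact up to rounding here, so the two results should agree closely.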

I don't see an error here, and gradient descent also works with these partial derivatives (I tested this in an implementation). So... what am I missing?
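
For reference, a minimal mini-batch gradient descent loop built on the same two partial derivatives might look like the sketch below. The synthetic data, learning rate, batch size, and epoch count are illustrative assumptions, not values from the implementation mentioned above.

```python
import numpy as np

def analytic_grad(m, b, x, y):
    """Batch partials of J = mean((y - (m*x + b))**2) with respect to m and b."""
    residual = y - (m * x + b)
    return -2.0 * np.mean(x * residual), -2.0 * np.mean(residual)

def minibatch_sgd(x, y, lr=0.05, batch_size=16, epochs=200, seed=0):
    """Shuffle each epoch, then step against the averaged gradient of each mini-batch."""
    rng = np.random.default_rng(seed)
    m, b = 0.0, 0.0
    n = len(x)
    for _ in range(epochs):
        order = rng.permutation(n)
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            dm, db = analytic_grad(m, b, x[idx], y[idx])
            m -= lr * dm
            b -= lr * db
    return m, b

rng = np.random.default_rng(42)
x = rng.uniform(-1.0, 1.0, size=200)
y = 3.0 * x + 0.5 + rng.normal(scale=0.05, size=200)
m, b = minibatch_sgd(x, y)
print(round(m, 2), round(b, 2))  # should land near 3.0 and 0.5
```

Each update uses the gradient of the batch-averaged cost, which by the linearity argument is the same vector you would get by averaging the per-item gradients.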

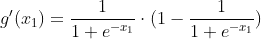

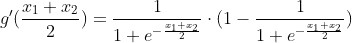

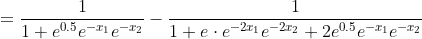

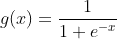

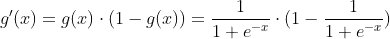

and calculate the mean of the sigmoid's gradient with respect to them: