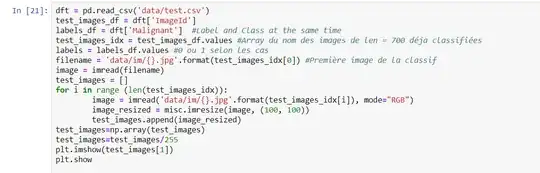

I am trying to classify images and assign each one the label 1 or 0 (skin cancer or not).

I am aware of the three main causes of a network giving the same output for every input.

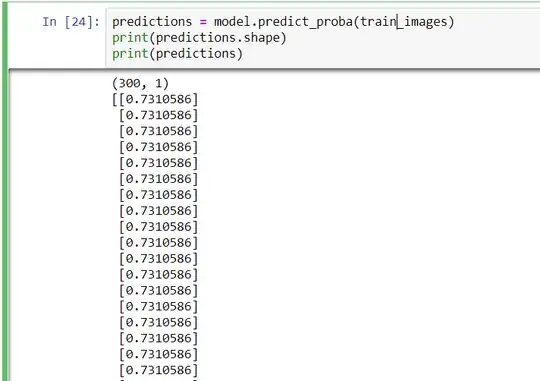

I did not split the data set; I'm just applying the CNN to the training set. I know that doesn't make sense as an evaluation, but it's only to check how it behaves. (Predicting on the unlabeled data gives exactly the same probability as well.)
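Before changing the training setup, it can help to confirm numerically that the predictions really are constant and that the inputs themselves are not degenerate (for example, every image becoming all zeros after a loading or scaling bug). The sketch below assumes the preprocessed images are a NumPy array of shape `(N, H, W, C)` and the predicted class-1 probabilities are an array of shape `(N,)` or `(N, 1)` (e.g. the output of Keras `model.predict`, if the model is a Keras model). The function name `collapse_report` and the tolerance are illustrative assumptions, not part of the original program.

```python
import numpy as np


def collapse_report(images, probs, tol=1e-6):
    """Summarise whether the network inputs and predictions actually vary.

    images: assumed array of shape (N, H, W, C) as fed to the network.
    probs:  assumed array of shape (N,) or (N, 1) of class-1 probabilities.
    """
    images = np.asarray(images, dtype=np.float64)
    probs = np.asarray(probs, dtype=np.float64).ravel()

    # Per-image pixel spread: an image with spread ~0 is flat (all one value),
    # which often points to a loading or scaling bug.
    per_image_std = images.reshape(len(images), -1).std(axis=1)

    return {
        "pred_min": float(probs.min()),
        "pred_max": float(probs.max()),
        "pred_std": float(probs.std()),
        "predictions_constant": bool(probs.max() - probs.min() < tol),
        "n_flat_images": int((per_image_std < tol).sum()),
        # Different images should not be identical after preprocessing.
        "inputs_identical": bool(np.allclose(images, images[:1])),
    }
```

If `predictions_constant` is true while `n_flat_images` is 0 and `inputs_identical` is false, the problem is in the network or its training rather than in the data pipeline.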

I have verified the three main points:

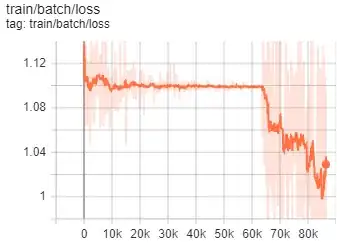

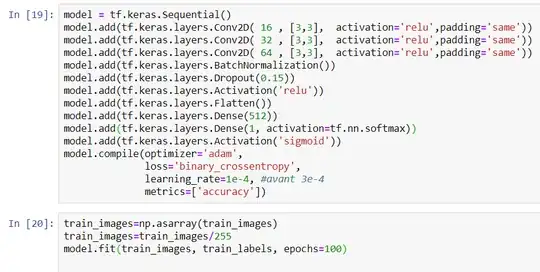

1. Scaling the data (both image size and pixel intensity values).
2. Using a low learning rate.
3. Training for only a few epochs (6 at most) because of the computation time. Is it worth letting it run for a whole day just to see the results with more epochs?
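For reference, point 1 (scaling both image size and pixel intensities) could look like the NumPy-only sketch below. It assumes the raw images are `uint8` arrays of shape `(H, W, C)`; the target size `(64, 64)`, the nearest-neighbour resize, and the helper names are illustrative assumptions, since the actual preprocessing in the program is only visible in the screenshots. The per-channel standardisation statistics should be computed on the training set and reused on new data.

```python
import numpy as np


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of an (H, W, C) array using only NumPy."""
    in_h, in_w = image.shape[:2]
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    return image[rows][:, cols]


def preprocess(images_uint8, size=(64, 64)):
    """Resize every image and scale intensities from [0, 255] to [0, 1]."""
    resized = np.stack([resize_nearest(img, *size) for img in images_uint8])
    return resized.astype(np.float32) / 255.0


def standardize(x, mean=None, std=None):
    """Zero mean, unit variance per channel; pass train stats for new data."""
    if mean is None:
        mean = x.mean(axis=(0, 1, 2))
    if std is None:
        std = x.std(axis=(0, 1, 2)) + 1e-7  # avoid division by zero
    return (x - mean) / std, mean, std
```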

Still, I can't understand how bad training could lead the network to give the same class probability every time.
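One possible explanation, offered as an assumption rather than a diagnosis of this particular model: if the network's output stops depending on the input (for example because of dead ReLU units or a saturated final sigmoid), the lowest binary cross-entropy it can reach is with a single constant probability, and that constant is the fraction of positive labels. Setting the derivative of the mean loss $-(\bar{y}\log p + (1-\bar{y})\log(1-p))$ to zero gives $-\bar{y}/p + (1-\bar{y})/(1-p) = 0$, i.e. $p = \bar{y}$. The sketch below checks this numerically with a grid search; the label array is made up for illustration.

```python
import numpy as np


def mean_bce(y, p, eps=1e-12):
    """Mean binary cross-entropy when every sample gets the same prediction p."""
    p = np.clip(p, eps, 1 - eps)  # keep log() finite at p = 0 or 1
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def best_constant_prediction(y, grid_size=10001):
    """Grid-search the constant output in [0, 1] with the lowest loss."""
    grid = np.linspace(0.0, 1.0, grid_size)
    losses = [mean_bce(y, p) for p in grid]
    return float(grid[int(np.argmin(losses))])


# Hypothetical labels: 30% positive.
y = np.array([1] * 30 + [0] * 70)
best = best_constant_prediction(y)  # close to y.mean()
```

So if every prediction sits near the share of class 1 in the training labels, that is a strong hint the network has collapsed to the class prior.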

I tried the on-batch options, etc., but it doesn't change anything.

Accuracy is very low, since this kind of classification is not really suited to CNNs, but that shouldn't explain the weird result.
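When judging whether the accuracy is "very low", it may help to compare it with the accuracy of always predicting the most frequent class: a model that outputs one constant probability scores exactly that baseline. The sketch assumes labels are a 0/1 NumPy array and predictions are class-1 probabilities thresholded at 0.5; the helper names are hypothetical.

```python
import numpy as np


def accuracy(y_true, probs, threshold=0.5):
    """Fraction of correct labels after thresholding predicted probabilities."""
    y_pred = (np.asarray(probs).ravel() >= threshold).astype(int)
    return float(np.mean(y_pred == np.asarray(y_true).ravel()))


def majority_baseline(y_true):
    """Accuracy obtained by always predicting the most frequent class."""
    pos_rate = float(np.mean(y_true))
    return max(pos_rate, 1.0 - pos_rate)
```

If the reported accuracy equals `majority_baseline(y)` on the training labels, the network is most likely predicting the same class for every image.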

Here are different parts of the program:

Model:

Thanks for the help, and sorry for the ugly screenshots.