In my CNN model, by using large number of epochs like 400 or above, the validations accuracy and some times test accuracy gets better, but I think this large number of epochs is not good idea? I am right or not? why?

3 Answers

If your model is still improving (according to the validation loss), then more epochs are better. You can confirm this by using a hold-out test set to compare model checkpoints e.g. at epoch 100, 200, 400, 500.

Normally the amount of improvement reduces with time ("diminishing returns"), so it is common to stop once the curves is pretty-much flat, for example using EarlyStopping callback.

Different model requires different times to trains, depending on their size/architecture, and the dateset. Some examples of large models being trained on the ImageNet dataset (~1,000,000 labelled images of ~1000 classes):

- the original YOLO model trained in 160 epochs

- the ResNet model can be trained in 35 epoch

- fully-conneted DenseNet model trained in 300 epochs

The number of epochs you require will depend on the size of your model and the variation in your dataset.

The size of your model can be a rough proxy for the complexity that it is able to express (or learn). So a huge model can represent produce more nuanced models for datasets with higher diversity in the data, however would probably take longer to train i.e. more epochs.

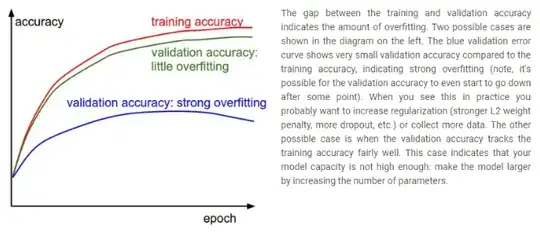

Whilst training, I would recommend plotting the training and validation loss and keeping an eye on how they progress over epochs and also in relation to one another. You should of course expect both values to decrease, but you need to stop training once the lines start diverging - meaning that you are over-fitting to your specific dataset.

That is likely to happen if you train a large CNN for many epochs, and the graph could look something like this:

- 15,468

- 2

- 33

- 52

Assuming you track the performance with a validation set, as long as validation error is decreasing, more epochs are beneficial, model is improving on seen (training) and unseen (validation) data.

As soon as validation error starts to increase, it signals that model is over-fitting on training data, thus the learning process should be stopped. This method is independent of the details of a model.

In practice, save the model corresponding to the best validation error found so far, and stop the process only when validation error is consistently increasing. This can be done manually or with an ad-hoc threshold based on the distance of current validation error from the minimum error so far. This way, learning will not be stopped prematurely due to fluctuations in validation error.

- 9,553

- 2

- 34

- 49

It depends…

Smaller batch size will have increased performance on evaluation metrics (e.g., accuracy) because the model will make more frequent updates to the parameters.

Larger batch size will increase throughput (e.g., the number of images processed per second).

The trade-off often looks like a small drop in evaluation metrics with a large gain in throughput for increases in batch size. Thus if absolute performance on evaluation metrics is the only criteria, use small batches and take a long training time. If a dip in evaluation metrics is okay, you can greatly speed-up training by increasing batch size. For example, many applied models can be trained with batch sizes of 2048.

Overall, it is best to pick a multiple of 32 (32, 64, 256, 2048) in order to maximize the speed of data loading. The choice of which multiple of 32 often times depends on the architecture of the hardware that the model is training on.

It would be a good idea to empirically benchmark your model's performance, both evaluation metrics and training time, for different batch sizes.

- 23,131

- 2

- 29

- 113