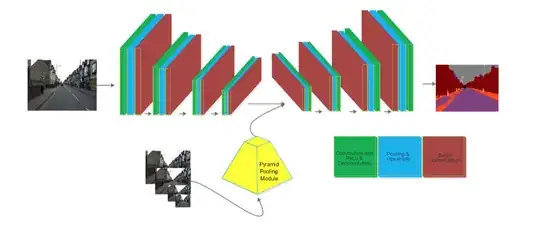

I designed a custom Convolutional Neural Network for research in image processing. At several points in the network, two tensors have to be merged into a single tensor before being fed to the next layer. There are several ways to do this, such as addition, multiplication, and concatenation. The results are slightly better when I use addition in the pyramid pooling module (between the two convolutions shown in the second image) and multiplication in the last step of the network. I used `tf.math.add` and `tf.math.multiply`, which perform the operations element-wise. The whole network is shown in the first image.
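For reference, here is a minimal NumPy sketch of the three merge options for two channels-last feature maps of equal shape. NumPy stands in for TensorFlow so the sketch has no framework dependency; for equal-shaped inputs the results match `tf.math.add`, `tf.math.multiply`, and `tf.concat([a, b], axis=-1)`. The helper name `merge` and the tensor shapes are hypothetical.

```python
import numpy as np

def merge(a: np.ndarray, b: np.ndarray, mode: str) -> np.ndarray:
    """Fuse two feature maps of shape (N, H, W, C), channels-last as in TF/Keras.

    Hypothetical helper for illustration; requires equal shapes (no broadcasting).
    """
    if a.shape != b.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    if mode == "add":
        # Like tf.math.add: element-wise sum, channel count unchanged.
        return a + b
    if mode == "multiply":
        # Like tf.math.multiply: element-wise (Hadamard) product, channel count unchanged.
        return a * b
    if mode == "concat":
        # Like tf.concat([a, b], axis=-1): channels are stacked, so C doubles.
        return np.concatenate([a, b], axis=-1)
    raise ValueError(f"unknown mode: {mode}")

rng = np.random.default_rng(0)
conv1 = rng.standard_normal((1, 8, 8, 16)).astype(np.float32)  # hypothetical conv1 output
conv2 = rng.standard_normal((1, 8, 8, 16)).astype(np.float32)  # hypothetical conv2 output

for mode in ("add", "multiply", "concat"):
    print(mode, merge(conv1, conv2, mode).shape)
# add (1, 8, 8, 16)
# multiply (1, 8, 8, 16)
# concat (1, 8, 8, 32)
```

Note that addition and multiplication keep the channel count, while concatenation doubles it, so the layer that follows a concatenation has twice as many input channels (and weights) to learn.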

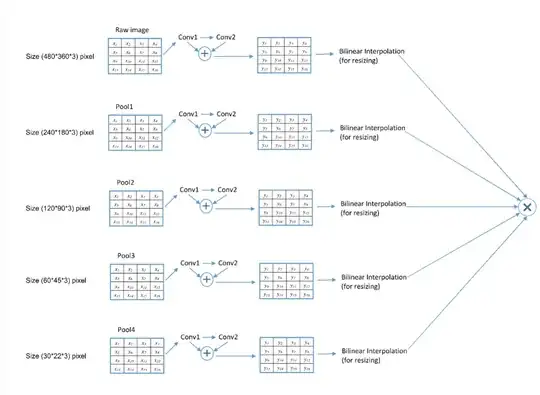

The second image shows the pyramid pooling module, which combines feature maps computed at several scales.
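To make the multi-scale idea concrete, here is a framework-free sketch of a PSPNet-style pyramid pooling module. It is an assumption about the general design, not the module in the second image: the bin sizes (1, 2, 3, 6), nearest-neighbour upsampling, the omission of the per-branch 1×1 convolutions, and the `fuse` switch between concatenation and addition are all illustrative choices.

```python
import numpy as np

def pyramid_pool(x: np.ndarray, bins=(1, 2, 3, 6), fuse: str = "concat") -> np.ndarray:
    """PSPNet-style pyramid pooling on a channels-last map of shape (N, H, W, C).

    Illustrative sketch: assumes H and W are divisible by every bin size and
    omits the learned 1x1 convolutions that PSPNet applies to each branch.
    """
    n, h, w, c = x.shape
    branches = [x]
    for b in bins:
        if h % b or w % b:
            raise ValueError(f"H={h}, W={w} not divisible by bin size {b}")
        # Average-pool into a b x b grid of blocks.
        pooled = x.reshape(n, b, h // b, b, w // b, c).mean(axis=(2, 4))
        # Nearest-neighbour upsampling back to H x W.
        up = np.repeat(np.repeat(pooled, h // b, axis=1), w // b, axis=2)
        branches.append(up)
    if fuse == "concat":
        return np.concatenate(branches, axis=-1)  # (N, H, W, C * (1 + len(bins)))
    if fuse == "add":
        return np.sum(branches, axis=0)           # (N, H, W, C)
    raise ValueError(f"unknown fuse mode: {fuse}")

x = np.random.default_rng(0).standard_normal((2, 12, 12, 3))
print(pyramid_pool(x).shape)              # (2, 12, 12, 15)
print(pyramid_pool(x, fuse="add").shape)  # (2, 12, 12, 3)
```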

I would like to understand how the addition and multiplication operations behave when they are used to merge tensors in a deep neural network.

The question is:

Why does addition (between conv1 and conv2) give better final accuracy (precision) and mean Intersection over Union (mIoU) than multiplication or concatenation when I merge two tensors into one?
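One standard way to compare the three merges is through the gradients they send back to each branch. For a loss $\mathcal{L}$, branch outputs $a$ and $b$, merged output $z$, element-wise product $\odot$, and a linear map $W$ (e.g. a 1×1 convolution) applied after concatenation, the following identities hold. They are a starting point for the analysis, not a complete explanation of the observed results:

$$
\begin{aligned}
z = a + b &\;\Rightarrow\; \frac{\partial \mathcal{L}}{\partial a} = \frac{\partial \mathcal{L}}{\partial z},\\
z = a \odot b &\;\Rightarrow\; \frac{\partial \mathcal{L}}{\partial a} = \frac{\partial \mathcal{L}}{\partial z} \odot b,\\
W\,[a;\, b] &\;=\; W_a\, a + W_b\, b, \qquad W = [\,W_a \;\; W_b\,].
\end{aligned}
$$

Addition passes the upstream gradient to both branches unchanged, as in a residual connection. Multiplication scales it by the other branch's activations, which can shrink or amplify it. A linear layer after concatenation is equivalent to adding two separately weighted branches, so it can represent addition (with $W_a = W_b$) but has more parameters to fit.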