I need your help to find a flaw in my model, since it's accuracy (95%) is not realistic.

I'm working on a classification problem using Randomforest, with around 2500 positive case and 15000 negative ones, 75 independent variables. Here's the core of my code:

# Splitting the dataset into the Training set and Test set

from sklearn.cross_validation import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.25, random_state = 0)

# Fitting Random Forest Classification to the Training set

from sklearn.ensemble import RandomForestClassifier

classifier = RandomForestClassifier(n_estimators = 900, criterion = 'gini', random_state = 0)

classifier.fit(X_train, y_train)

# Predicting Test set results

y_pred = classifier.predict(X_test)

# Making the Confusion Matrix

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_pred)

I've optimized the hyperparameters through grid search and performed a k-fold cross validation, reporting 0.9444 as accuracies mean. Confusion matrix:

[[3390, 85],

[ 101, 516]]

showing 97.6% accuracy.

Did I miss something?

NOTE: the database is composed by 2500 Italian mafia firms' financial reports, and 15000 lawful firms randomly sampled from the same regions as negative cases.

Thank you guys!

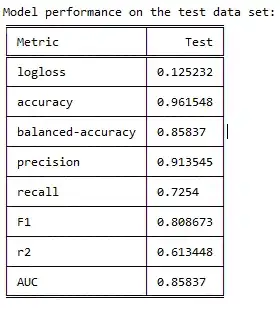

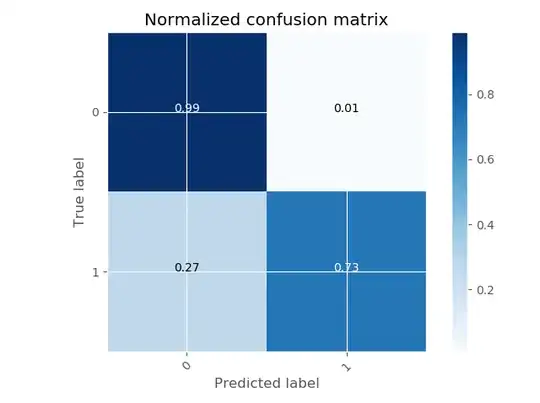

EDIT: I upload the metrics and cm. The model is actually performing well, but looking at the metrics and cm, it shows more realistic values regarding logloss and recall, so I assume it is fine.