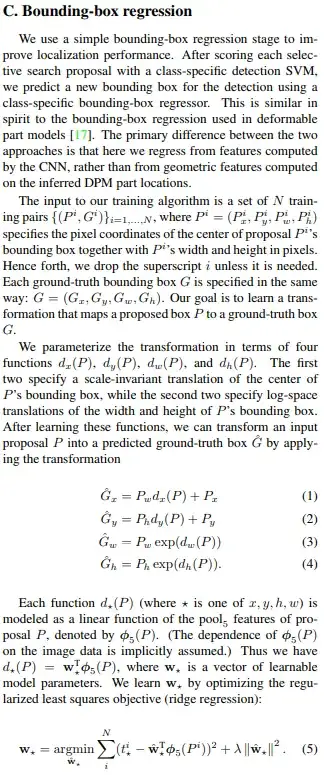

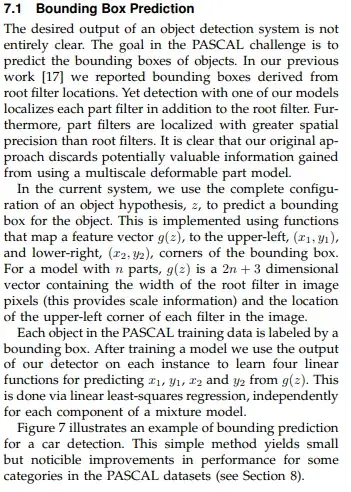

In the Fast R-CNN paper (https://arxiv.org/abs/1504.08083) by Ross Girshick, the bounding box parameters are continuous variables that are predicted by regression. Unlike the classification outputs of a neural network, these values do not represent class probabilities. Rather, they are real-valued quantities describing the position and size of a bounding box.
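To make the question concrete, here is a minimal sketch of what I understand the regression targets and loss to look like. It is not the paper's code. It assumes boxes are given as (center x, center y, width, height), uses the R-CNN-style offset encoding relative to a proposal box, and uses the smooth L1 loss described in the paper. The function names `box_to_targets`, `smooth_l1`, and `box_loss` are hypothetical, and the numbers are made up for illustration.

```python
import math

def box_to_targets(proposal, ground_truth):
    """Encode a ground-truth box relative to a proposal as (tx, ty, tw, th).

    Assumption: both boxes are (center_x, center_y, width, height).
    """
    px, py, pw, ph = proposal
    gx, gy, gw, gh = ground_truth
    return (
        (gx - px) / pw,      # horizontal shift, scaled by proposal width
        (gy - py) / ph,      # vertical shift, scaled by proposal height
        math.log(gw / pw),   # log-ratio of widths
        math.log(gh / ph),   # log-ratio of heights
    )

def smooth_l1(x):
    """Smooth L1: quadratic near zero, linear for |x| >= 1."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5

def box_loss(predicted, targets):
    """Sum of smooth L1 over the four box coordinates."""
    return sum(smooth_l1(p - t) for p, t in zip(predicted, targets))

# Made-up example: the true box is shifted by (+4, -4) pixels, same size.
proposal = (50.0, 50.0, 20.0, 40.0)
ground_truth = (54.0, 46.0, 20.0, 40.0)
targets = box_to_targets(proposal, ground_truth)

# Pretend the network's four regression outputs are all zero ("no correction").
predicted = (0.0, 0.0, 0.0, 0.0)
loss = box_loss(predicted, targets)
print(targets, loss)
```

If this picture is right, the four outputs are just real numbers with no softmax applied, and the loss above is what gets backpropagated for the box branch.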

How exactly this regression is learned is not clear to me. Linear regression and image classification with deep learning are each well explained separately, but how regression works inside a CNN is not explained as clearly.

Can you explain the basic concept in simple terms?