I want to do one-step-ahead predictions for time series with LSTM. To understand the algorithm, I built myself a toy example: A simple autocorrelated process.

def my_process(n, p, drift=0, displacement=0):

x = np.zeros(n)

for i in range(1, n):

x[i] = drift * i + p * x[i-1] + (1-p) * np.random.randn()

return x + displacement

Then I built an LSTM model in Keras, following this example.

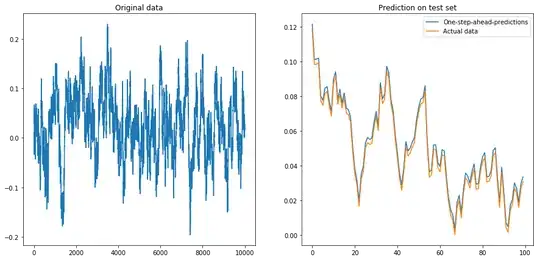

I simulated processes with high autocorrelation p=0.99 of length n=10000, trained the neural network on the first 80% of it and let it do one-step-ahead predictions for the remaning 20%.

If I set drift=0, displacement=0, everything works fine:

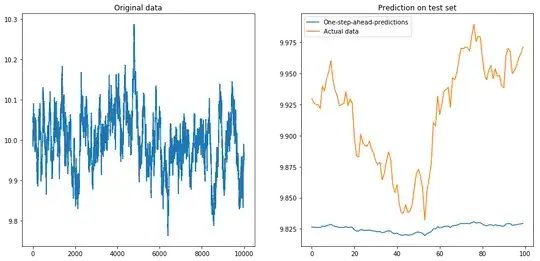

Then I set drift=0, displacement=10 and things went pear-shaped (notice the different scale on the y-axis):

This is not terribly surprising: LSTMs should be fed with normalized data!

So I normalized the data by rescaling it to the interval $[-1, 1]$.

Phew, things are fine again:

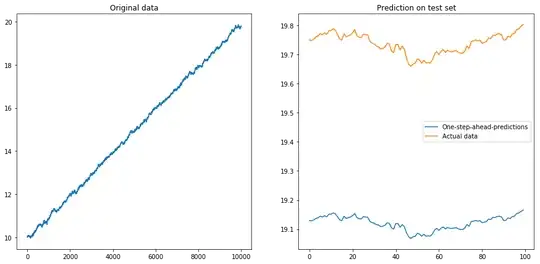

Then I set drift=0.00001, displacement=10, normalized the data again and ran the algorithm on it.

This does not look good:

Apparently the LSTM cannot deal with a drift.

What to do? (Yes, in this toy example I could simply subtract the drift; but for real-world time series, this is much harder).

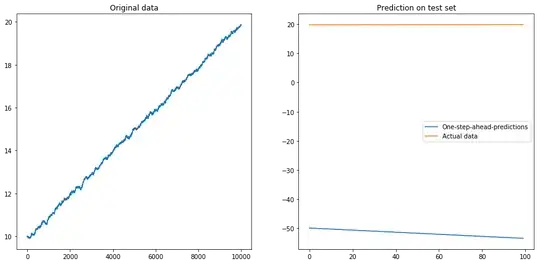

Maybe I could run my LSTM on the difference $X_{t} - X_{t-1}$ instead of the original time series $X_t$. This will remove any constant drift from the time series.

But running the LSTM on the differenced time series does not work at all:

My question: Why does my algorithm break down when I use it on the differenced time series? What is a good way to deal with drifts in time series?

Here is the full code for my model:

import numpy as np

import matplotlib.pyplot as plt

np.random.seed(42)

from keras.layers.core import Dense, Activation, Dropout

from keras.layers.recurrent import LSTM

from keras.models import Sequential

# The LSTM model

my_model = Sequential()

my_model.add(LSTM(input_shape=(1, 1), units=50, return_sequences=True))

my_model.add(Dropout(0.2))

my_model.add(LSTM(units=100, return_sequences=False))

my_model.add(Dropout(0.2))

my_model.add(Dense(units=1))

my_model.add(Activation('linear'))

my_model.compile(loss='mse', optimizer='rmsprop')

def my_prediction(x, model, normalize=False, difference=False):

# Plot the process x

plt.figure(figsize=(15, 7))

plt.subplot(121)

plt.plot(x)

plt.title('Original data')

n = len(x)

thrs = int(0.8 * n) # Train-test split

# Save starting values for test set to reverse differencing

x_test_0 = x[thrs + 1]

# Save minimum and maximum on test set to reverse normalization

x_min = min(x[:thrs])

x_max = max(x[:thrs])

if difference:

x = np.diff(x) # Take difference to remove drift

if normalize:

x = (2*x - x_min - x_max) / (x_max - x_min) # Normalize to [-1, 1]

# Split into train and test set. The model will be trained on one-step-ahead predictions.

x_train, y_train, x_test, y_test = x[0:(thrs-1)], x[1:thrs], x[thrs:(n-1)], x[(thrs+1):n]

x_train, x_test = x_train.reshape(-1, 1, 1), x_test.reshape(-1, 1, 1)

y_train, y_test = y_train.reshape(-1, 1), y_test.reshape(-1, 1)

# Fit the model

model.fit(x_train, y_train, batch_size=200, epochs=10, validation_split=0.05, verbose=0)

# Predict the test set

y_pred = model.predict(x_test)

# Reverse differencing and normalization

if normalize:

y_pred = ((x_max - x_min) * y_pred + x_max + x_min) / 2

y_test = ((x_max - x_min) * y_test + x_max + x_min) / 2

if difference:

y_pred = x_test_0 + np.cumsum(y_pred)

y_test = x_test_0 + np.cumsum(y_test)

# Plot estimation

plt.subplot(122)

plt.plot(y_pred[-100:], label='One-step-ahead-predictions')

plt.plot(y_test[-100:], label='Actual data')

plt.title('Prediction on test set')

plt.legend()

plt.show()

# Make plots

x = my_process(10000, 0.99, drift=0, displacement=0)

my_prediction(x, my_model, normalize=False, difference=False)

x = my_process(10000, 0.99, drift=0, displacement=10)

my_prediction(x, my_model, normalize=False, difference=False)

x = my_process(10000, 0.99, drift=0, displacement=10)

my_prediction(x, my_model, normalize=True, difference=False)

x = my_process(10000, 0.99, drift=0.00001, displacement=10)

my_prediction(x, my_model, normalize=True, difference=False)

x = my_process(10000, 0.99, drift=0.00001, displacement=10)

my_prediction(x, my_model, normalize=True, difference=True)