Given this test data:

```python
import pandas as pd
import numpy as np

data = {'date': ['2014-05-01 18:47:05.069722', '2014-05-01 18:47:05.119994',
                 '2014-05-02 18:47:05.178768', '2014-05-02 18:47:05.230071',
                 '2014-05-02 18:47:05.230071', '2014-05-02 18:47:05.280592',
                 '2014-05-03 18:47:05.332662', '2014-05-03 18:47:05.385109',
                 '2014-05-04 18:47:05.436523', '2014-05-04 18:47:05.486877'],
        'battle_deaths': [34, 25, 26, 15, 15, 14, 26, 25, 62, 41],
        'prisioners': [3, 4, 3, 2, 2, 6, 4, 5, 2, 8]}

df = pd.DataFrame(data, columns=['date', 'battle_deaths', 'prisioners'])
```

Set a datetime index:

```python
df = df.set_index(pd.DatetimeIndex(df['date']), drop=True)
del df['date']
```

Resampling to milliseconds works, but it takes too long:

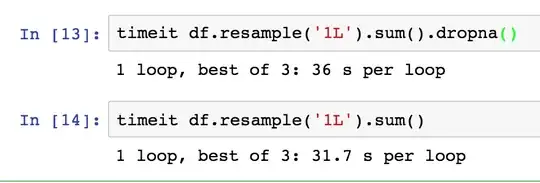

```python
%timeit df.resample('1L').sum()
```
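A rough way to see where the time goes: `resample('1L')` builds one row for every millisecond between the first and the last timestamp, whether or not any data falls into it. The sketch below only does arithmetic on the index (so it runs instantly) and counts those bins for the test data. With samples spread over three days that comes to roughly 259 million bins, of which only 9 contain any rows.

```python
import pandas as pd

# Same timestamps as the test data above
dates = ['2014-05-01 18:47:05.069722', '2014-05-01 18:47:05.119994',
         '2014-05-02 18:47:05.178768', '2014-05-02 18:47:05.230071',
         '2014-05-02 18:47:05.230071', '2014-05-02 18:47:05.280592',
         '2014-05-03 18:47:05.332662', '2014-05-03 18:47:05.385109',
         '2014-05-04 18:47:05.436523', '2014-05-04 18:47:05.486877']
idx = pd.DatetimeIndex(dates)

# Millisecond bins that resample('1L') has to materialise,
# from the first bin to the last bin (both inclusive)
first, last = idx.min().floor('ms'), idx.max().floor('ms')
n_bins = (last - first) // pd.Timedelta(1, unit='ms') + 1

# Millisecond bins that actually contain at least one row
n_nonempty = idx.floor('ms').nunique()

print(f"{n_bins:,} bins in total, {n_nonempty} of them non-empty")
```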

I guess this is because it also aggregates every empty millisecond (the NaN rows), but when I drop those:

```python
%timeit df.resample('1L').sum().dropna()
```

it takes even longer.
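One detail worth checking, depending on the pandas version: since pandas 0.22, `sum()` over an empty bin returns 0 rather than NaN (its `min_count` parameter defaults to 0), so `dropna()` after `resample(...).sum()` may not remove anything at all. Passing `min_count=1` turns empty bins back into NaN. The sketch below shows this at an hourly frequency (`'1h'` is chosen only so it runs quickly); note that it does not make the resample itself faster, because every bin is still computed.

```python
import pandas as pd

data = {'date': ['2014-05-01 18:47:05.069722', '2014-05-01 18:47:05.119994',
                 '2014-05-02 18:47:05.178768', '2014-05-02 18:47:05.230071',
                 '2014-05-02 18:47:05.230071', '2014-05-02 18:47:05.280592',
                 '2014-05-03 18:47:05.332662', '2014-05-03 18:47:05.385109',
                 '2014-05-04 18:47:05.436523', '2014-05-04 18:47:05.486877'],
        'battle_deaths': [34, 25, 26, 15, 15, 14, 26, 25, 62, 41],
        'prisioners': [3, 4, 3, 2, 2, 6, 4, 5, 2, 8]}
df = pd.DataFrame(data, columns=['date', 'battle_deaths', 'prisioners'])
df = df.set_index(pd.DatetimeIndex(df['date']))
del df['date']

# Default min_count=0: empty hourly bins sum to 0, so dropna() keeps them all
r0 = df.resample('1h').sum()

# min_count=1: a bin needs at least one value, otherwise it becomes NaN
r1 = df.resample('1h').sum(min_count=1)

print(len(r0), len(r0.dropna()))  # every bin survives dropna()
print(len(r1), len(r1.dropna()))  # only bins that contain data survive
```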

My guess, again, is that `dropna` only runs after the full resample has been computed. Is there any way to skip the empty (NaN) bins during the resample so that the process is faster?
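One possible workaround, sketched here as an alternative to `resample` rather than a tuned solution: group on the timestamps floored to the millisecond. `groupby` only creates groups for keys that actually occur, so the cost scales with the number of rows instead of the number of milliseconds in the time span, and there are no empty bins to drop afterwards. The group labels are the left millisecond edges, i.e. the same labels `resample('1L')` gives its non-empty bins.

```python
import pandas as pd

data = {'date': ['2014-05-01 18:47:05.069722', '2014-05-01 18:47:05.119994',
                 '2014-05-02 18:47:05.178768', '2014-05-02 18:47:05.230071',
                 '2014-05-02 18:47:05.230071', '2014-05-02 18:47:05.280592',
                 '2014-05-03 18:47:05.332662', '2014-05-03 18:47:05.385109',
                 '2014-05-04 18:47:05.436523', '2014-05-04 18:47:05.486877'],
        'battle_deaths': [34, 25, 26, 15, 15, 14, 26, 25, 62, 41],
        'prisioners': [3, 4, 3, 2, 2, 6, 4, 5, 2, 8]}
df = pd.DataFrame(data, columns=['date', 'battle_deaths', 'prisioners'])
df = df.set_index(pd.DatetimeIndex(df['date']))
del df['date']

# Floor each timestamp to its millisecond bin ('ms' is the newer spelling of
# the 'L' alias) and aggregate only the bins that occur in the data
per_ms = df.groupby(df.index.floor('ms')).sum()

print(per_ms)
```

For the non-empty bins, the sums should agree with `df.resample('1L').sum(min_count=1).dropna()`, apart from the integer columns staying integers.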