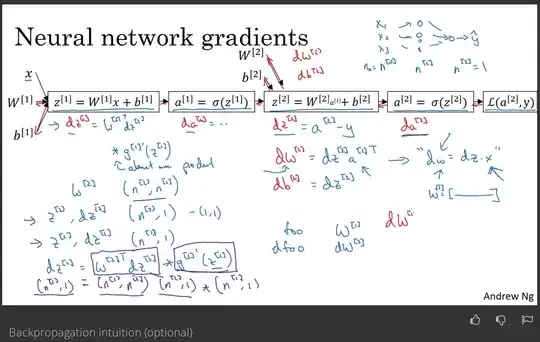

Why would the dimension of $W^{[2]}$ be $(n^{[2]}, n^{[1]})$?

This is a simple linear equation, $z^{[l]} = W^{[l]} a^{[l-1]} + b^{[l]}$, where $l$ is the layer index.

There seems to be an error in the screenshot: the weight matrix $W$ should be transposed. Please correct me if I am wrong.

$W^{[2]}$ are the weights assigned to the neurons in layer 2.

$n^{[1]}$ is the number of neurons in layer 1.
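
To make the shapes concrete, here is a minimal NumPy sketch of the equation for layer 2. It assumes the activations $a^{[1]}$ are stored as a column vector of shape $(n^{[1]}, 1)$, which is my reading of the course's convention. The layer sizes `n1 = 4` and `n2 = 3` and all variable names are made up for illustration.

```python
import numpy as np

# Hypothetical layer sizes, chosen only for illustration.
n1, n2 = 4, 3  # n^{[1]} = 4 neurons in layer 1, n^{[2]} = 3 neurons in layer 2

# Assumption: activations are stored as column vectors.
a1 = np.ones((n1, 1))    # a^{[1]}, shape (n1, 1)
W2 = np.ones((n2, n1))   # W^{[2]}, shape (n2, n1) as in the screenshot
b2 = np.zeros((n2, 1))   # b^{[2]}, shape (n2, 1)

# z^{[2]} = W^{[2]} a^{[1]} + b^{[2]}
z2 = W2 @ a1 + b2
print(z2.shape)          # (3, 1)

# If W is stored as (n1, n2) instead, the product without a
# transpose is not defined for a column-vector a1.
W2_alt = np.ones((n1, n2))
try:
    W2_alt @ a1
    alt_ok = True
except ValueError:
    alt_ok = False

# With an explicit transpose the shapes line up again.
z2_alt = W2_alt.T @ a1 + b2
```

Under that column-vector assumption, a $W^{[2]}$ of shape $(n^{[2]}, n^{[1]})$ multiplies $a^{[1]}$ directly, while a $W^{[2]}$ stored as $(n^{[1]}, n^{[2]})$ would need the transpose written out.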

The screenshot above is from a video in Andrew Ng's Deep Learning course on Coursera.