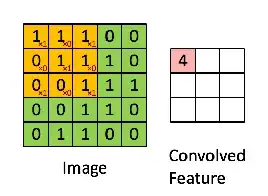

Building on @JahKnows's theoretical answer, here is what the weights of `Conv2D` look like in practice.

```
from keras.models import Sequential
from keras.layers import Conv2D

model = Sequential()
model.add(Conv2D(12, kernel_size=3, input_shape=(25, 25, 1)))

# Just initialized, not fit to any data.
weights = model.get_weights()  # list of arrays: [kernel, bias]

>>> weights[0].shape
(3, 3, 1, 12)
```

So the kernel is a 3x3 grid (9 positions), and each position holds a 1x12 array:

- First 2 dimensions: the kernel size, (3, 3).

- Last 2 dimensions: (1, 12), where 1 is the number of input channels (the colors, from `input_shape`) and 12 is the number of filters.
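The shape reading above can be checked with a small dependency-free sketch. The helper names `conv2d_kernel_shape` and `conv2d_output_shape` are made up for illustration and are not part of Keras; the output-shape formula assumes stride 1 and `'valid'` padding, which is what `Conv2D` uses when neither is specified.

```python
def conv2d_kernel_shape(kernel_size, in_channels, filters):
    """Kernel array layout: (height, width, in_channels, filters)."""
    kh, kw = kernel_size
    return (kh, kw, in_channels, filters)


def conv2d_output_shape(input_shape, kernel_size, filters):
    """Output shape, assuming stride 1 and 'valid' (no) padding."""
    h, w, _ = input_shape
    kh, kw = kernel_size
    return (h - kh + 1, w - kw + 1, filters)


# Same settings as the model in this answer (hypothetical helpers, not Keras calls).
kernel_shape = conv2d_kernel_shape((3, 3), in_channels=1, filters=12)
output_shape = conv2d_output_shape((25, 25, 1), (3, 3), filters=12)
print(kernel_shape)  # (3, 3, 1, 12)
print(output_shape)  # (23, 23, 12)
```

With an RGB input of shape (25, 25, 3), the same reasoning would give a kernel of shape (3, 3, 3, 12).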

Plus 12 bias terms:

- There is also a separate `weights[1]` array of shape (12,) holding one bias per filter, which is added to that filter's output.
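Putting kernel and bias together, a rough sketch of the parameter count and of the range the freshly initialized kernel values should fall in. This assumes the layer uses the Keras defaults (`glorot_uniform` for the kernel, zeros for the bias), since the model above passes no initializers; the function names are illustrative only, not Keras APIs.

```python
import math


def conv2d_param_count(kernel_shape, use_bias=True):
    """Trainable parameters: every kernel entry plus one bias per filter."""
    kh, kw, in_channels, filters = kernel_shape
    n_kernel = kh * kw * in_channels * filters
    n_bias = filters if use_bias else 0
    return n_kernel + n_bias


def glorot_uniform_limit(kernel_shape):
    """Glorot uniform samples from [-limit, limit], limit = sqrt(6 / (fan_in + fan_out))."""
    kh, kw, in_channels, filters = kernel_shape
    fan_in = kh * kw * in_channels   # inputs feeding one output value
    fan_out = kh * kw * filters      # outputs fed by one input value
    return math.sqrt(6.0 / (fan_in + fan_out))


shape = (3, 3, 1, 12)
n_params = conv2d_param_count(shape)
limit = glorot_uniform_limit(shape)
print(n_params)         # 120
print(round(limit, 4))  # 0.2265
```

The 120 should match the parameter count reported by `model.summary()`, and the bound of roughly 0.2265 is consistent with the untrained kernel values printed in this answer, whose largest magnitude is about 0.225.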

```
>>> weights[0]
array([[[[-0.22489263, 0.11462553, 0.1275196 , 0.19356592,
          -0.06204098, 0.10875972, -0.09088454, 0.12002607,
          0.14580582, -0.10627564, 0.04845475, 0.16762014]],

        [[-0.00685272, -0.144605 , 0.00162746, -0.17116429,
          -0.13180375, -0.13356137, 0.02543293, 0.09918924,
          0.19696428, -0.01112208, -0.17443556, 0.105253 ]],

        [[ 0.04283331, 0.1003729 , -0.21573427, -0.08311893,
          -0.0144719 , 0.10843249, -0.1036434 , 0.1704862 ,
          0.22398098, -0.2159951 , 0.13356568, -0.13963732]]],


       [[[-0.00911894, 0.12489821, -0.1453647 , 0.14670904,
          0.17318939, -0.16027464, -0.11050612, -0.19118567,
          0.06857748, 0.18323778, -0.22046578, 0.05927287]],

        [[-0.00602703, -0.18062721, 0.15344848, -0.15143515,
          -0.07210657, 0.177676 , -0.06143558, -0.17020151,
          -0.02092001, 0.19398673, -0.20247248, 0.17286496]],

        [[ 0.22057424, 0.10987107, 0.00975977, 0.00445287,
          0.09941946, 0.03192849, -0.19070472, -0.10779155,
          0.13622199, -0.11289301, -0.06379397, 0.06102996]]],


       [[[-0.11758636, 0.16921164, -0.151184 , -0.06386189,
          0.1991932 , -0.21000272, -0.12173925, -0.03071272,
          0.16692607, -0.12708151, 0.08756261, 0.178169 ]],

        [[-0.05779965, -0.10117687, 0.20407595, -0.21241538,
          -0.16404435, -0.0826612 , 0.02122533, 0.1947081 ,
          -0.09203622, 0.08905725, 0.09665458, -0.06724563]],

        [[-0.22078277, -0.0093862 , 0.02477093, -0.0090203 ,
          0.21535213, -0.16004324, -0.0708347 , -0.02972263,
          0.11906733, 0.05814315, -0.02641977, -0.09178646]]]],
      dtype=float32)

>>> weights[1]
array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.], dtype=float32)
```