I'm starting a project where the task is to identify sneaker types from images. I'm currently reading up on TensorFlow and Torch implementations. My question is: how many images per class are required to reach reasonable classification performance?

2 Answers

From "How few training examples is too few when training a neural network?" on Cross Validated (CV):

It really depends on your dataset, and network architecture. One rule of thumb I have read (2) was a few thousand samples per class for the neural network to start to perform very well. In practice, people try and see.

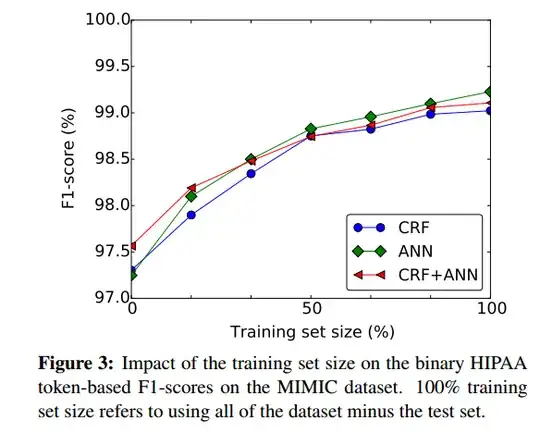

A good way to roughly assess to what extent it could be beneficial to have more training samples is to plot the performance of the neural network based against the size of the training set, e.g. from (1):

(1) Dernoncourt, Franck, Ji Young Lee, Ozlem Uzuner, and Peter Szolovits. "De-identification of Patient Notes with Recurrent Neural Networks." arXiv preprint arXiv:1606.03475 (2016).

(2) Cireşan, Dan C., Ueli Meier, and Jürgen Schmidhuber. "Transfer learning for Latin and Chinese characters with deep neural networks." In The 2012 International Joint Conference on Neural Networks (IJCNN), pp. 1-6. IEEE, 2012. https://scholar.google.com/scholar?cluster=7452424507909578812&hl=en&as_sdt=0,22 ; http://people.idsia.ch/~ciresan/data/ijcnn2012_v9.pdf:

For classification tasks with a few thousand samples per class, the benefit of (unsupervised or supervised) pretraining is not easy to demonstrate.
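
The idea of plotting performance against training-set size (a learning curve) can be sketched in a few lines. This is a minimal illustration, not code from either reference: it assumes each image has already been turned into a feature vector, and it uses a toy nearest-centroid classifier (`nearest_centroid_fit`, a hypothetical stand-in) in place of a real network so the example runs with the Python standard library alone. In practice you would pass your own TensorFlow or Torch training routine as `fit`.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

# A sample is (feature vector, class label). For images the vector could be
# raw pixels or features from a pretrained network (an assumption here).
Sample = Tuple[List[float], str]


def nearest_centroid_fit(train: Sequence[Sample]) -> Callable[[List[float]], str]:
    """Toy stand-in for training a network: returns a predict function that
    assigns each input to the class whose mean vector is closest."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in train:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}

    def predict(x: List[float]) -> str:
        # Pick the class with the smallest squared Euclidean distance.
        return min(centroids,
                   key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])))

    return predict


def error_rate(predict: Callable[[List[float]], str], data: Sequence[Sample]) -> float:
    """Fraction of samples in `data` that `predict` gets wrong."""
    return sum(predict(x) != y for x, y in data) / len(data)


def learning_curve(train: Sequence[Sample], val: Sequence[Sample], sizes: Sequence[int],
                   fit=nearest_centroid_fit, seed: int = 0) -> List[Tuple[int, float, float]]:
    """Train on growing subsets of `train`; return (size, train error, validation error)."""
    shuffled = list(train)
    random.Random(seed).shuffle(shuffled)  # random but reproducible subsets
    curve = []
    for n in sizes:
        subset = shuffled[:n]
        predict = fit(subset)
        curve.append((n, error_rate(predict, subset), error_rate(predict, val)))
    return curve
```

Plotting the two error columns against the subset size gives a curve like the one in (1); if the validation error is still falling at the largest size, more images per class are likely to help.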

The best approach is to collect as much data as you comfortably can. Then get started on the project and build a model.

Next, evaluate your model to see whether it has high bias or high variance.

High variance: after convergence, the cross-validation error is noticeably higher than the training error. When both are plotted against training-set size, there is a significant gap between them.

High bias: the cross-validation error is only slightly higher than the training error, but the training error itself is high when plotted against training-set size. By "plotting against training-set size" I mean that you train on subsets of your training data, keep increasing the subset size, and plot the errors for each size.

If your model has high variance (overfitting), adding more data will usually help. In contrast, if it has high bias (underfitting), adding more training data won't help.
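
The rule above can be written down as a small helper. This is a rough sketch, assuming you already have training and cross-validation error rates for a converged model; the thresholds `target_error` and `max_gap` are hypothetical and depend on your task.

```python
def diagnose(train_error: float, val_error: float,
             target_error: float = 0.05, max_gap: float = 0.02) -> str:
    """Rough bias/variance diagnosis for a converged model.

    target_error: training error you are willing to accept (hypothetical).
    max_gap: largest validation-minus-training gap you still call small (hypothetical).
    """
    high_bias = train_error > target_error               # training error itself is high
    high_variance = (val_error - train_error) > max_gap  # large train/validation gap
    if high_bias and high_variance:
        return "high bias and high variance"
    if high_bias:
        return "high bias"
    if high_variance:
        return "high variance"
    return "ok"
```

For example, a training error of 0.01 with a validation error of 0.20 comes out as "high variance", the case where collecting more sneaker images is most likely to pay off.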

Also, try to get the same number of images for each class; otherwise the dataset can become skewed (many more images of one kind than another).
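
A quick way to check for skew, and one simple way (an assumption, not the only option) to even it out, is to count images per class and randomly undersample every class down to the size of the smallest one. The `(path, label)` pairs and the function names are hypothetical.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

Item = Tuple[str, str]  # (image path, class label), hypothetical layout


def imbalance_ratio(labels: Sequence[str]) -> float:
    """Largest class count divided by smallest; 1.0 means perfectly balanced."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def undersample(items: Sequence[Item], seed: int = 0) -> List[Item]:
    """Randomly keep the same number of images per class (the smallest class size)."""
    by_class: Dict[str, List[Item]] = defaultdict(list)
    for item in items:
        by_class[item[1]].append(item)
    n_min = min(len(group) for group in by_class.values())
    rng = random.Random(seed)
    balanced: List[Item] = []
    for label in sorted(by_class):  # sorted for a reproducible order
        balanced.extend(rng.sample(by_class[label], n_min))
    return balanced
```

Undersampling discards images, so collecting more images for the under-represented classes is preferable when that is possible.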

Also, if you are using TensorFlow, I suggest reading more about Google's Inception image classifier. It is a classifier already trained on a large image database (ImageNet), and you can retrain it on your images; that way, the number of images you need comes down drastically.
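
One way to reuse Inception in TensorFlow is the Keras applications API. The sketch below is an assumption about how you might set it up, not Google's original retraining script: it loads `tf.keras.applications.InceptionV3` with ImageNet weights, freezes it, and adds a new softmax layer for your sneaker classes. `build_sneaker_classifier` and `num_classes` are hypothetical names.

```python
from typing import Optional

import tensorflow as tf

IMG_SIZE = (299, 299)  # InceptionV3's default input resolution


def build_sneaker_classifier(num_classes: int,
                             weights: Optional[str] = "imagenet") -> tf.keras.Model:
    """Frozen InceptionV3 feature extractor plus a new, trainable softmax head."""
    base = tf.keras.applications.InceptionV3(
        include_top=False,            # drop the original 1000-class ImageNet head
        weights=weights,              # "imagenet" downloads the pretrained weights
        input_shape=IMG_SIZE + (3,),
        pooling="avg",                # global average pooling: one 2048-d vector per image
    )
    base.trainable = False            # keep the pretrained features fixed

    inputs = tf.keras.Input(shape=IMG_SIZE + (3,))
    x = base(inputs, training=False)  # run BatchNorm layers in inference mode
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",  # integer class labels
                  metrics=["accuracy"])
    return model
```

Images should be resized to 299×299 and scaled with `tf.keras.applications.inception_v3.preprocess_input` before training. Only the final `Dense` layer is trained, which is why far fewer images per class are needed than when training a network from scratch.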
