I have a collection of TEC data, with one sample per day: day1, day2, day3, day4, and so on.

Case 1:

My task is to train on 3 consecutive days and predict the 4th day. Each day's data is one CSV file of dimension 24x25, and every data point in a CSV file is a pixel.

First, I need to predict day4 (the 4th day) from the training data day1, day2, day3 (three consecutive days), and then calculate the MSE between the predicted day4 data and the original day4 data. Let's call it mse1.

Similarly, I need to predict day5 (the 5th day) from the training data day2, day3, day4, and then calculate mse2 (the MSE between the predicted day5 data and the original day5 data).

I need to predict day6 (the 6th day) from the training data day3, day4, day5, and then calculate mse3 (the MSE between the predicted day6 data and the original day6 data).

..........

Finally, I want to predict day93 from the training data day90, day91, day92, and calculate mse90 (the MSE between the predicted day93 data and the original day93 data).

For this case I want to use an LSTM model, which gives 90 MSE values.
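The windowing scheme described above can be sketched as follows. This is only a minimal illustration on synthetic random maps, not my real TEC files; the helper names `make_windows` and `per_day_mse` are hypothetical, and the placeholder prediction (the mean of the three input days) merely stands in for whatever the LSTM would output.

```python
import numpy as np

def make_windows(maps, window=3):
    """Return (X, y): X[k] stacks days k..k+window-1, y[k] is day k+window."""
    maps = np.asarray(maps, dtype=float)
    X = np.stack([maps[k:k + window] for k in range(len(maps) - window)])
    y = maps[window:]
    return X, y

def per_day_mse(y_true, y_pred):
    """One MSE per target day, averaged over all pixels of that day's map."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return (diff ** 2).reshape(len(diff), -1).mean(axis=1)

# Synthetic stand-in for 93 daily 24x25 maps (random values, not real TEC data)
rng = np.random.default_rng(0)
maps = rng.random((93, 24, 25))

X, y = make_windows(maps, window=3)
print(X.shape, y.shape)  # (90, 3, 24, 25) (90, 24, 25)

# Placeholder prediction: stands in for the LSTM output, one map per target day
placeholder_pred = X.mean(axis=1)
mse = per_day_mse(y, placeholder_pred)  # mse[0] is mse1, ..., mse[89] is mse90
print(mse.shape)  # (90,)
```

With 93 days and a window of 3 there are exactly 90 targets (day4 to day93), which is where the 90 MSE values come from.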

Case 2:

Here I use what is known as the naive forecast, or "random walk" forecast.

The naive approach is:

The guess for any day is simply the map of the previous day: guess that day2 is the same as day1, that day3 is the same as day2, that day4 is the same as day3, ..., that day91 is the same as day90. In other words, predict the next day's data from the current day's data (predicted_data = current_day_data), then calculate the MSE between next_day_data and current_day_data.
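As a minimal sketch of the naive forecast described above, again on synthetic random maps rather than my real data: the function name `naive_forecast_mse` and its `first_target` parameter are hypothetical. Scoring the same target days as Case 1 (day4 to day93) is an assumption made here so that the two sets of MSE values would line up one to one.

```python
import numpy as np

def naive_forecast_mse(maps, first_target=1):
    """MSE of predicting each day as a copy of the previous day.

    maps: array of shape (num_days, rows, cols).
    first_target: 0-based index of the first day to score.
    Returns one MSE per scored day.
    """
    maps = np.asarray(maps, dtype=float)
    if first_target < 1:
        raise ValueError("the first day has no previous day to copy")
    predicted = maps[first_target - 1:-1]  # day t-1 is the guess for day t
    actual = maps[first_target:]
    diff = actual - predicted
    return (diff ** 2).reshape(len(diff), -1).mean(axis=1)

# Synthetic stand-in for 93 daily 24x25 maps (random values, not real TEC data)
rng = np.random.default_rng(0)
maps = rng.random((93, 24, 25))

# Score day4..day93 (0-based index 3 onwards), matching the 90 targets of Case 1
naive_mse = naive_forecast_mse(maps, first_target=3)
print(naive_mse.shape)  # (90,)
```

Each value compares one full 24x25 map with the map of the day before it, so every MSE is an average over 600 pixels.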

import os

import pandas as pd

import numpy as np

from sklearn.linear_model import LinearRegression, Ridge

from sklearn.preprocessing import MinMaxScaler

import matplotlib.pyplot as plt

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Dense

# Paths

data_folder = r'C:\Users\alokj\OneDrive\Desktop\jupyter_proj\All_New_serialdata99_16'

output_folder = r'C:\Users\alokj\OneDrive\Desktop\jupyter_proj\90_days_merged'

# Ensure the output folder exists

os.makedirs(output_folder, exist_ok=True)

# List all CSV files in the folder

csv_files = [f for f in os.listdir(data_folder) if f.endswith('.csv')]

# Sort the files by the day number that follows 'Day' in the filename

import re  # used only for the sort key below

csv_files = sorted(csv_files, key=lambda x: int(re.search(r'Day(\d+)', x).group(1)))

# Prepare data

data_list = [pd.read_csv(os.path.join(data_folder, file), header=None).values for file in csv_files]

data_array = np.array(data_list) # Shape: (num_days, 24, 25)

# Flatten the data for easier handling in regression models

num_days, rows, cols = data_array.shape

data_flattened = data_array.reshape(num_days, -1) # Shape: (num_days, 600)

# Prepare features and target matrix for range (3, num_days)

X = np.array([data_flattened[i-3:i].flatten() for i in range(3, num_days)]) # Shape: (num_days-3, 1800)

y = data_flattened[3:num_days] # Target is the 4th day in each sequence

# Train-Test Split and Validation (Separate fixed split)

train_size = int(0.8 * len(X)) # 80% for training

print(train_size)

X_train = X[:train_size]

y_train = y[:train_size]

X_test = X[train_size:]

y_test = y[train_size:]

# Scaling the data

scaler_X = MinMaxScaler()

scaler_X.fit(X_train) # Fit on training set

X_train_scaled = scaler_X.transform(X_train)

X_test_scaled = scaler_X.transform(X_test)

scaler_y = MinMaxScaler()

scaler_y.fit(y_train) # Fit on training set

y_train_scaled = scaler_y.transform(y_train)

y_test_scaled = scaler_y.transform(y_test)

XX = X_test_scaled[:90]

yy = y_test[:90] # Target for validation

# LSTM Model

# Reshape data for LSTM (num_samples, timesteps, features)

X_train_lstm = X_train_scaled.reshape(X_train_scaled.shape[0], 3, -1)

#y_train_lstm = y_train_scaled.reshape(y_train_scaled.shape[0], 3, -1)

X_test_lstm = X_test_scaled.reshape(X_test_scaled.shape[0], 3, -1)

XX_lstm = XX.reshape(XX.shape[0], 3, -1)

# Define and train the LSTM model

lstm_model = Sequential([

    LSTM(64, activation='tanh', return_sequences=False, input_shape=(3, X_train_lstm.shape[2])),

    Dense(y_train_scaled.shape[1])

])

lstm_model.compile(optimizer='adam', loss='mse')

lstm_model.fit(X_train_lstm, y_train_scaled, epochs=20, batch_size=16, verbose=1)

# Validation using LSTM

yy_pred_lstm = lstm_model.predict(XX_lstm)

yy_pred_lstm = scaler_y.inverse_transform(yy_pred_lstm)

# Calculate residuals for LSTM

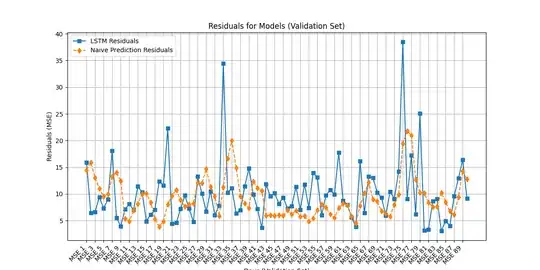

residuals_lstm = [np.mean((yy[i] - yy_pred_lstm[i])**2) for i in range(len(yy))]

# Naive Prediction

residuals_naive = [np.mean((X_test[i] - X_test[i - 1]) ** 2) for i in range(1, 91)]

# Plot residuals for all models

days = [f'Day {i+1}' for i in range(90)] # Labels 'Day 1' to 'Day 90', one per validation sample

plt.figure(figsize=(12, 6))

plt.plot(days, residuals_lstm, label='LSTM Residuals', marker='s')

plt.plot(days, residuals_naive, label='Naive Prediction Residuals', marker='d', linestyle='--')

# Configure plot

plt.xticks(ticks=range(0, len(days), 2), labels=[f'MSE {i+1}' for i in range(0, len(days), 2)], rotation=45, ha='right')

plt.xlabel('Days (Validation Set)')

plt.ylabel('Residuals (MSE)')

plt.title('Residuals for Models (Validation Set)')

plt.legend()

plt.grid(True)

# Save and show plot

plt.savefig(os.path.join(output_folder, 'models_comparison_with_naive.png'))

plt.show()

I have read many research papers, and they say that LSTMs work well on TEC data, although none of them mention the naive method. My output graph shows that the LSTM and the naive forecast are competitive with each other. My question is: could anybody review my code, especially the LSTM model, and point out any flaw that I am not aware of?