I am developing a neural network on the Home Credit Default Risk dataset.

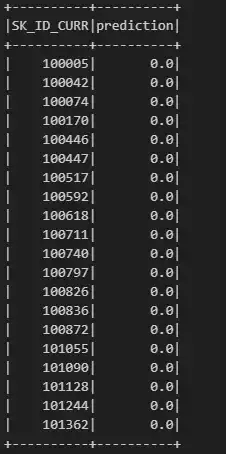

The prediction should be a value between 0.0 and 1.0, but my model outputs 0.0 for every row.
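
For reference, with a probabilistic classifier such as Spark's `MultilayerPerceptronClassifier`, the `prediction` column holds a class label (0.0 or 1.0), and the per-class scores are in the `probability` vector. When no custom thresholds are set, the label is the class with the highest probability. On data where few rows have `TARGET == 1`, a model whose class-1 probability never exceeds 0.5 therefore predicts 0.0 for every row, even though its probabilities vary. The plain-Python sketch below mimics that argmax step on made-up probability pairs (not output from this model):

```python
# Plain-Python illustration of how a class label is derived from class probabilities.
# Each pair is [P(TARGET == 0), P(TARGET == 1)]; the values are made up.
probabilities = [
    [0.93, 0.07],
    [0.81, 0.19],
    [0.64, 0.36],
    [0.97, 0.03],
]

def argmax_label(probs):
    """Return the index of the largest probability as a float label (0.0 or 1.0)."""
    return float(max(range(len(probs)), key=lambda i: probs[i]))

predictions = [argmax_label(p) for p in probabilities]
positive_scores = [p[1] for p in probabilities]

print(predictions)      # every label is 0.0 because no P(TARGET == 1) exceeds 0.5
print(positive_scores)  # the class-1 scores still differ from row to row
```

In PySpark 3.0 and later, `pyspark.ml.functions.vector_to_array` converts the `probability` vector into an array, so element `[1]` (the score for `TARGET == 1`) can be selected as its own column. That column, not `prediction`, carries the 0.0 to 1.0 value described above.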

My code:

```python
from pyspark.ml import Pipeline
from pyspark.ml.classification import MultilayerPerceptronClassifier
from pyspark.ml.evaluation import BinaryClassificationEvaluator
from pyspark.ml.feature import OneHotEncoder, StandardScaler, StringIndexer, VectorAssembler
from pyspark.sql.functions import col

# Assuming final_train_df and final_test_df are already loaded and cleaned dataframes,
# and `spark` is the active SparkSession

categorical_columns = ['NAME_CONTRACT_TYPE', 'CODE_GENDER', 'FLAG_OWN_CAR', 'FLAG_OWN_REALTY',
                       'NAME_TYPE_SUITE', 'NAME_INCOME_TYPE', 'NAME_EDUCATION_TYPE',
                       'NAME_FAMILY_STATUS', 'NAME_HOUSING_TYPE', 'WEEKDAY_APPR_PROCESS_START',
                       'ORGANIZATION_TYPE']

# StringIndexer and OneHotEncoder for categorical columns
# (handleInvalid='skip' filters out rows with null or unseen categories, in test_df too)
indexers = [StringIndexer(inputCol=c, outputCol=c + '_index', handleInvalid='skip') for c in categorical_columns]
encoders = [OneHotEncoder(inputCol=c + '_index', outputCol=c + '_ohe') for c in categorical_columns]

# Create a pipeline for encoding
pipeline = Pipeline(stages=indexers + encoders)

# Fit the pipeline once on the training data and reuse the fitted model for both sets
encoding_model = pipeline.fit(final_train_df)
train_df = encoding_model.transform(final_train_df)
test_df = encoding_model.transform(final_test_df)

# Drop the original and indexed categorical columns (drop() takes column names as separate arguments)
cols_to_drop = categorical_columns + [c + '_index' for c in categorical_columns]
train_df = train_df.drop(*cols_to_drop)
test_df = test_df.drop(*cols_to_drop)

# Cast specific columns to double
columns_to_cast = ['AMT_REQ_CREDIT_BUREAU_HOUR', 'AMT_REQ_CREDIT_BUREAU_DAY', 'AMT_REQ_CREDIT_BUREAU_WEEK',
                   'AMT_REQ_CREDIT_BUREAU_MON', 'AMT_REQ_CREDIT_BUREAU_QRT', 'AMT_REQ_CREDIT_BUREAU_YEAR']
for col_name in columns_to_cast:
    train_df = train_df.withColumn(col_name, col(col_name).cast("double"))
    test_df = test_df.withColumn(col_name, col(col_name).cast("double"))

print("Encoding complete")

# Assemble feature vector
ohe_columns = [c + '_ohe' for c in categorical_columns]
numerical_columns = [c for c in train_df.columns if c not in ohe_columns + ['SK_ID_CURR', 'TARGET']]
print(f"Numerical columns: {numerical_columns}")
print(f"One-hot encoded columns: {ohe_columns}")

# Remove existing 'features' and 'scaled_features' columns if they exist
# (loop variable is `c` so it no longer shadows pyspark.sql.functions.col)
for c in ['features', 'scaled_features']:
    if c in train_df.columns:
        train_df = train_df.drop(c)
    if c in test_df.columns:
        test_df = test_df.drop(c)

print("Assembling feature vector...")
assembler = VectorAssembler(inputCols=numerical_columns + ohe_columns, outputCol="features")
train_df = assembler.transform(train_df)
test_df = assembler.transform(test_df)
print("Feature vector assembly complete.")

print("Scaling the features...")
scaler = StandardScaler(inputCol="features", outputCol="scaled_features")
scaler_model = scaler.fit(train_df)
train_df = scaler_model.transform(train_df)
test_df = scaler_model.transform(test_df)
print("Scaling complete.")

# Neural network structure
# The input layer size must equal the length of the assembled feature vector
num_features = len(train_df.select('scaled_features').first()['scaled_features'])

layers = [
    num_features,  # Number of input features (173 in my run)
    64,            # Hidden layer size
    32,            # Hidden layer size
    2              # Number of classes
]
print(f"Neural network layers: {layers}")

print("Initializing the Multilayer Perceptron Classifier...")
mlp = MultilayerPerceptronClassifier(
    featuresCol='scaled_features',
    labelCol='TARGET',
    maxIter=100,
    layers=layers,
    blockSize=128,
    seed=1234
)

print("Training the model...")
mlp_model = mlp.fit(train_df)
print("Model training complete.")

print("Making predictions on the training set and checking for overfitting/underfitting...")
train_predictions = mlp_model.transform(train_df)

print("Evaluating the model...")
# Evaluate the model
evaluator = BinaryClassificationEvaluator(labelCol='TARGET', rawPredictionCol='rawPrediction', metricName='areaUnderROC')
auc_train = evaluator.evaluate(train_predictions)
print(f'Training AUC: {auc_train}')

print("Making predictions on the test set...")
# Make predictions on the test set
test_predictions = mlp_model.transform(test_df)

# Show the predictions
test_predictions.select('SK_ID_CURR', 'prediction', 'probability').show()

print("Preparing the submission file...")
# Prepare the submission file
submission = test_predictions.select('SK_ID_CURR', 'prediction')
submission.show()

# Write the DataFrame to a temporary directory
temp_path = './temp_prediction'
submission.write.csv(temp_path, header=True)

combined_df = spark.read.csv(temp_path, header=True, inferSchema=True)
combined_df = combined_df.repartition(1)

# Spark writes final_prediction.csv as a directory containing a single part file
final_path = './final_prediction.csv'
combined_df.write.csv(final_path, header=True)
print("Single CSV file saved.")
```
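
A related check on the evaluation step in this code: `BinaryClassificationEvaluator` with `metricName='areaUnderROC'` reads the `rawPrediction` column and measures how well its class-1 values rank positive rows above negative ones, independent of any cut-off. It says nothing about the thresholded `prediction` column, so a reasonable training AUC can coexist with 0.0 in every row. The sketch below computes ROC AUC in plain Python by comparing all (positive, negative) pairs, on hypothetical labels and scores:

```python
# Rank-based ROC AUC: the fraction of (positive, negative) pairs in which the
# positive row gets the higher score; ties count as half. Data are hypothetical.
labels = [0, 0, 1, 0, 1, 0, 0, 0]
scores = [0.05, 0.10, 0.40, 0.08, 0.30, 0.20, 0.02, 0.15]

def roc_auc(labels, scores):
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# A 0.5 cut-off, as an argmax over two probabilities would apply
thresholded = [1 if s > 0.5 else 0 for s in scores]

print(thresholded)              # all zeros: no score crosses 0.5
print(roc_auc(labels, scores))  # every positive outranks every negative here
```

The point of the toy data is only that the two views can disagree completely: the ranking is perfect while the hard labels are all 0.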

I have tried changing some parameters of the neural network, and I have also detected and removed some outliers.
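
Tuning hyperparameters or removing outliers leaves the class balance essentially unchanged, so it is worth checking directly: only a small share of Home Credit applicants (roughly 8% in the training file) have `TARGET == 1`, which lets a classifier reach high accuracy by predicting 0 everywhere. A minimal sketch of the arithmetic, using a hypothetical 92/8 label split rather than the real data:

```python
from collections import Counter

# Hypothetical label column standing in for TARGET
labels = [0] * 92 + [1] * 8

counts = Counter(labels)
n_pos, n_neg = counts[1], counts[0]
positive_rate = n_pos / len(labels)
majority_baseline_accuracy = n_neg / len(labels)

# Factor by which the minority class would have to be replicated to match the
# majority class (one common rebalancing option; undersampling is another)
oversample_factor = n_neg / n_pos

print(f"positive rate: {positive_rate:.2f}")
print(f"accuracy of always predicting 0: {majority_baseline_accuracy:.2f}")
print(f"minority oversampling factor: {oversample_factor:.1f}")
```

In Spark the same counts come from `train_df.groupBy('TARGET').count()`. Rebalancing the training data before `mlp.fit` is one possible follow-up, though whether it helps on this dataset is untested here.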

What could I check?
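
One more thing to check is the decision threshold. If hard 0/1 labels are needed at all, the implicit 0.5 cut-off is seldom a good fit for imbalanced data, and a threshold can instead be chosen on held-out rows. A minimal sketch, assuming validation labels and class-1 scores have been collected into Python lists (the values below are hypothetical), that tries each observed score as a threshold and keeps the one with the best F1:

```python
# Hypothetical validation labels and P(TARGET == 1) scores
labels = [0, 0, 1, 0, 1, 0, 1, 0, 0, 0]
scores = [0.05, 0.12, 0.35, 0.08, 0.22, 0.30, 0.41, 0.02, 0.15, 0.10]

def f1_at(threshold, labels, scores):
    """F1 score of the rule 'predict 1 when score >= threshold'."""
    tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
    fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
    fn = sum(1 for y, s in zip(labels, scores) if s < threshold and y == 1)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Try each observed score as a candidate threshold and keep the best one
best_threshold = max(sorted(set(scores)), key=lambda t: f1_at(t, labels, scores))

print(best_threshold, f1_at(best_threshold, labels, scores))
print(f1_at(0.5, labels, scores))  # a 0.5 cut-off finds no positives on this data
```

Choosing the threshold on the training rows themselves would overstate its quality, which is why the sketch assumes a separate validation split.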