I am training an ANN with `Sequential` (a class from the Keras API in TensorFlow). While tuning the model's architecture, I came across something I have not seen before.

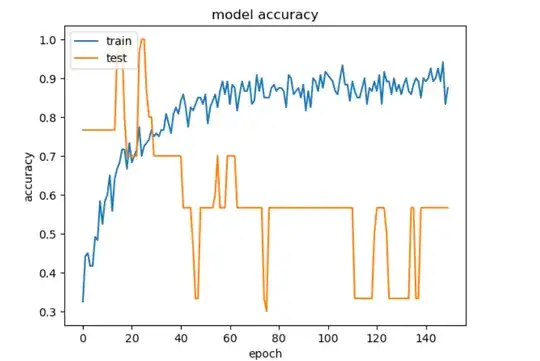

The test accuracy curve looks odd: it is not a smooth curve, and its shape is different from what I expected.

Model

    import pandas as pd
    import matplotlib.pyplot as plt
    from sklearn.model_selection import train_test_split
    from tensorflow.keras import regularizers
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Dropout, BatchNormalization

    # `data` is assumed to be a pandas DataFrame loaded earlier (not shown)
    features = data[['Vcom', 'T', 'Vair']]
    labels = data[['COP', 'CC']]

    features_train, features_test, labels_train, labels_test = train_test_split(
        features, labels, test_size=0.2, random_state=42)

    l2_regularizer = regularizers.l2(0.01)

    model = Sequential()
    model.add(Dense(64, activation='relu', input_shape=(3,), kernel_regularizer=l2_regularizer))
    model.add(BatchNormalization())
    model.add(Dropout(0.6))
    model.add(Dense(32, activation='relu', kernel_regularizer=l2_regularizer))
    model.add(BatchNormalization())
    model.add(Dropout(0.6))
    model.add(Dense(32, activation='relu'))
    model.add(BatchNormalization())
    model.add(Dense(2))  # 2 output neurons, one each for COP and CC

    model.compile(optimizer='adam', loss='mean_squared_error', metrics=['accuracy'])

    model_history = model.fit(features_train, labels_train, epochs=150, batch_size=32,
                              validation_data=(features_test, labels_test))

    print(model_history.history.keys())

    # summarize history for accuracy
    plt.plot(model_history.history['accuracy'])
    plt.plot(model_history.history['val_accuracy'])
    plt.title('model accuracy')
    plt.ylabel('accuracy')
    plt.xlabel('epoch')
    plt.legend(['train', 'test'], loc='upper left')
    plt.show()
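
One thing I suspect, but have not confirmed, is that `'accuracy'` may not be a meaningful metric here, since `COP` and `CC` are continuous targets. My understanding (an assumption on my part) is that for a two-column output Keras resolves the string `'accuracy'` to categorical accuracy, which only checks whether the largest column is the same in the prediction and the target. The sketch below is plain Python on made-up numbers, not my real data or the Keras implementation; `argmax_accuracy` and `mean_absolute_error` are hypothetical helpers written only for illustration.

```python
def argmax_accuracy(y_true, y_pred):
    """Fraction of rows where the index of the largest column matches."""
    hits = 0
    for t, p in zip(y_true, y_pred):
        hits += t.index(max(t)) == p.index(max(p))
    return hits / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference over all rows and columns."""
    total, count = 0.0, 0
    for t, p in zip(y_true, y_pred):
        for a, b in zip(t, p):
            total += abs(a - b)
            count += 1
    return total / count


# Made-up (COP, CC) rows, for illustration only
y_true = [[3.0, 5.0], [2.5, 4.0], [4.0, 3.5], [3.2, 6.1]]
close_pred = [[3.1, 4.9], [2.6, 4.1], [3.7, 3.8], [3.1, 6.0]]  # small errors
far_pred = [[1.0, 9.0], [0.5, 8.0], [9.0, 1.0], [0.0, 9.9]]    # large errors

for name, pred in [("close", close_pred), ("far", far_pred)]:
    print(name,
          "argmax accuracy:", argmax_accuracy(y_true, pred),
          "MAE:", round(mean_absolute_error(y_true, pred), 4))
```

In this toy case the predictions with the larger errors get the higher "accuracy" (1.0 versus 0.75), because the score only depends on which column is larger. If the same thing is happening in my model, the value could jump in steps rather than change smoothly, and a regression metric such as mean absolute error might be the more informative curve to plot.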

My questions are:

Why is the test accuracy showing these odd patterns?

How can I fix this problem?